Macros

| Directive | Definition |
| --- | --- |
| #define | OBSOLETE_WORKAROUNDS 0 |
| #define | PERF_EVENTS_OPENED 0x01 |
| #define | PERF_EVENTS_RUNNING 0x02 |
| #define | HANDLE_STRING_ERROR {fprintf(stderr,"%s:%i unexpected string function error.\n",__FILE__,__LINE__); exit(-1);} |
| #define | PAPI_REFRESH_VALUE 1 |
| #define | READ_BUFFER_SIZE (3 + (2 * PERF_EVENT_MAX_MPX_COUNTERS)) |

Variables

| Type | Name |
| --- | --- |
| papi_vector_t | _perf_event_vector |
| struct native_event_table_t | perf_native_event_table |
| static int | our_cidx |
| static int | exclude_guest_unsupported |

Macro Definition Documentation

◆ HANDLE_STRING_ERROR

#define HANDLE_STRING_ERROR {fprintf(stderr,"%s:%i unexpected string function error.\n",__FILE__,__LINE__); exit(-1);}

Definition at line 70 of file perf_event.c.
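
A minimal sketch of how a macro of this shape is typically used: check the return value of a string function and abort with the file and line if it failed or truncated. The helper `format_event_name` and its output format are hypothetical and do not come from perf_event.c; only the macro body is taken from the listing.

```c
#include <stdio.h>
#include <stdlib.h>

/* Macro body copied from the listing above. */
#define HANDLE_STRING_ERROR {fprintf(stderr,"%s:%i unexpected string function error.\n",__FILE__,__LINE__); exit(-1);}

/* Hypothetical helper (not part of perf_event.c): writes "name:u=0x<umask>"
 * into buf. If snprintf reports an error or the result does not fit,
 * the macro prints the location and terminates the process. */
int format_event_name(char *buf, size_t len, const char *name, unsigned umask)
{
    int n = snprintf(buf, len, "%s:u=0x%x", name, umask);
    if (n < 0 || (size_t)n >= len) HANDLE_STRING_ERROR;
    return n; /* number of characters written, excluding the terminator */
}
```

Because the macro expands to a braced block rather than a `do { ... } while (0)` statement, placing it directly before an `else` would not compile; the sketch avoids that pattern.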

◆ OBSOLETE_WORKAROUNDS

#define OBSOLETE_WORKAROUNDS 0

Definition at line 61 of file perf_event.c.

◆ PAPI_REFRESH_VALUE

#define PAPI_REFRESH_VALUE 1

Definition at line 88 of file perf_event.c.

◆ PERF_EVENTS_OPENED

#define PERF_EVENTS_OPENED 0x01

Definition at line 64 of file perf_event.c.

◆ PERF_EVENTS_RUNNING

#define PERF_EVENTS_RUNNING 0x02

Definition at line 65 of file perf_event.c.
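
The values 0x01 and 0x02 are distinct single bits, so the two macros can be combined in one state word. The sketch below illustrates that general bit-flag pattern; the `demo_ctx_t` type and the `demo_*` functions are hypothetical, and this listing does not show which field in perf_event.c actually holds these bits.

```c
#define PERF_EVENTS_OPENED  0x01
#define PERF_EVENTS_RUNNING 0x02

/* Hypothetical container for the state bits (illustration only). */
typedef struct {
    unsigned int state;
} demo_ctx_t;

/* Mark the events as opened. */
void demo_open(demo_ctx_t *c)  { c->state |= PERF_EVENTS_OPENED; }

/* Mark the events as running; the opened bit is left untouched. */
void demo_start(demo_ctx_t *c) { c->state |= PERF_EVENTS_RUNNING; }

/* Clear only the running bit. */
void demo_stop(demo_ctx_t *c)  { c->state &= ~(unsigned int)PERF_EVENTS_RUNNING; }

/* Nonzero when the running bit is set. */
int demo_is_running(const demo_ctx_t *c)
{
    return (c->state & PERF_EVENTS_RUNNING) != 0;
}
```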

◆ READ_BUFFER_SIZE

#define READ_BUFFER_SIZE (3 + (2 * PERF_EVENT_MAX_MPX_COUNTERS))

Definition at line 500 of file perf_event.c.
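
The shape `3 + 2 * N` matches the group read layout described in the perf_event_open(2) manual page when PERF_FORMAT_GROUP is combined with both time fields and PERF_FORMAT_ID: one event count, two time values, then a value and an id per event. Whether perf_event.c reads with exactly that format is an assumption here, and the value given to `PERF_EVENT_MAX_MPX_COUNTERS` below is a placeholder, not the value PAPI uses. The helper names are hypothetical.

```c
#include <stddef.h>

/* Placeholder value for illustration only; the real constant is defined elsewhere in PAPI. */
#define PERF_EVENT_MAX_MPX_COUNTERS 4
#define READ_BUFFER_SIZE (3 + (2 * PERF_EVENT_MAX_MPX_COUNTERS))

/* Number of 64-bit words returned by a group read of n_events under the
 * assumed layout: nr, time_enabled, time_running, then {value, id} per event. */
size_t group_read_words(size_t n_events)
{
    return 3 + 2 * n_events;
}

/* Returns 1 if a group read of n_events fits in READ_BUFFER_SIZE words, else 0. */
int fits_in_read_buffer(size_t n_events)
{
    return group_read_words(n_events) <= (size_t)READ_BUFFER_SIZE;
}
```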

Function Documentation

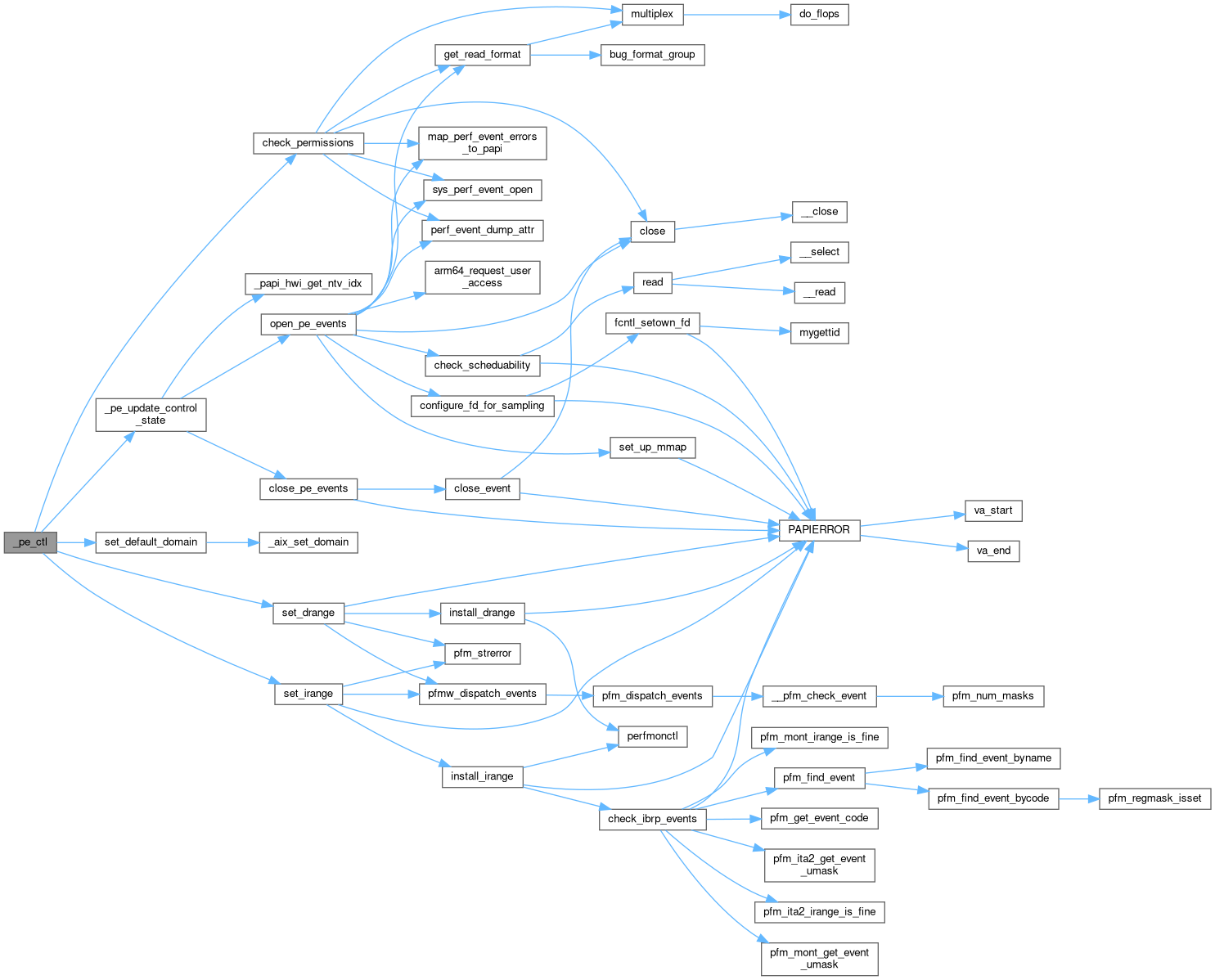

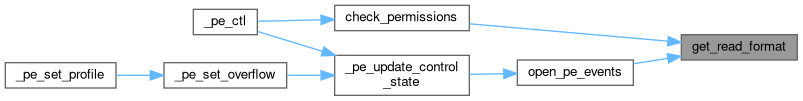

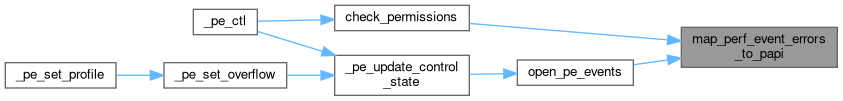

◆ _pe_ctl()

|

static |

Definition at line 1670 of file perf_event.c.

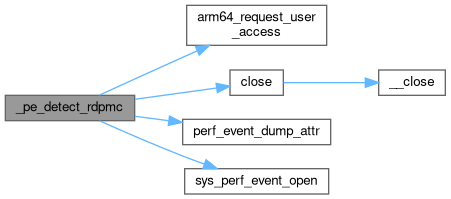

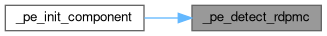

◆ _pe_detect_rdpmc()

|

static |

Definition at line 2357 of file perf_event.c.

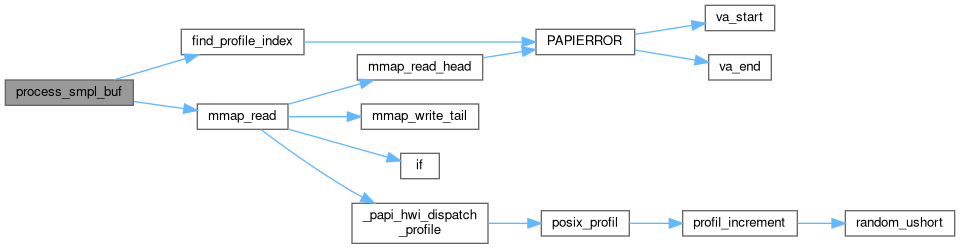

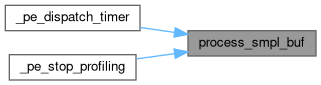

◆ _pe_dispatch_timer()

|

static |

Definition at line 1969 of file perf_event.c.

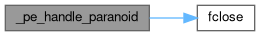

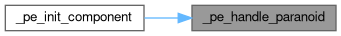

◆ _pe_handle_paranoid()

|

static |

Definition at line 2446 of file perf_event.c.

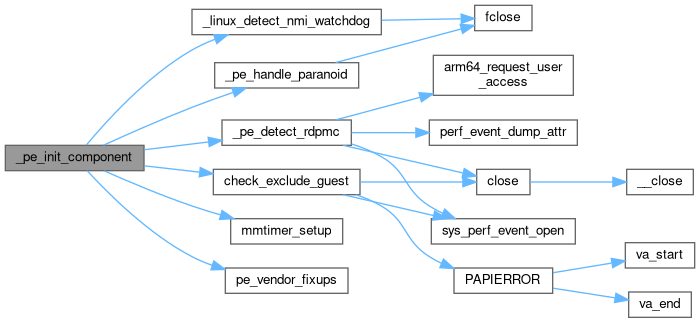

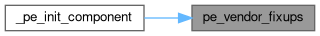

◆ _pe_init_component()

Definition at line 2536 of file perf_event.c.

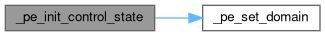

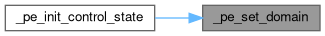

◆ _pe_init_control_state()

static

Definition at line 1843 of file perf_event.c.

◆ _pe_init_thread()

static

Definition at line 1034 of file perf_event.c.

◆ _pe_ntv_code_to_descr()

Definition at line 1895 of file perf_event.c.

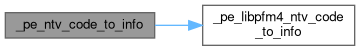

◆ _pe_ntv_code_to_info()

static

Definition at line 1904 of file perf_event.c.

◆ _pe_ntv_code_to_name()

Definition at line 1886 of file perf_event.c.

◆ _pe_ntv_enum_events()

Definition at line 1872 of file perf_event.c.

◆ _pe_ntv_name_to_code()

Definition at line 1879 of file perf_event.c.

◆ _pe_rdpmc_read()

|

static |

Definition at line 1126 of file perf_event.c.

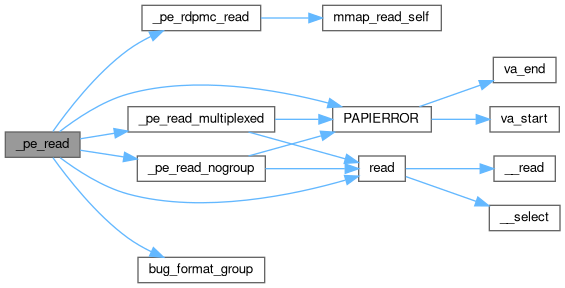

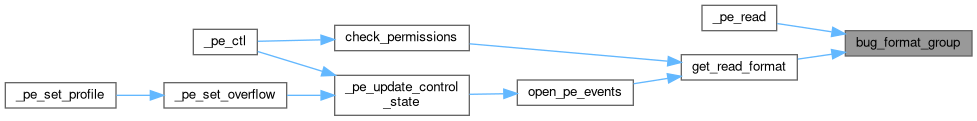

◆ _pe_read()

static

Definition at line 1295 of file perf_event.c.

◆ _pe_read_multiplexed()

|

static |

Definition at line 1185 of file perf_event.c.

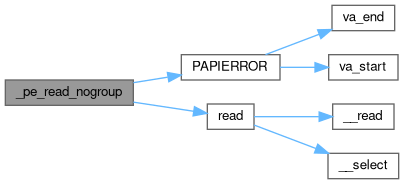

◆ _pe_read_nogroup()

static

Definition at line 1257 of file perf_event.c.

◆ _pe_reset()

static

Definition at line 1057 of file perf_event.c.

◆ _pe_set_domain()

|

static |

Definition at line 1008 of file perf_event.c.

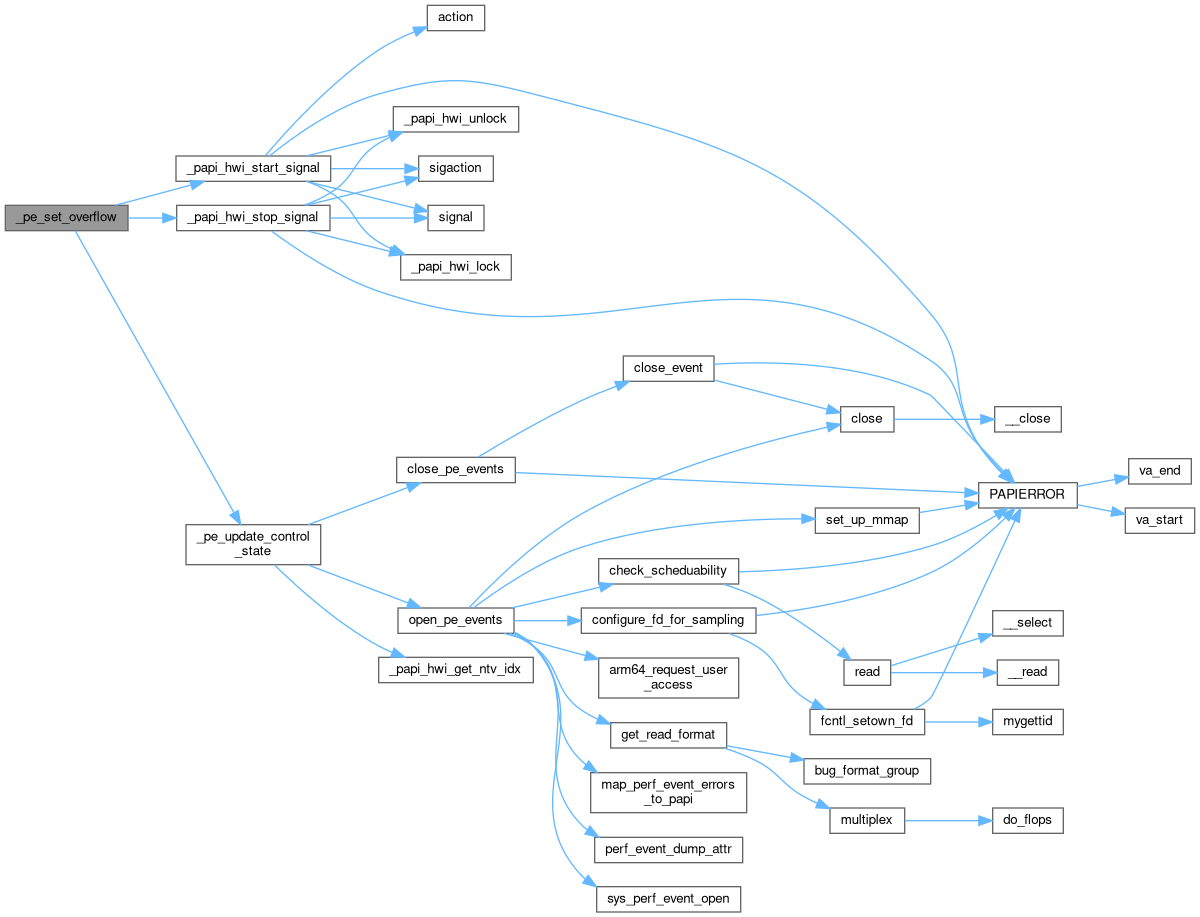

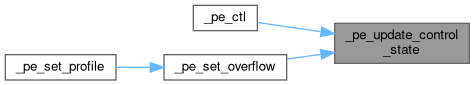

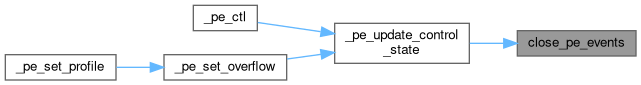

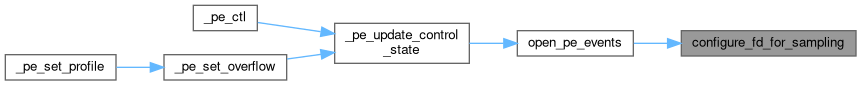

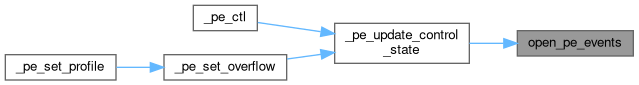

◆ _pe_set_overflow()

|

static |

Definition at line 2152 of file perf_event.c.

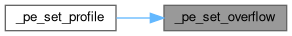

◆ _pe_set_profile()

static

Definition at line 2251 of file perf_event.c.

◆ _pe_shutdown_component()

static

Definition at line 2321 of file perf_event.c.

◆ _pe_shutdown_thread()

static

Definition at line 1023 of file perf_event.c.

◆ _pe_start()

|

static |

Definition at line 1448 of file perf_event.c.

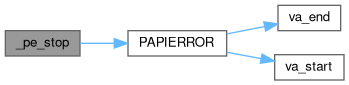

◆ _pe_stop()

|

static |

Definition at line 1498 of file perf_event.c.

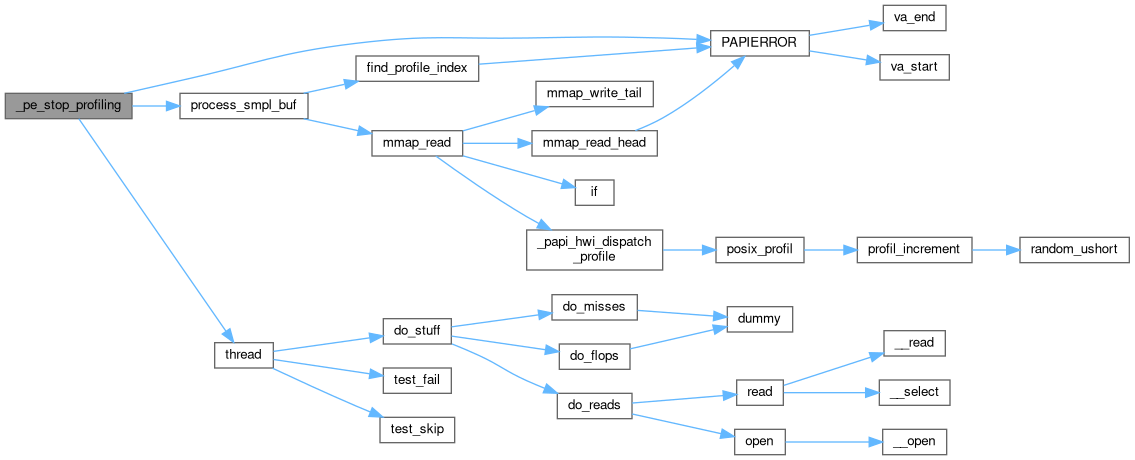

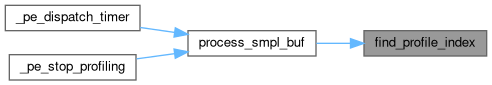

◆ _pe_stop_profiling()

|

static |

Definition at line 2120 of file perf_event.c.

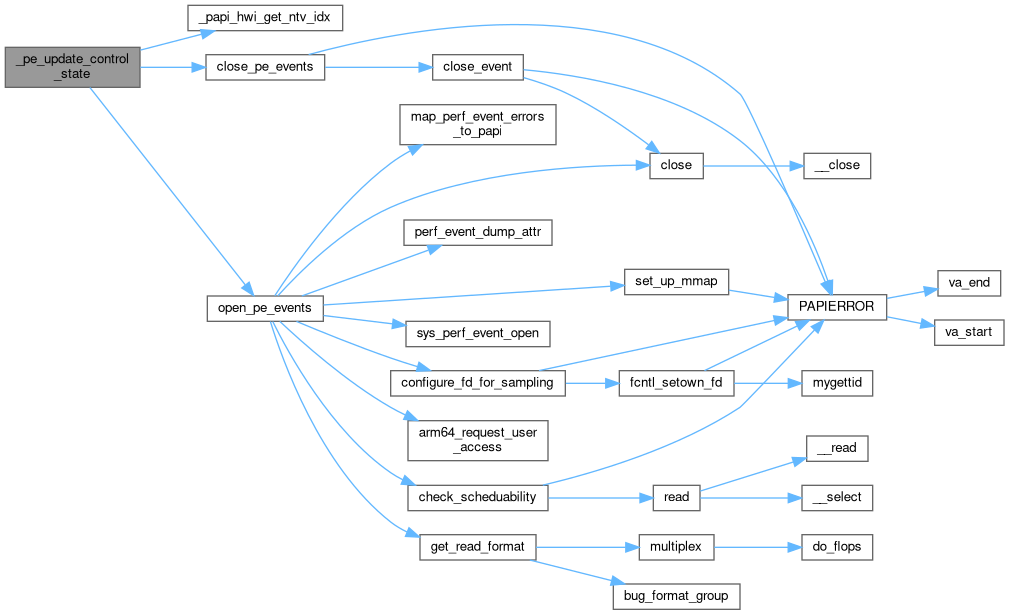

◆ _pe_update_control_state()

static

Definition at line 1540 of file perf_event.c.

◆ _pe_write()

|

static |

Definition at line 1092 of file perf_event.c.

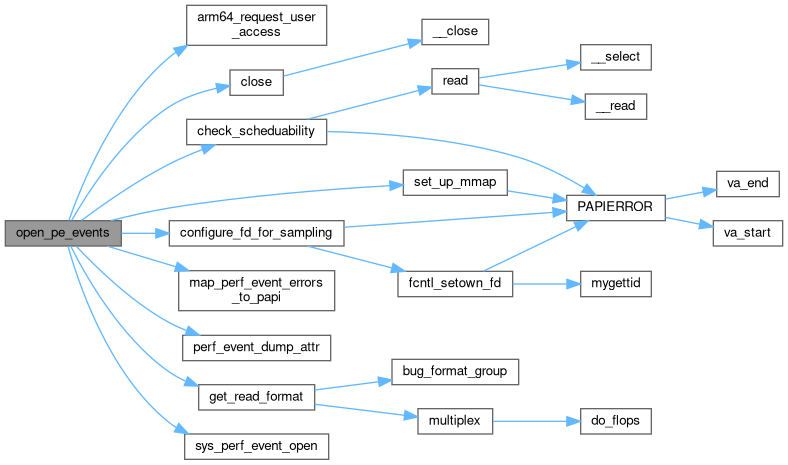

◆ arm64_request_user_access()

inline static

Definition at line 686 of file perf_event.c.

◆ bug_format_group()

|

static |

Definition at line 181 of file perf_event.c.

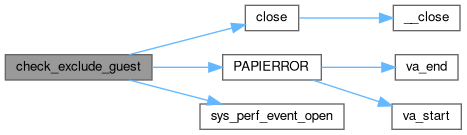

◆ check_exclude_guest()

| void check_exclude_guest | ( | void | ) |

Definition at line 278 of file perf_event.c.

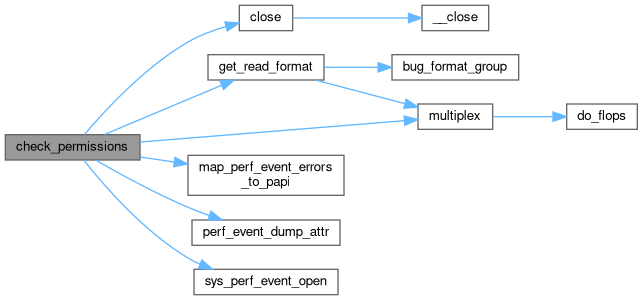

◆ check_permissions()

static

Check if the current set of options is supported by

Definition at line 439 of file perf_event.c.

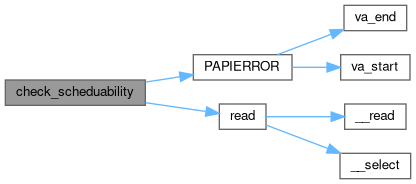

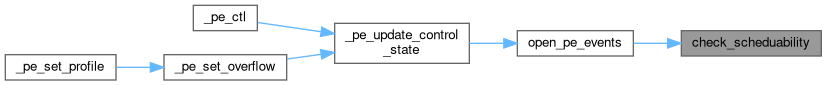

◆ check_scheduability()

|

static |

Definition at line 510 of file perf_event.c.

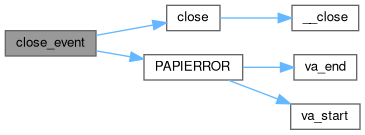

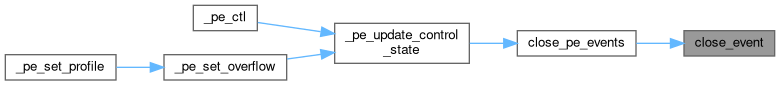

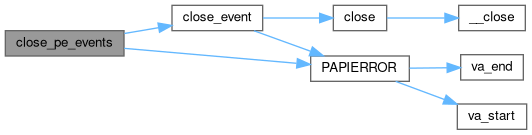

◆ close_event()

static

Definition at line 904 of file perf_event.c.

◆ close_pe_events()

|

static |

Definition at line 938 of file perf_event.c.

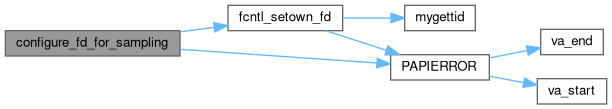

◆ configure_fd_for_sampling()

|

static |

Definition at line 585 of file perf_event.c.

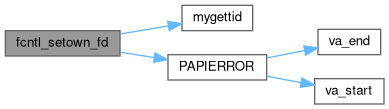

◆ fcntl_setown_fd()

Definition at line 222 of file perf_event.c.

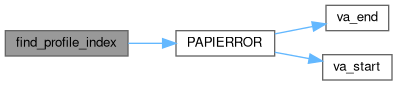

◆ find_profile_index()

static

Definition at line 1917 of file perf_event.c.

◆ get_read_format()

static

Definition at line 247 of file perf_event.c.

◆ map_perf_event_errors_to_papi()

Definition at line 389 of file perf_event.c.

◆ open_pe_events()

static

Definition at line 693 of file perf_event.c.

◆ pe_vendor_fixups()

static

Definition at line 117 of file perf_event.c.

◆ perf_event_dump_attr()

static

Definition at line 325 of file perf_event.c.

◆ process_smpl_buf()

static

Definition at line 1944 of file perf_event.c.

◆ set_up_mmap()

static

Definition at line 631 of file perf_event.c.

Variable Documentation

◆ _perf_event_vector

papi_vector_t _perf_event_vector

Definition at line 73 of file perf_event.c.

◆ exclude_guest_unsupported

static

Definition at line 78 of file perf_event.c.

◆ our_cidx

static

Definition at line 77 of file perf_event.c.

◆ perf_native_event_table

struct native_event_table_t perf_native_event_table

Definition at line 76 of file perf_event.c.