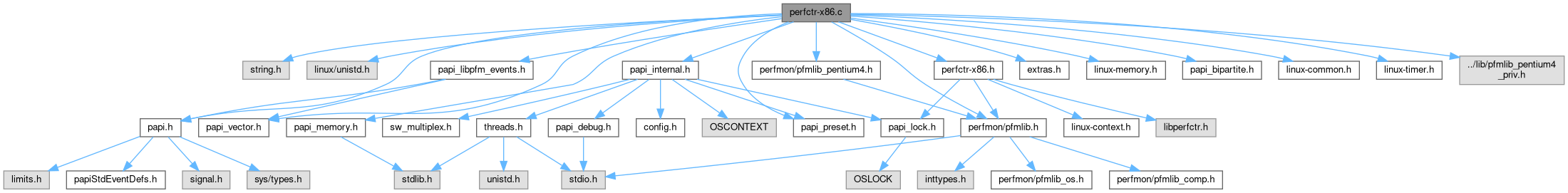

Go to the source code of this file.

Macros

| Macro | Value |
|---|---|
| `P4_VEC` | `"SSE"` |
| `P4_FPU` | `" X87 SSE_DP"` |
| `AMD_FPU` | `"SPECULATIVE"` |
| `P4_REPLAY_REAL_MASK` | `0x00000003` |

Variables

| Type | Name |
|---|---|
| `papi_mdi_t` | `_papi_hwi_system_info` |
| `papi_vector_t` | `_perfctr_vector` |
| `pentium4_escr_reg_t` | `pentium4_escrs[]` |
| `pentium4_cccr_reg_t` | `pentium4_cccrs[]` |
| `pentium4_event_t` | `pentium4_events[]` |
| `static pentium4_replay_regs_t` | `p4_replay_regs[]` |
| `static int` | `pfm2intel[]` |

Macro Definition Documentation

◆ AMD_FPU

`#define AMD_FPU "SPECULATIVE"`

Definition at line 72 of file perfctr-x86.c.

◆ P4_FPU

`#define P4_FPU " X87 SSE_DP"`

Definition at line 61 of file perfctr-x86.c.
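`P4_FPU` expands to a space-separated list of names (`X87`, `SSE_DP`) that begins with a space. How perfctr-x86.c consumes the string is not shown on this page; the sketch below, built around a hypothetical `split_mask_names()` helper, only illustrates reading such a list one name at a time.

```c
#include <string.h>

#define P4_FPU " X87 SSE_DP"

/* Hypothetical helper: split a space-separated list of names such as
 * P4_FPU into `names` (at most `max` entries, each truncated to 15
 * characters). Returns the number of names stored. */
static int split_mask_names(const char *list, char names[][16], int max)
{
    char buf[64];
    int n = 0;

    /* strtok() writes into its argument, so tokenize a bounded copy. */
    strncpy(buf, list, sizeof buf - 1);
    buf[sizeof buf - 1] = '\0';

    /* strtok() skips leading delimiters, so the leading space is harmless. */
    for (char *tok = strtok(buf, " "); tok != NULL && n < max;
         tok = strtok(NULL, " ")) {
        strncpy(names[n], tok, 15);
        names[n][15] = '\0';
        n++;
    }
    return n;
}
```

Calling `split_mask_names(P4_FPU, names, 4)` yields two entries, `X87` and `SSE_DP`. The leading space would also let the macro be appended to another literal through C's adjacent string-literal concatenation (`"BASE" P4_FPU` becomes `"BASE X87 SSE_DP"`, where `BASE` is a placeholder); whether the source relies on this is an assumption.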

◆ P4_REPLAY_REAL_MASK

`#define P4_REPLAY_REAL_MASK 0x00000003`

Definition at line 903 of file perfctr-x86.c.
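`P4_REPLAY_REAL_MASK` (`0x00000003`) selects bits 0 and 1. This page does not say which register value the mask is applied to, so the hypothetical `replay_real_bits()` below only shows the masking arithmetic.

```c
#include <stdint.h>

#define P4_REPLAY_REAL_MASK 0x00000003

/* Hypothetical helper: keep bits 0 and 1 of `value` (the bits selected
 * by P4_REPLAY_REAL_MASK) and clear every higher bit. */
static uint32_t replay_real_bits(uint32_t value)
{
    return value & P4_REPLAY_REAL_MASK;
}
```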

◆ P4_VEC

`#define P4_VEC "SSE"`

Definition at line 51 of file perfctr-x86.c.

Function Documentation

◆ _bpt_map_avail()

`static`

Definition at line 266 of file perfctr-x86.c.

◆ _bpt_map_exclusive()

`static`

Definition at line 295 of file perfctr-x86.c.

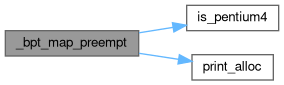

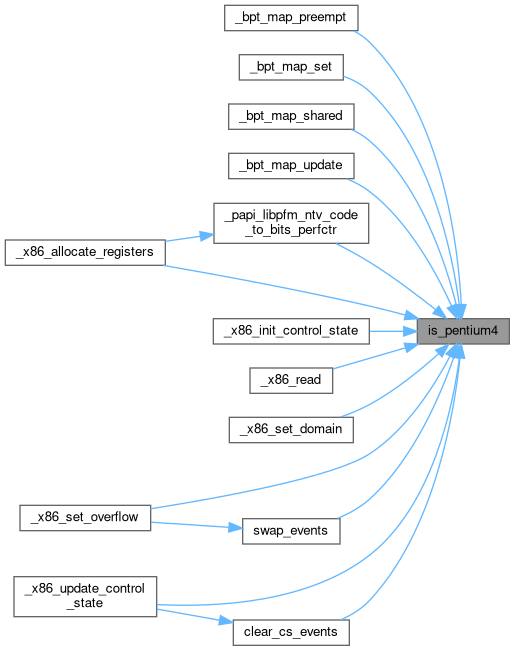

◆ _bpt_map_preempt()

`static`

Definition at line 343 of file perfctr-x86.c.

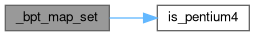

◆ _bpt_map_set()

`static`

Definition at line 275 of file perfctr-x86.c.

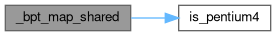

◆ _bpt_map_shared()

`static`

Definition at line 305 of file perfctr-x86.c.

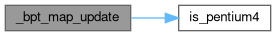

◆ _bpt_map_update()

`static`

Definition at line 406 of file perfctr-x86.c.
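The `_bpt_map_*` names suggest callbacks for a bipartite event-to-counter assignment, presumably used by `_x86_allocate_registers()`; their signatures did not survive on this page. The sketch below assumes each event carries a bitmask of the counters it may use and models avail, set, exclusive, shared and preempt on a hypothetical `struct alloc_event` (update is omitted). The driver `allocate()` is a simple greedy heuristic (pin single-choice events first, then take the lowest free counter), not an exact bipartite matching, so it can reject some event sets that a full matcher would place.

```c
#include <stdint.h>

/* Hypothetical event record: `selector` holds one bit per counter the
 * event may still use; `assigned` is the chosen counter, or -1. */
struct alloc_event {
    uint32_t selector;
    int assigned;
};

/* avail: may event `e` use counter `ctr`? */
static int map_avail(const struct alloc_event *e, int ctr)
{
    return (e->selector & (1u << ctr)) != 0;
}

/* set: pin event `e` to counter `ctr`. */
static void map_set(struct alloc_event *e, int ctr)
{
    e->selector = 1u << ctr;
    e->assigned = ctr;
}

/* exclusive: does `e` have exactly one candidate counter left? */
static int map_exclusive(const struct alloc_event *e)
{
    uint32_t s = e->selector;
    return s != 0 && (s & (s - 1)) == 0;
}

/* shared: do `a` and `b` compete for at least one counter? */
static int map_shared(const struct alloc_event *a, const struct alloc_event *b)
{
    return (a->selector & b->selector) != 0;
}

/* preempt: remove from `dst` every counter that `src` holds. */
static void map_preempt(struct alloc_event *dst, const struct alloc_event *src)
{
    dst->selector &= ~src->selector;
}

/* Pin ev[i] to its lowest available counter and take that counter away
 * from every other event. Returns 0 if ev[i] has no usable counter. */
static int pin_lowest(struct alloc_event *ev, int n, int i, int ncounters)
{
    int c = 0;
    while (c < ncounters && !map_avail(&ev[i], c))
        c++;
    if (c == ncounters)
        return 0;
    map_set(&ev[i], c);
    for (int j = 0; j < n; j++)
        if (j != i && map_shared(&ev[j], &ev[i]))
            map_preempt(&ev[j], &ev[i]);
    return 1;
}

/* Greedy heuristic: repeatedly pin events that have a single choice (each
 * pin can leave other events with a single choice), then give every
 * remaining event its lowest free counter. Requires ncounters <= 32.
 * Returns 1 if every event received a counter, 0 otherwise. */
static int allocate(struct alloc_event *ev, int n, int ncounters)
{
    int progress = 1;

    for (int i = 0; i < n; i++)
        ev[i].assigned = -1;

    while (progress) {
        progress = 0;
        for (int i = 0; i < n; i++) {
            if (ev[i].assigned >= 0 || !map_exclusive(&ev[i]))
                continue;
            if (!pin_lowest(ev, n, i, ncounters))
                return 0;
            progress = 1;
        }
    }

    for (int i = 0; i < n; i++)
        if (ev[i].assigned < 0 && !pin_lowest(ev, n, i, ncounters))
            return 0;
    return 1;
}
```

For example, with one event allowed on counters 0 or 1 and another allowed only on counter 0, the single-choice event is pinned to counter 0 first, which leaves counter 1 for the other.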

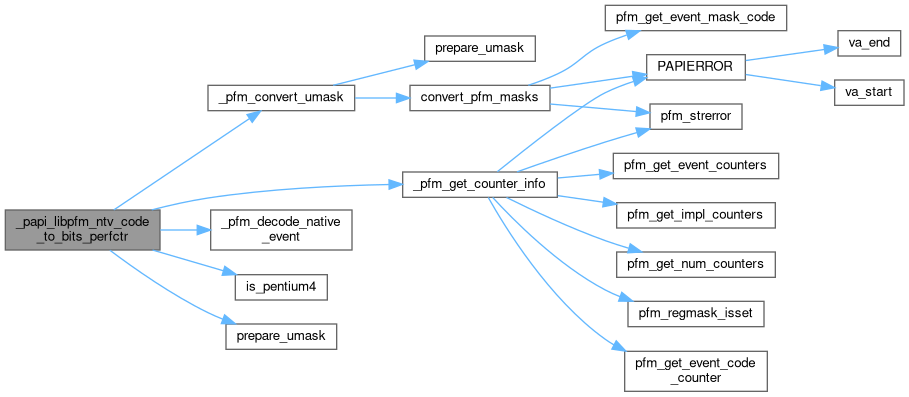

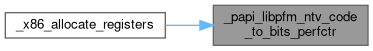

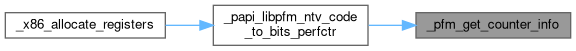

◆ _papi_libpfm_ntv_code_to_bits_perfctr()

`int _papi_libpfm_ntv_code_to_bits_perfctr(unsigned int EventCode, hwd_register_t *newbits)`

Definition at line 1015 of file perfctr-x86.c.

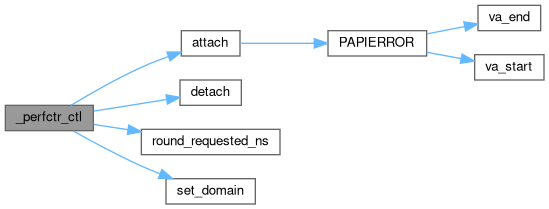

◆ _perfctr_ctl()

`int _perfctr_ctl(hwd_context_t *ctx, int code, _papi_int_option_t *option)`

Definition at line 295 of file perfctr.c.

◆ _perfctr_dispatch_timer()

`void _perfctr_dispatch_timer(int signal, hwd_siginfo_t *si, void *context)`

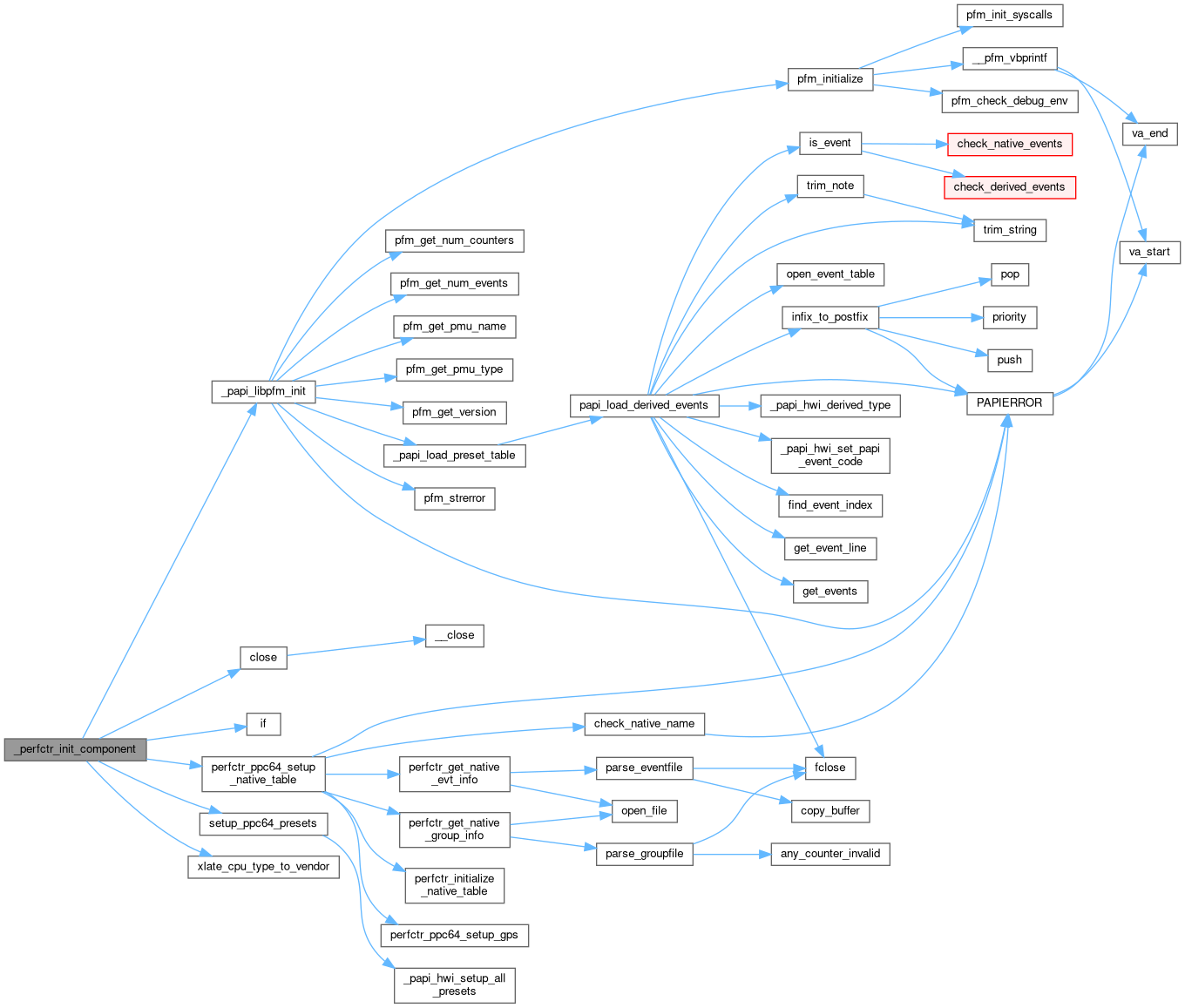

◆ _perfctr_init_component()

Definition at line 107 of file perfctr.c.

◆ _perfctr_init_thread()

`int _perfctr_init_thread(hwd_context_t *ctx)`

Definition at line 386 of file perfctr.c.

◆ _perfctr_shutdown_thread()

`int _perfctr_shutdown_thread(hwd_context_t *ctx)`

Definition at line 434 of file perfctr.c.

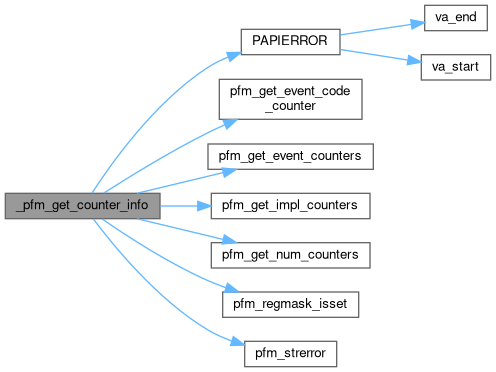

◆ _pfm_get_counter_info()

Definition at line 970 of file perfctr-x86.c.

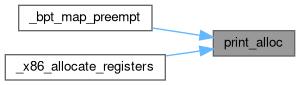

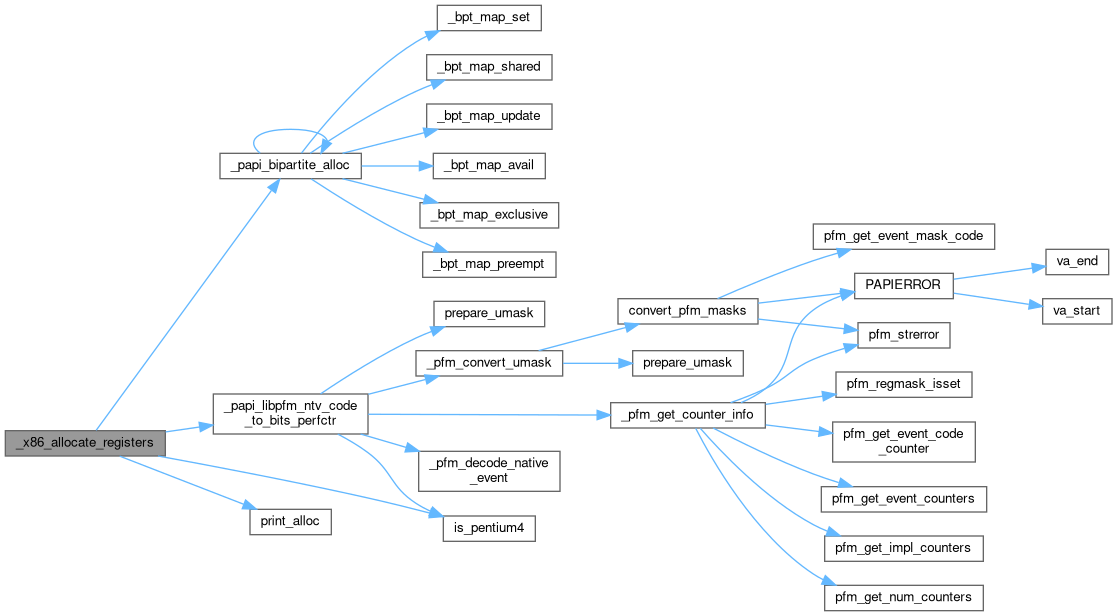

◆ _x86_allocate_registers()

`static`

Definition at line 418 of file perfctr-x86.c.

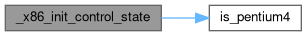

◆ _x86_init_control_state()

`static`

Definition at line 119 of file perfctr-x86.c.

◆ _x86_read()

`static`

Definition at line 701 of file perfctr-x86.c.

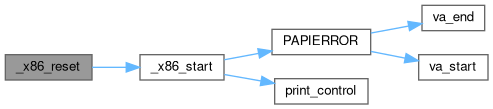

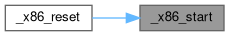

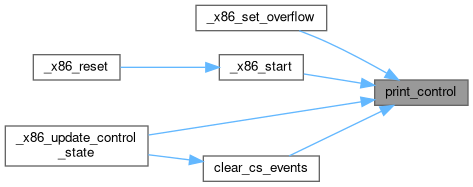

◆ _x86_reset()

`static`

Definition at line 750 of file perfctr-x86.c.

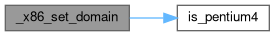

◆ _x86_set_domain()

`int _x86_set_domain(hwd_control_state_t *cntrl, int domain)`

Definition at line 210 of file perfctr-x86.c.
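`_x86_set_domain()` takes a PAPI domain mask. On P6-family and architectural performance counters, the counting domain is selected by the USR (bit 16) and OS (bit 17) flags of each `IA32_PERFEVTSELx` register, as documented in the Intel SDM. A minimal sketch, assuming `DOM_USER` and `DOM_KERNEL` are local stand-ins for `PAPI_DOM_USER` and `PAPI_DOM_KERNEL` and that `apply_domain()` is a hypothetical helper; the Pentium 4 keeps its equivalent flags in the ESCRs at different bit positions, so this sketch does not cover it.

```c
#include <stdint.h>

/* Local stand-ins for PAPI_DOM_USER and PAPI_DOM_KERNEL (assumed values). */
#define DOM_USER   0x1
#define DOM_KERNEL 0x2

/* IA32_PERFEVTSELx flag bits, per the Intel SDM. */
#define EVNTSEL_USR (1u << 16) /* count while CPL > 0 (user mode)    */
#define EVNTSEL_OS  (1u << 17) /* count while CPL == 0 (kernel mode) */

/* Hypothetical helper: return `evntsel` with its USR/OS flags replaced
 * according to the domain mask, leaving every other field untouched. */
static uint32_t apply_domain(uint32_t evntsel, int domain)
{
    evntsel &= ~(EVNTSEL_USR | EVNTSEL_OS);
    if (domain & DOM_USER)
        evntsel |= EVNTSEL_USR;
    if (domain & DOM_KERNEL)
        evntsel |= EVNTSEL_OS;
    return evntsel;
}
```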

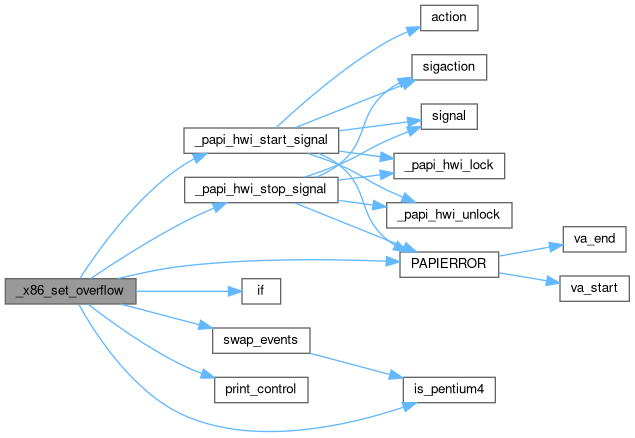

◆ _x86_set_overflow()

`static`

Definition at line 805 of file perfctr-x86.c.
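A common way to set up counter-overflow sampling on x86 is to preload the counter with the negated threshold, truncated to the counter width, so that it wraps and raises an interrupt after exactly `threshold` events. Whether `_x86_set_overflow()` computes its value this way is an assumption; the hypothetical `overflow_preload()` below only shows the arithmetic, 2^width - threshold.

```c
#include <stdint.h>

/* Hypothetical helper: value to preload into a `width`-bit up-counter
 * (1 <= width <= 64) so that it wraps to zero after exactly `threshold`
 * increments, i.e. 2^width - threshold for 1 <= threshold <= 2^width.
 * Computed as the two's-complement negation truncated to `width` bits. */
static uint64_t overflow_preload(unsigned width, uint64_t threshold)
{
    uint64_t mask = (width >= 64) ? UINT64_MAX
                                  : ((UINT64_C(1) << width) - 1);
    return (UINT64_C(0) - threshold) & mask;
}
```

For a 40-bit counter and a threshold of 1000, the preload is 2^40 - 1000; after 1000 increments the counter reaches 2^40, which wraps to zero.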

◆ _x86_start()

`static`

Definition at line 653 of file perfctr-x86.c.

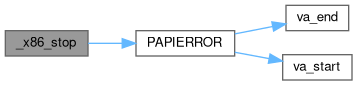

◆ _x86_stop()

`static`

Definition at line 679 of file perfctr-x86.c.

◆ _x86_stop_profiling()

`static`

Definition at line 890 of file perfctr-x86.c.

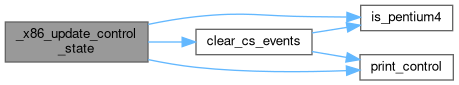

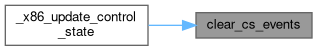

◆ _x86_update_control_state()

`static`

Definition at line 550 of file perfctr-x86.c.

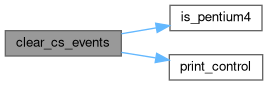

◆ clear_cs_events()

`static`

Definition at line 504 of file perfctr-x86.c.

◆ is_pentium4()

`inline` `static`

Definition at line 75 of file perfctr-x86.c.
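The body of `is_pentium4()` is not shown here. Pentium 4 (NetBurst) processors report the CPUID vendor string `GenuineIntel` and family 15; a sketch of such a test, using a hypothetical `looks_like_pentium4()` that receives the vendor and family rather than executing CPUID itself:

```c
#include <string.h>

/* Hypothetical test: Pentium 4 (NetBurst) parts report the CPUID vendor
 * string "GenuineIntel" and family 15 (0xF). The vendor check is needed
 * because AMD K8 processors also report family 15. */
static inline int looks_like_pentium4(const char *vendor, int family)
{
    return strcmp(vendor, "GenuineIntel") == 0 && family == 15;
}
```

Checking the family alone would misclassify AMD Athlon 64 and Opteron (K8) processors, which also report family 15.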

◆ print_alloc()

`static`

◆ print_control()

`void print_control(const struct perfctr_cpu_control *control)`

Definition at line 96 of file perfctr-x86.c.

◆ swap_events()

`static`

Definition at line 762 of file perfctr-x86.c.

Variable Documentation

◆ _papi_hwi_system_info

`extern`

Definition at line 56 of file papi_internal.c.

◆ _perfctr_vector

`papi_vector_t _perfctr_vector`

Definition at line 1163 of file perfctr-x86.c.

◆ p4_replay_regs

`static`

Definition at line 910 of file perfctr-x86.c.

◆ pentium4_cccrs

`extern`

Array of the counter configuration control registers (CCCRs) available on the Pentium 4.

Definition at line 242 of file pentium4_events.h.

◆ pentium4_escrs

`extern`

Array of the event selection control registers (ESCRs) available on the Pentium 4.

Definition at line 51 of file pentium4_events.h.
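On the Pentium 4, an event is programmed through an ESCR that selects the event and a CCCR that enables the counter and names which ESCR feeds it. The sketch below encodes fields documented in the Intel SDM: ESCR event select in bits 30:25, event mask in bits 24:9, OS in bit 3 and USR in bit 2 (thread 0); CCCR enable in bit 12, ESCR select in bits 15:13, and bits 17:16 set to 11b as the SDM requires on non-Hyper-Threading parts. The helpers `p4_escr()` and `p4_cccr()` are hypothetical and are not taken from `pentium4_escrs[]` or `pentium4_cccrs[]`.

```c
#include <stdint.h>

/* Hypothetical encoder for the thread-0 fields of a Pentium 4 ESCR. */
static uint32_t p4_escr(unsigned event_select, unsigned event_mask,
                        int count_os, int count_usr)
{
    uint32_t v = 0;
    v |= (uint32_t)(event_select & 0x3Fu) << 25; /* bits 30:25 event select */
    v |= (uint32_t)(event_mask & 0xFFFFu) << 9;  /* bits 24:9  event mask   */
    if (count_os)
        v |= 1u << 3;                            /* OS: count at CPL 0      */
    if (count_usr)
        v |= 1u << 2;                            /* USR: count at CPL > 0   */
    return v;
}

/* Hypothetical encoder for a Pentium 4 CCCR that enables its counter and
 * routes the ESCR numbered `escr_select` to it. */
static uint32_t p4_cccr(unsigned escr_select)
{
    uint32_t v = 0;
    v |= 1u << 12;                               /* Enable                  */
    v |= (uint32_t)(escr_select & 0x7u) << 13;   /* bits 15:13 ESCR select  */
    v |= 3u << 16;                               /* bits 17:16 set to 11b   */
    return v;
}
```

The event numbers passed to these helpers would come from per-event tables such as `pentium4_events[]`; any values used to exercise the sketch are arbitrary, not real event codes.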

◆ pentium4_events

`extern`

Array of the events that can be counted on the Pentium 4.

Definition at line 607 of file pentium4_events.h.

◆ pfm2intel

`static`

Definition at line 958 of file perfctr-x86.c.