{
#define HAS_OPTIONS(x) (cntrs && (cntrs[x].flags || cntrs[x].cnt_mask))
#define is_fixed_pmc(a) (a == 16 || a == 17 || a == 18)
#define is_uncore(a) (a > 19)

	uint64_t val, unc_global_ctrl;
	uint64_t pebs_mask, ld_mask;
	unsigned long long fixed_ctr;
	unsigned int plm;
	unsigned int npc, npmc0, npmc01, nf2, nuf;
	unsigned int i, n, k, j, umask, use_pebs = 0;

	unsigned int next_gen, last_gen, u_flags;
	unsigned int next_unc_gen, last_unc_gen, lat;
	unsigned int offcore_rsp0_value = 0;
	unsigned int offcore_rsp1_value = 0;

	npc = npmc01 = npmc0 = nf2 = nuf = 0;
	unc_global_ctrl = 0;

	pebs_mask = ld_mask = 0;

	for (i = 0; i < n; i++) {

			DPRINT("MISPREDICTED_BRANCH_RETIRED broken on this Nehalem processor, see erratum AAJ80\n");

		}

			DPRINT("event does not support unit mask combination\n");

		}

			if (++nuf > 1) {
				DPRINT("two events compete for a UNCORE_FIXED_CTR0\n");

			}

				DPRINT("uncore fixed counter does not support options\n");

			}
		}

			if (++npmc0 > 1) {
				DPRINT("two events compete for a PMC0\n");

			}
		}

			if (++npmc01 > 2) {
				DPRINT("more than two events compete for PMC0/PMC1\n");

			}
		}

			if (++nf2 > 1) {
				DPRINT("two events compete for FIXED_CTR2\n");

			}

				DPRINT("UNHALTED_REFERENCE_CYCLES only accepts anythr filter\n");

			}
		}

		umask = 0;

		}

			if (offcore_rsp0_value && offcore_rsp0_value != umask) {
				DPRINT("all OFFCORE_RSP0 events must have the same unit mask\n");

			}

				DPRINT("OFFCORE_RSP0 register not available\n");

			}
			if (!((umask & 0xff) && (umask & 0xff00))) {
				DPRINT("OFFCORE_RSP0 must have at least one request and response unit mask set\n");

			}

			offcore_rsp0_value = umask;
		}

			if (offcore_rsp1_value && offcore_rsp1_value != umask) {
				DPRINT("all OFFCORE_RSP1 events must have the same unit mask\n");

			}

				DPRINT("OFFCORE_RSP1 register not available\n");

			}
			if (!((umask & 0xff) && (umask & 0xff00))) {
				DPRINT("OFFCORE_RSP1 must have at least one request and response unit mask set\n");

			}

			offcore_rsp1_value = umask;
		}

				DPRINT("uncore events must have PLM0|PLM3\n");

			}
		}
	}

	next_gen = 0;
	last_gen = 3;

	if (nuf || npmc0) {
		for (i = 0; i < n; i++) {

				next_gen = 1;
			}

			}
		}
	}

	if (npmc01) {
		for (i = 0; i < n; i++) {

					next_gen++;
				if (next_gen == 2)

				assign_pc[i] = next_gen++;
			}
		}
	}

	if (fixed_ctr) {
		for (i = 0; i < n; i++) {

					continue;

					continue;
			}

				fixed_ctr &= ~1;
			}

				fixed_ctr &= ~2;
			}

				fixed_ctr &= ~4;
			}
		}
	}

	next_unc_gen = 21;
	last_unc_gen = 28;
	for (i = 0; i < n; i++) {

			for (; next_unc_gen <= last_unc_gen; next_unc_gen++) {

					break;
			}
			if (next_unc_gen <= last_unc_gen)
				assign_pc[i] = next_unc_gen++;
			else {
				DPRINT("cannot assign generic uncore event\n");

			}
		}
	}

	for (i = 0; i < n; i++) {
		if (assign_pc[i] == -1) {
			for (; next_gen <= last_gen; next_gen++) {

					break;
			}
			if (next_gen <= last_gen) {
				assign_pc[i] = next_gen++;
			} else {
				DPRINT("cannot assign generic event\n");

			}
		}
	}

	for (i = 0; i < n; i++) {

			continue;
		val = 0;

			val |= 1ULL;

			val |= 2ULL;

			val |= 4ULL;
		val |= 1ULL << 3;

		reg.val |= val << ((assign_pc[i]-16)<<2);
	}

		__pfm_vbprintf("[FIXED_CTRL(pmc%u)=0x%" PRIx64
				" pmi0=1 en0=0x%" PRIx64
				" any0=%d pmi1=1 en1=0x%" PRIx64
				" any1=%d pmi2=1 en2=0x%" PRIx64
				" any2=%d] ",
				pc[npc].reg_num,

				!!(reg.val & 0x4ULL),
				(reg.val>>4) & 0x3ULL,
				!!((reg.val>>4) & 0x4ULL),
				(reg.val>>8) & 0x3ULL,
				!!((reg.val>>8) & 0x4ULL));

		if ((fixed_ctr & 0x1) == 0)

		if ((fixed_ctr & 0x2) == 0)

		if ((fixed_ctr & 0x4) == 0)

		npc++;

		if ((fixed_ctr & 0x1) == 0)

		if ((fixed_ctr & 0x2) == 0)

		if ((fixed_ctr & 0x4) == 0)

	}

	for (i = 0; i < n; i++) {

			continue;

		umask = (val >> 8) & 0xff;

		u_flags = 0;

		}
		val |= umask << 8;

		if (cntrs) {

			if (cntrs[i].cnt_mask > 255)

			}

		}

			pebs_mask |= 1ULL << assign_pc[i];

		if (reg.sel_event == 0xb && (umask & 0x10))
			ld_mask |= 1ULL << assign_pc[i];

		__pfm_vbprintf("[PERFEVTSEL%u(pmc%u)=0x%" PRIx64
				" event_sel=0x%x umask=0x%x os=%d usr=%d anythr=%d en=%d int=%d inv=%d edge=%d cnt_mask=%d] %s\n",
				pc[npc].reg_num,
				pc[npc].reg_num,

				pc[npc].reg_num,
				pc[npc].reg_num);

		npc++;
	}

	if (nuf) {

		__pfm_vbprintf("[UNC_FIXED_CTRL(pmc20)=0x%" PRIx64
				" pmi=1 ena=1] UNC_CLK_UNHALTED\n", pc[npc].reg_value);

		unc_global_ctrl |= 1ULL << 32;
		npc++;
	}

	for (i = 0; i < n; i++) {

		if (!is_uncore(assign_pc[i]) || assign_pc[i] == 20)
			continue;

		umask = (val >> 8) & 0xff;

		}

		val |= umask << 8;

		if (cntrs) {

			if (cntrs[i].cnt_mask > 255)

			}

		}

		unc_global_ctrl |= 1ULL << (assign_pc[i] - 21);

		__pfm_vbprintf("[UNC_PERFEVTSEL%u(pmc%u)=0x%" PRIx64
				" event=0x%x umask=0x%x en=%d int=%d inv=%d edge=%d occ=%d cnt_msk=%d] %s\n",
				pc[npc].reg_num - 21,
				pc[npc].reg_num,

				pc[npc].reg_num - 21,
				pc[npc].reg_num);
		npc++;
	}

	for (i = 0; i < n; i++) {
		switch (assign_pc[i]) {
		case 0 ... 4:

			break;
		case 16 ... 18:

			break;
		case 20:

			break;
		case 21 ... 28:

			break;
		}
	}

	if (use_pebs && pebs_mask) {
		if (!lat)
			ld_mask = 0;

		pc[npc].reg_value = pebs_mask | (ld_mask << 32);

		__pfm_vbprintf("[PEBS_ENABLE(pmc%u)=0x%" PRIx64
				" ena0=%d ena1=%d ena2=%d ena3=%d ll0=%d ll1=%d ll2=%d ll3=%d]\n",
				pc[npc].reg_num,
				pc[npc].reg_value,
				pc[npc].reg_value & 0x1,
				(pc[npc].reg_value >> 1) & 0x1,
				(pc[npc].reg_value >> 2) & 0x1,
				(pc[npc].reg_value >> 3) & 0x1,
				(pc[npc].reg_value >> 32) & 0x1,
				(pc[npc].reg_value >> 33) & 0x1,
				(pc[npc].reg_value >> 34) & 0x1,
				(pc[npc].reg_value >> 35) & 0x1);

		npc++;

		if (ld_mask) {
			if (lat < 3 || lat > 0xffff) {
				DPRINT("invalid load latency threshold %u (must be in [3:0xffff])\n", lat);

			}

					pc[npc].reg_num,
					pc[npc].reg_value);

			npc++;
		}
	}

	if (offcore_rsp0_value) {

				pc[npc].reg_num,
				pc[npc].reg_value);
		npc++;
	}

	if (offcore_rsp1_value) {

				pc[npc].reg_num,
				pc[npc].reg_value);
		npc++;
	}

}
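The fixed-counter pass in the listing builds one 4-bit field per counter and shifts it into place with `val << ((assign_pc[i]-16)<<2)`. The sketch below isolates that packing so it can be tried on its own. The helper name `fixed_ctrl_field` and its flag parameters are hypothetical and not part of the library; the bit meanings (bit 0 kernel-level enable, bit 1 user-level enable, bit 2 any-thread, bit 3 PMI) are an assumption read off the `en`, `any` and `pmi` labels in the FIXED_CTRL trace line.

```c
#include <assert.h>
#include <stdint.h>

#define FIXED_CTR_FIRST 16u /* first fixed counter index, as in is_fixed_pmc() */
#define FIXED_CTR_LAST  18u /* last fixed counter index */

/*
 * Hypothetical helper: build the 4-bit control field for one fixed
 * counter and shift it to that counter's position in the shared
 * control register. The PMI bit is always set, as in the listing.
 */
static uint64_t fixed_ctrl_field(unsigned int pmc, int os, int usr, int anythr)
{
	uint64_t val = 0;

	assert(pmc >= FIXED_CTR_FIRST && pmc <= FIXED_CTR_LAST);

	if (os)
		val |= 1ULL;      /* assumed: count at kernel level */
	if (usr)
		val |= 2ULL;      /* assumed: count at user level */
	if (anythr)
		val |= 4ULL;      /* assumed: any-thread filter */
	val |= 1ULL << 3;         /* PMI on overflow */

	/* each counter owns 4 bits: shift by (pmc - 16) * 4 */
	return val << ((pmc - FIXED_CTR_FIRST) << 2);
}
```

OR-ing the fields of several counters together gives the single register value that the listing accumulates in `reg.val`; for example, `fixed_ctrl_field(16, 1, 1, 0) | fixed_ctrl_field(17, 0, 1, 0)` sets both the first and second nibble.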

#define PFMLIB_ERR_TOOMANY

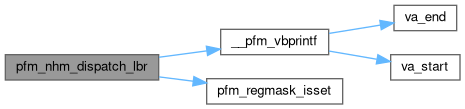

static int pfm_regmask_isset(pfmlib_regmask_t *h, unsigned int b)

#define PFMLIB_ERR_NOASSIGN

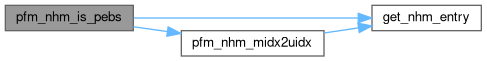

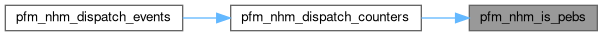

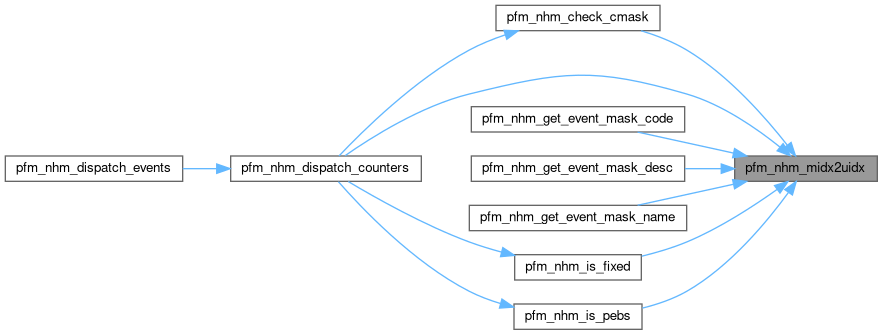

int pfm_nhm_is_pebs(pfmlib_event_t *e)

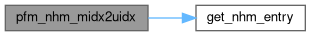

static pme_nhm_entry_t * get_nhm_entry(unsigned int i)

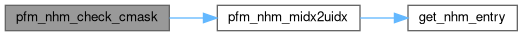

static int pfm_nhm_check_cmask(pfmlib_event_t *e, pme_nhm_entry_t *ne, pfmlib_nhm_counter_t *cntr)

static int pfm_nhm_is_fixed(pfmlib_event_t *e, unsigned int f)

#define UNC_NHM_FIXED_CTR_BASE

#define PFMLIB_NHM_ALL_FLAGS

#define NHM_FIXED_CTR_BASE

#define PFM_NHM_SEL_ANYTHR

#define PFM_NHM_SEL_OCC_RST

#define PMU_NHM_NUM_COUNTERS

#define PFMLIB_NHM_FIXED2_ONLY

#define PFMLIB_NHM_UNC_FIXED

#define PFMLIB_NHM_OFFCORE_RSP0

#define PFMLIB_NHM_OFFCORE_RSP1

#define PFMLIB_NHM_UMASK_NCOMBO

void __pfm_vbprintf(const char *fmt,...)

#define DPRINT(fmt, a...)

unsigned int ld_lat_thres

pfmlib_reg_t pfp_pmds[PFMLIB_MAX_PMDS]

pfmlib_reg_t pfp_pmcs[PFMLIB_MAX_PMCS]

unsigned int pfp_pmc_count

unsigned int pfp_pmd_count

unsigned long long reg_value

unsigned long reg_alt_addr

unsigned long long reg_addr

unsigned long sel_cnt_mask

unsigned long usel_cnt_mask
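
The PEBS step of the function combines two per-counter bitmasks into one register value, `pebs_mask | (ld_mask << 32)`, clears the load-latency mask when no threshold is given, and rejects thresholds outside `[3:0xffff]`. A minimal sketch of just that arithmetic follows; the function names `pebs_enable_value` and `ld_lat_valid` are hypothetical and chosen for illustration, not taken from the library.

```c
#include <stdint.h>

/*
 * Hypothetical helper: compose the PEBS enable value.
 * Low bits carry the per-counter PEBS enables, bits 32 and up carry
 * the per-counter load-latency enables. With no latency threshold
 * (lat == 0) the load-latency bits are dropped, as in the listing.
 */
static uint64_t pebs_enable_value(uint64_t pebs_mask, uint64_t ld_mask,
				  unsigned int lat)
{
	if (!lat)
		ld_mask = 0;
	return pebs_mask | (ld_mask << 32);
}

/*
 * Hypothetical helper: range check applied to the load-latency
 * threshold before it is programmed. Returns 1 when valid.
 */
static int ld_lat_valid(unsigned int lat)
{
	return lat >= 3 && lat <= 0xffff;
}
```

With counters 0 and 2 using PEBS and counter 0 also sampling load latency, `pebs_enable_value(0x5, 0x1, 4)` sets bits 0, 2 and 32, which corresponds to `ena0`, `ena2` and `ll0` in the PEBS_ENABLE trace line.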