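{
    SUBDBG("ENTER: ctx: %p, ctl: %p, events: %p, flags: %#x\n",
           ctx, ctl, events, flags);

    (void) flags;   /* unused */
    (void) ctx;     /* unused */

    /* ... (local declarations elided in this listing; i, ret, pe_ctl and
       papi_pe_buffer are used below) ... */
    long long tot_time_running, tot_time_enabled, scale;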

    /* ... (branch condition and per-event loop header elided) ... */

            ret = read( /* fd elided */, papi_pe_buffer,
                        sizeof ( papi_pe_buffer ) );
            if ( ret == -1 ) {
                /* ... */
                SUBDBG("EXIT: PAPI_ESYS\n");
                return PAPI_ESYS;
            }

            /* expect three 64-bit words: value, time enabled, time running */
            if (ret < (signed)(3 * sizeof(long long))) {
                /* ... */
                SUBDBG("EXIT: PAPI_ESYS\n");
                return PAPI_ESYS;
            }

            SUBDBG("read: fd: %2d, tid: %ld, cpu: %d, ret: %d\n",
                   /* ...arguments elided... */);
            SUBDBG("read: %lld %lld %lld\n", papi_pe_buffer[0],
                   papi_pe_buffer[1], papi_pe_buffer[2]);

            tot_time_enabled = papi_pe_buffer[1];
            tot_time_running = papi_pe_buffer[2];

            SUBDBG("count[%d] = (papi_pe_buffer[%d] %lld * "
                   "tot_time_enabled %lld) / tot_time_running %lld\n",
                   i, 0, papi_pe_buffer[0],
                   tot_time_enabled, tot_time_running);

            if (tot_time_running == tot_time_enabled) {
                /* the counter ran the whole time: no scaling needed */
                pe_ctl->counts[i] = papi_pe_buffer[0];
            } else if (tot_time_running && tot_time_enabled) {
                /* the counter was multiplexed: estimate the full count as
                   value * enabled / running; the ratio is computed in
                   hundredths so integer division keeps two decimal digits */
                scale = (tot_time_enabled * 100LL) / tot_time_running;
                scale = scale * papi_pe_buffer[0];
                scale = scale / 100LL;
                pe_ctl->counts[i] = scale;
            } else {
                /* zero enabled or running time: fall back to the raw count */
                SUBDBG("perf_event kernel bug(?) count, enabled, "
                       "running: %lld, %lld, %lld\n",
                       papi_pe_buffer[0], tot_time_enabled,
                       tot_time_running);

                pe_ctl->counts[i] = papi_pe_buffer[0];
            }
        }
    }

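    /* ... (header of the second branch and its per-event loop elided; here
       each counter is read on its own and yields a single 64-bit value) ... */

            ret = read( /* fd elided */, papi_pe_buffer,
                        sizeof ( papi_pe_buffer ) );
            if ( ret == -1 ) {
                /* ... */
                SUBDBG("EXIT: PAPI_ESYS\n");
                return PAPI_ESYS;
            }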

999 if (ret!=sizeof(long long)) {

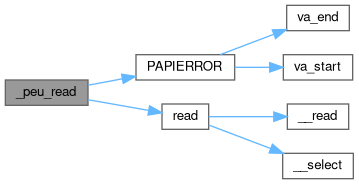

1001 PAPIERROR(

"read: fd: %2d, tid: %ld, cpu: %d, ret: %d\n",

1004 SUBDBG(

"EXIT: PAPI_ESYS\n");

1006 }

            SUBDBG("read: fd: %2d, tid: %ld, cpu: %d, ret: %d\n",
                   /* ...arguments elided... */);
            SUBDBG("read: %lld\n", papi_pe_buffer[0]);

            pe_ctl->counts[i] = papi_pe_buffer[0];
        }
    }

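    /* ... (comment lines elided; the final branch gets every count from a
       single read of the group leader) ... */
    else {
        /* ... (test that the event being read is the group leader elided) ... */
            PAPIERROR("Was expecting group leader!\n");
        }

        ret = read( /* fd elided */, papi_pe_buffer,
                    sizeof ( papi_pe_buffer ) );

        if ( ret == -1 ) {
            /* ... */
            SUBDBG("EXIT: PAPI_ESYS\n");
            return PAPI_ESYS;
        }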

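        /* a group read returns one 64-bit word holding the number of events,
           followed by one 64-bit value per event */
        if (ret < (signed)((1 + pe_ctl->num_events) * sizeof(long long))) {
            /* ... */
            SUBDBG("EXIT: PAPI_ESYS\n");
            return PAPI_ESYS;
        }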

        SUBDBG("read: fd: %2d, tid: %ld, cpu: %d, ret: %d\n",
               /* ...arguments elided... */);
        {
            int j;
            /* dump every 64-bit word returned by the group read */
            for (j = 0; j < ret / (int) sizeof(long long); j++) {
                SUBDBG("read %d: %lld\n", j, papi_pe_buffer[j]);
            }
        }

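        /* the first word must match the number of events in this set */
        if (papi_pe_buffer[0] != pe_ctl->num_events) {
            PAPIERROR("Error! Wrong number of events!\n");
            SUBDBG("EXIT: PAPI_ESYS\n");
            return PAPI_ESYS;
        }

        /* copy the per-event values that follow the count word */
        for (i = 0; i < pe_ctl->num_events; i++) {
            pe_ctl->counts[i] = papi_pe_buffer[1 + i];
        }
    }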

    /* ... (lines elided in this listing) ... */

    SUBDBG("EXIT: PAPI_OK\n");
    return PAPI_OK;
}
