News and Announcements

Employment Opportunities at ICL

ICL is seeking full-time Research Scientists (MS or PhD) to participate in the design, development, and maintenance of numerical software libraries for solving linear algebra problems on large, distributed-memory machines with multi-core processors, hardware accelerators, and performance monitoring capabilities for new and advanced hardware and software technologies.

The prospective researcher will coauthor papers to document research findings, present the team’s work at conferences and workshops, and help lead students and other team members in their research endeavors in ongoing and future projects. Given the nature of the work, there will be opportunities for publication, travel, and high-profile professional networking and collaboration across academia, labs, and industry.

An MS or PhD in computer science, computational sciences, or math is preferred. Background in at least one of the following areas is also preferred: numerical linear algebra, HPC, performance monitoring, machine learning, or data analytics.

For more information check out ICL’s jobs page: http://www.icl.utk.edu/jobs.

Happy Birthday, Terry!

Terry Moore, ICL’s long-time Associate Director, celebrated his 70th birthday at the NSF Workshop on Smart Cyberinfrastructure in Crystal City, VA. Fortunately for Terry, Michela Taufer was also in attendance and not only remembered his birthday but also arranged a cake for the occasion.

Following his return, ICL admin arranged for Terry to celebrate his birthday again during Friday Lunch. Happy Birthday, Terry!

The Editor would like to thank Hidehiko Hasegawa and Jack Dongarra for their photo contributions.

Conference Reports

2020 ECP Annual Meeting

The 2020 Exascale Computing Project (ECP) Annual Meeting, held on February 3–7, brought us a second time to the hot and humid city of Houston, Texas. Like last year, the Royal Sonesta, located in Uptown Houston just a few feet away from Texas’s largest shopping mall, became home for more than a dozen ICLers.

After a day of industry partners presenting their plans for Exascale, the Director of DoE, Dr. Chris Fall, gave a keynote in which he expressed his excitement about the project but also indicated that people are thinking about what follows after the completion of ECP.

Other highlights included a session on “Understanding Performance with Exa-PAPI” organized by Heike Jagode and her team, a session on “Integrating PaRSEC-Enabled Libraries in Scientific Applications,” and a “Math Library Speed Dating Session.”

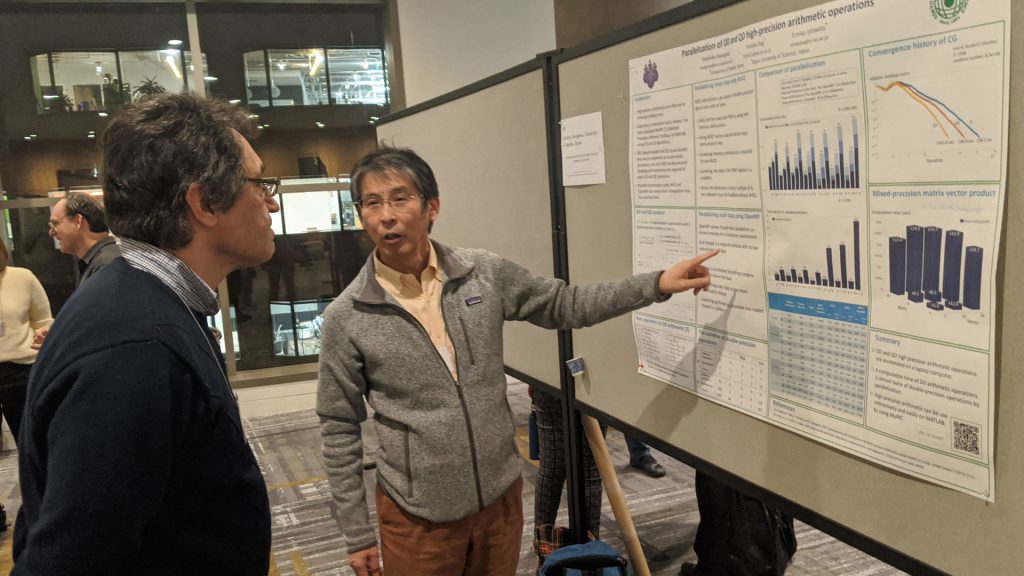

The sessions were complemented with poster presentations that were well attended and offered a nice environment for further discussion. The meeting was also a great place to catch up with ICL alum who still contribute to the success of ECP from other institutions. Familiar faces included Ichitaro Yamazaki (now working at Sandia National Labs) and Jakub Kurzak (now working for AMD).

With several years still to go in this ambitious endeavor, we anticipate seeing everyone again next year!

The Editor would like to thank Hartwig Anzt for generously providing the content above. The Editor also thanks Heike Jagode, George Bosilca, Earl Carr, and Piotr Luszczek for their photo contributions.

SIAM PP20

The 2020 SIAM Conference on Parallel Processing for Scientific Computing (SIAM PP20) was held in Seattle, Washington on February 12–15, 2020. SIAM is typically one of ICL’s most heavily attended conferences—second only to SC—and SIAM PP20 was in keeping with this tradition. There is a lot of ground to cover here, so let’s get rolling.

New workloads driven by machine learning and artificial intelligence applications—and their accelerated performance on new and emerging hardware—is pumping a lot of excitement into mixed and low-precision methods in linear algebra. As you might expect then, the “Advances in Algorithms Exploiting Low Precision Floating-Point Arithmetic” mini symposium was a fan favorite, where Ahmad Abdelfattah presented “Recent Half Precision Developments in the MAGMA Library,” to discuss new mixed-precision solvers in the MAGMA library, which are aimed at GPUs with native low-precision capabilities (e.g., NVIDIA’s Tensor Cores).

ICL alum Azzam Haidar was also in this session to discuss NVIDIA’s latest work in mixed precision. According to Azzam’s presentation, “Mixed Precision Numerical Techniques Accelerated with Tensor Cores and its Impact on Today’s Scientific Computing and Implications for Tomorrow’s Hardware Design,” NVIDIA is actively working on this problem by using their Tensor cores to accelerate common linear algebra routines at lower precisions while maintaining the accuracy of the solution.

Taking another angle on mixed precision, ICL Graduate Research Assistant Neil Lindquist looks at “Improving the Performance of GMRES with Mixed Precision.” Given the memory-bound nature of GMRES, Neil looks at storing vectors in single precision to lighten the burden on the memory and help alleviate some of this bottleneck for improved performance overall—all while maintaining the accuracy of the solution.

Moving on from the mixed-precision action, let’s talk about SLATE. Mark Gates was on hand to discuss “The Sustainability Lessons of the SLATE Project,” where he described the software engineering practices leveraged by the SLATE team to minimize the impact of rapidly changing technology and hardware and maximize SLATE’s sustainability in the long term.

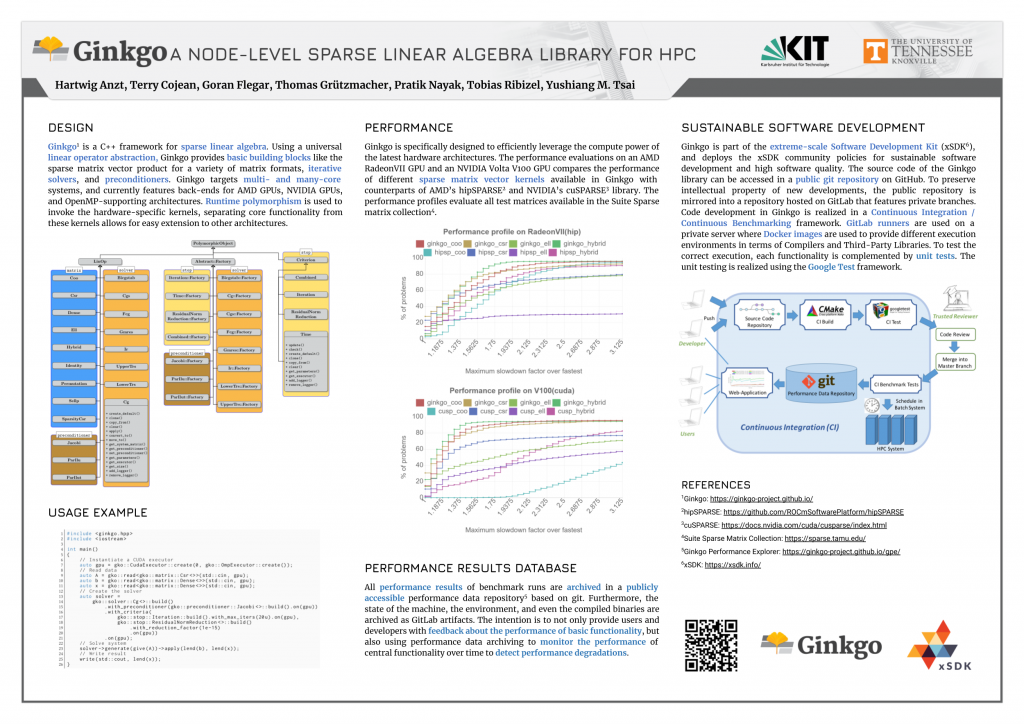

Hartwig presented his work on “Sparse Matrix Vector Product on High-End GPU Clusters,” where he compares his hybrid algorithm against vendor kernels for both AMD and NVIDIA (i.e., Ginkgo vs. HIP vs. CUDA). Hartwig also presented other developments in the Ginkgo project during the poster session.

Stan “the Man” Tomov brought out a new heFFTe poster to show off the latest developments in ICL’s ECP FFT effort. The heFFTe library (heFFTe 0.1 was released in October 2019) is the first software release from the ECP FFT project.

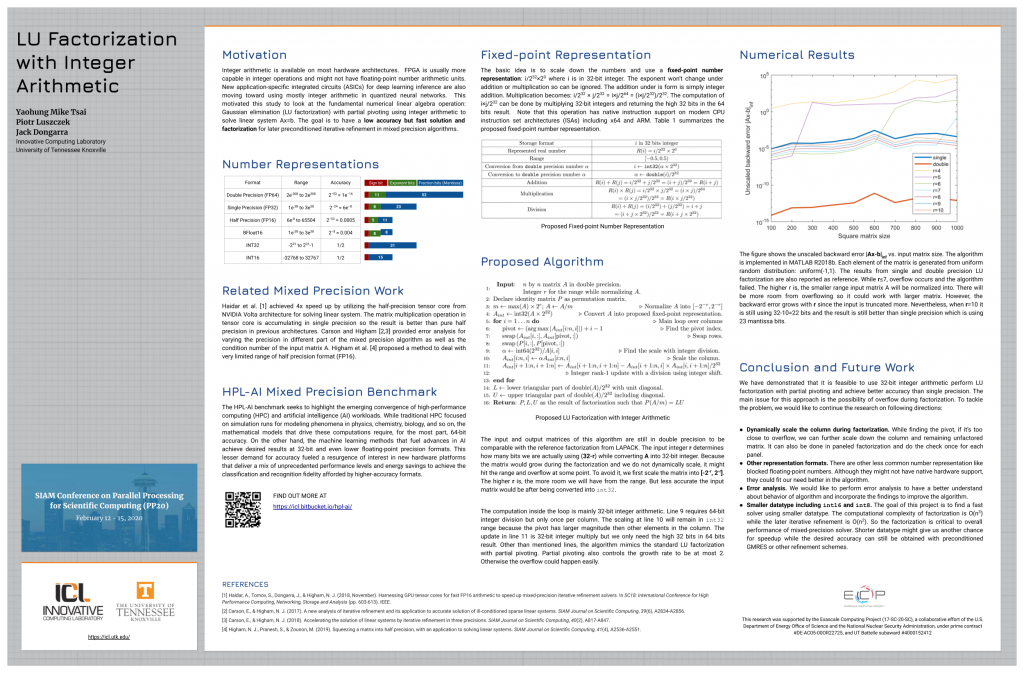

Mike Tsai also brought a poster to illustrate his work in “Using Quantized Integer in LU Factorization with Partial Pivoting,” where he showed ICL’s preliminary results for using quantization integers for LU factorization with partial pivoting.

Piotr Luszczek presented “Evaluation of Large Scale Systems with Focus on Application Performance: the Benchmarking Perspective,” where he described using results from four widely known benchmarks to inform both hardware and software development—earlier in the engineering phases—to ensure better performance and compatibility.

Moving on from all the mixed-precision and linear algebra hullabaloo, it’s time to DisCo. Yes, the Distributed Computing group was also on hand at SIAM PP20.

George Bosilca presented “New Non-Blocking Extensions to the ULFM Proposal,” with the key principle being that no MPI call (e.g., point-to-point, collective, RMA, I/O) can block indefinitely after a failure but must either succeed or raise an MPI error. ULFM and its extensions are currently under review by the MPI Forum’s Fault Tolerance Working Group.

Yu Pei presented his work on the “Evaluation of Programming Models to Address Load Imbalance on Distributed Multi-Core CPUs: A Case Study with Block Low-Rank Factorization.” Yu and his co authors used Block Low-Rank LU factorization as a test case to study the programmability and performance of five different programming approaches: (1) flat MPI, (2) Adaptive MPI, (3) MPI + OpenMP, (4) parameterized task graph, and (5) dynamic task discovery (DTD). These approaches were then analyzed on an Intel Haswell-based system to see the effectiveness of each implementation in addressing load imbalance.

Finally, Thomas Herault talked about “Novel Approaches to Optimize and Execute Task-Based, Irregular Applications on Extreme-Scale, Heterogeneous Systems using PaRSEC” as part of his group’s effort in the National Science Foundation–funded Epexa project.

Next year’s meeting will move out of the Pacific Northwest to Munich, Germany. Take pictures.

The Editor would like to thank Hidehiko Hasegawa, Mike Tsai, and Piotr Luszczek for their contributions to this article.

Interview

Yu Pei

Where are you from, originally?

I am originally from Guangzhou, a city in southern China.

Can you summarize your educational background?

I went to Sun-Yat Sen University in our province for biotechnology, at first, but then I switched and earned my BS in statistics instead. This was before the craze of machine learning and deep learning. Then I came to the United States to earn my MS in statistics at UC Davis. Through a sequence of events, I am now doing my PhD here at ICL!

Where did you work before joining ICL?

After my MS and before my PhD, I stayed at the UC Davis energy center as a junior researcher briefly, then I worked at ORNL for six months.

How did you first hear about the lab, and what made you want to work here?

I heard about the lab via my supervisor’s friend at ORNL. I have used LAPACK before, so when I saw ICL is one of the main developers of it I knew it would be a great place to pursue my PhD.

What is your focus here at ICL? What are you working on?

I am working in the DisCo group and specifically working in the PaRSEC project. I am exploring the optimization of several numerical algorithms on top of the runtime system. George also mentioned that I will have a focus on the programming language aspect, and I am excited for that!

What are your interests/hobbies outside of work?

I play some pick-up basketball at the gym, and I read all kinds of novels. I have a PS4, but it has been a while since I have had time to play.

Tell us something about yourself that might surprise people.

I was on my high school’s volleyball team, and when it was time for college, I had thought about going into athletics. Life is like a box of chocolates.

If you weren’t working at ICL, where would you like to be working and why?

Doing research is fun, so ideally some place research focused. Working in distributed computing and machine learning would be cool.