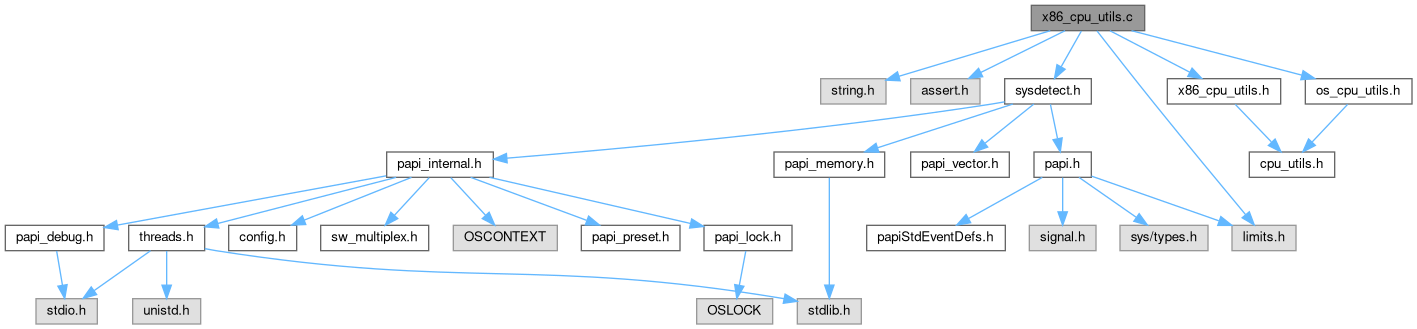

Data Structures

| Kind | Name |
|---|---|
| struct | apic_subid_mask_t |
| struct | apic_subid_t |
| struct | cpuid_reg_t |

Variables

| Type | Name |
|---|---|
| static _sysdetect_cache_level_info_t | clevel [PAPI_MAX_MEM_HIERARCHY_LEVELS] |
| static int | cpuid_has_leaf4 |
| static int | cpuid_has_leaf11 |

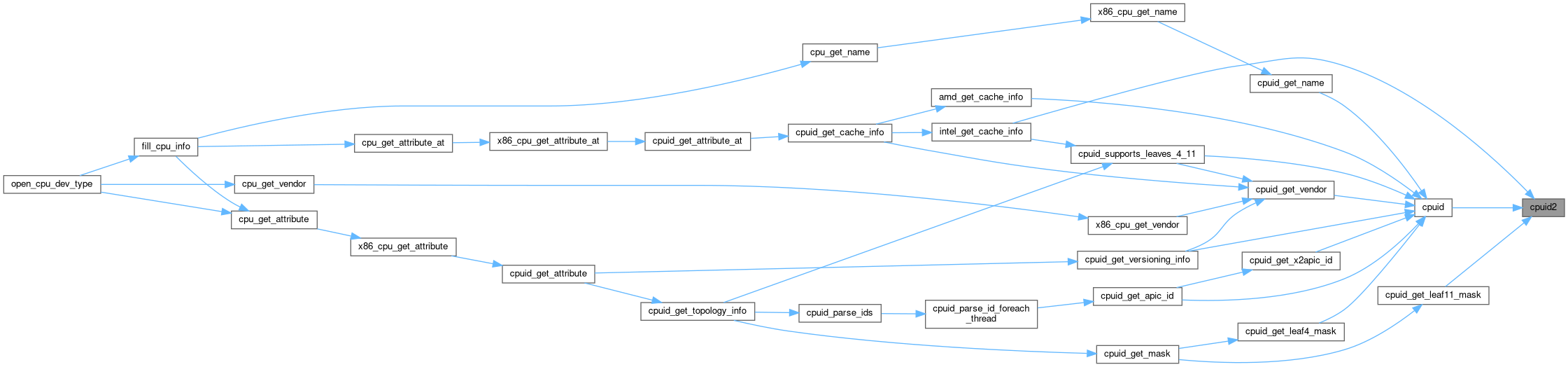

Function Documentation

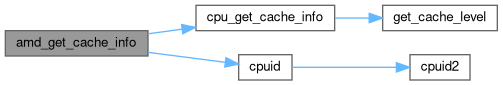

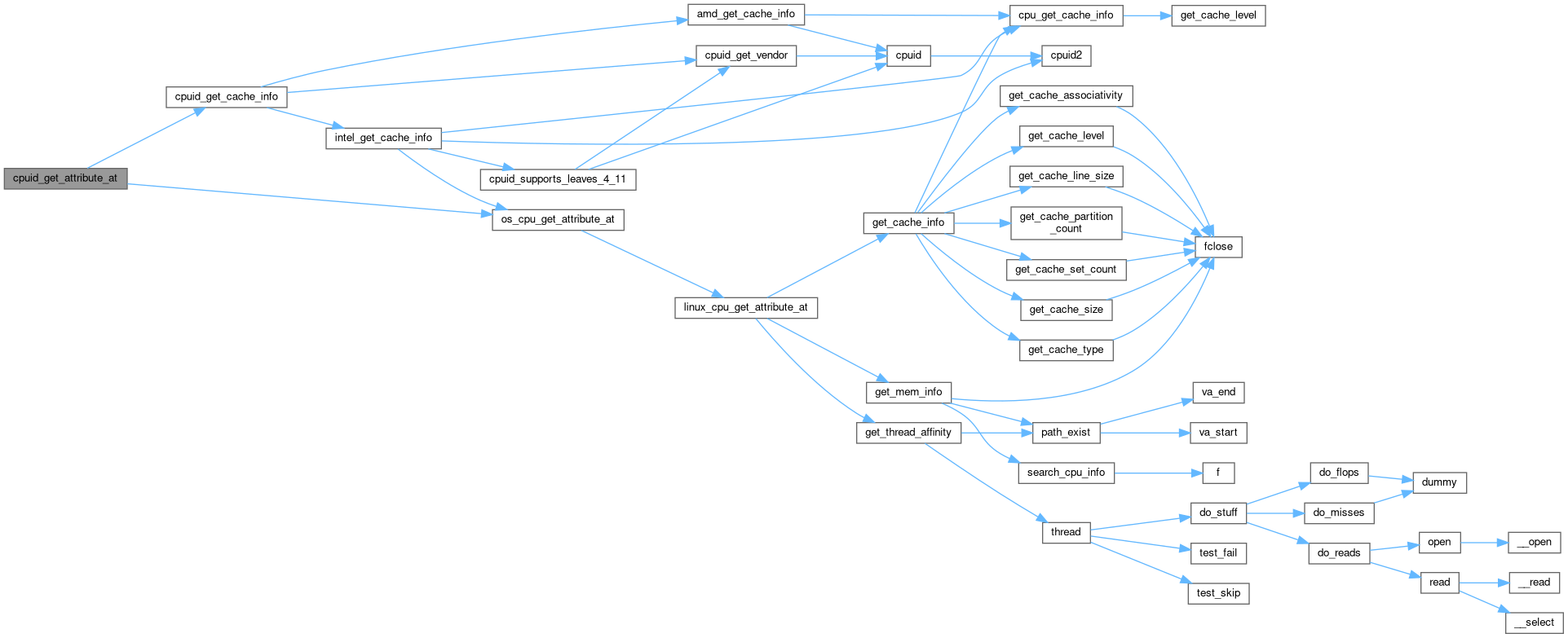

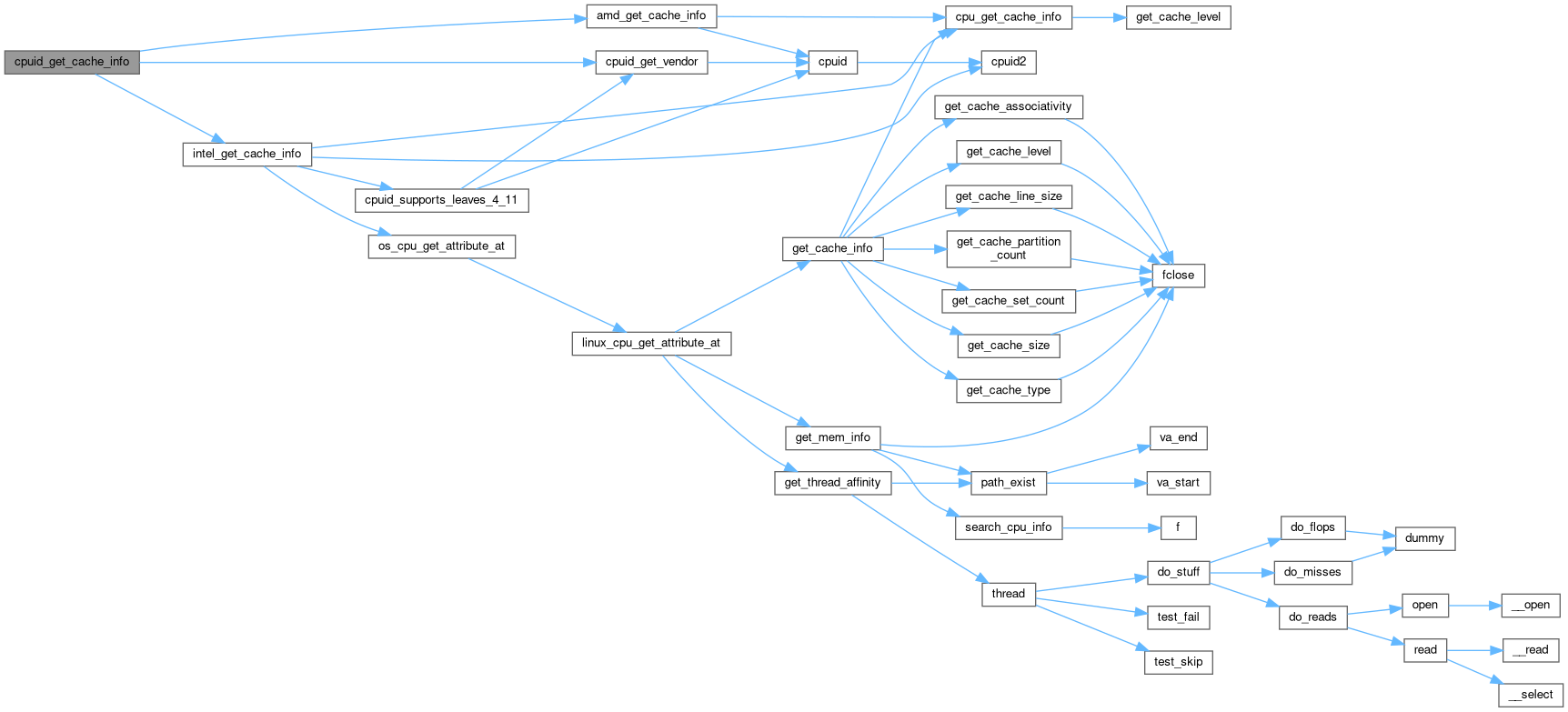

◆ amd_get_cache_info()

Storage class: static

Definition at line 381 of file x86_cpu_utils.c.

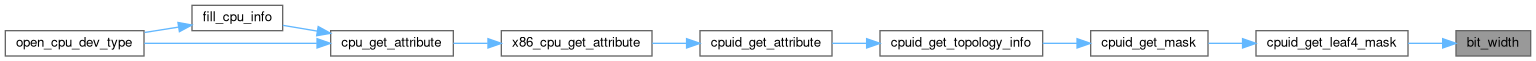

◆ bit_width()

Definition at line 736 of file x86_cpu_utils.c.

◆ cpuid()

Storage class: static

Definition at line 757 of file x86_cpu_utils.c.

◆ cpuid2()

Storage class: static

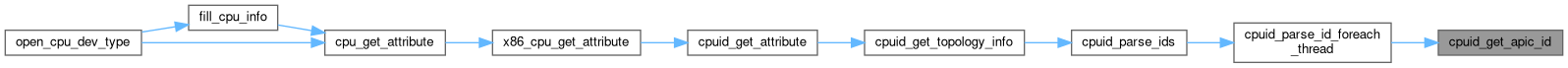

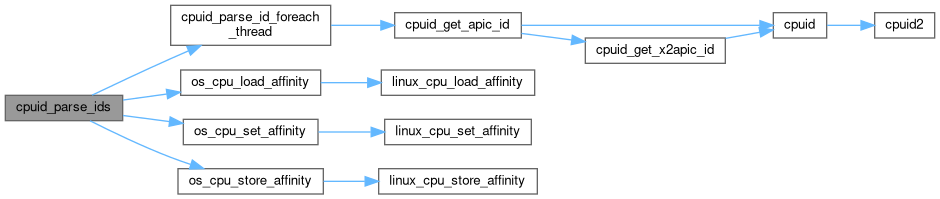

◆ cpuid_get_apic_id()

Storage class: static

Definition at line 724 of file x86_cpu_utils.c.

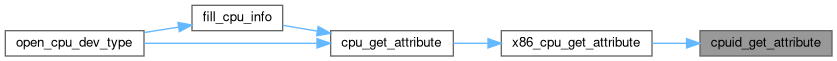

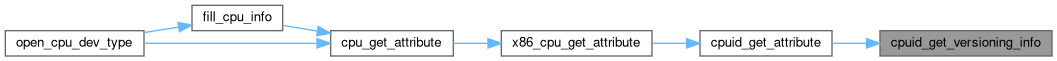

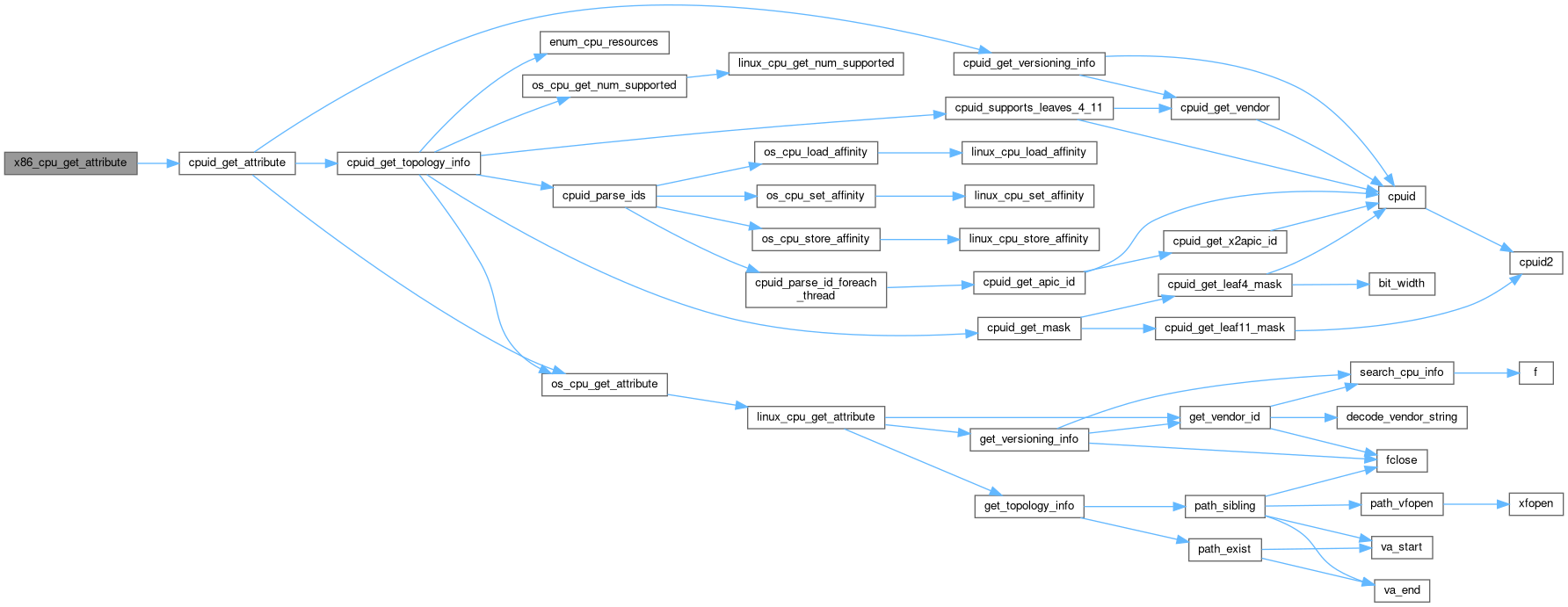

◆ cpuid_get_attribute()

Storage class: static

Definition at line 151 of file x86_cpu_utils.c.

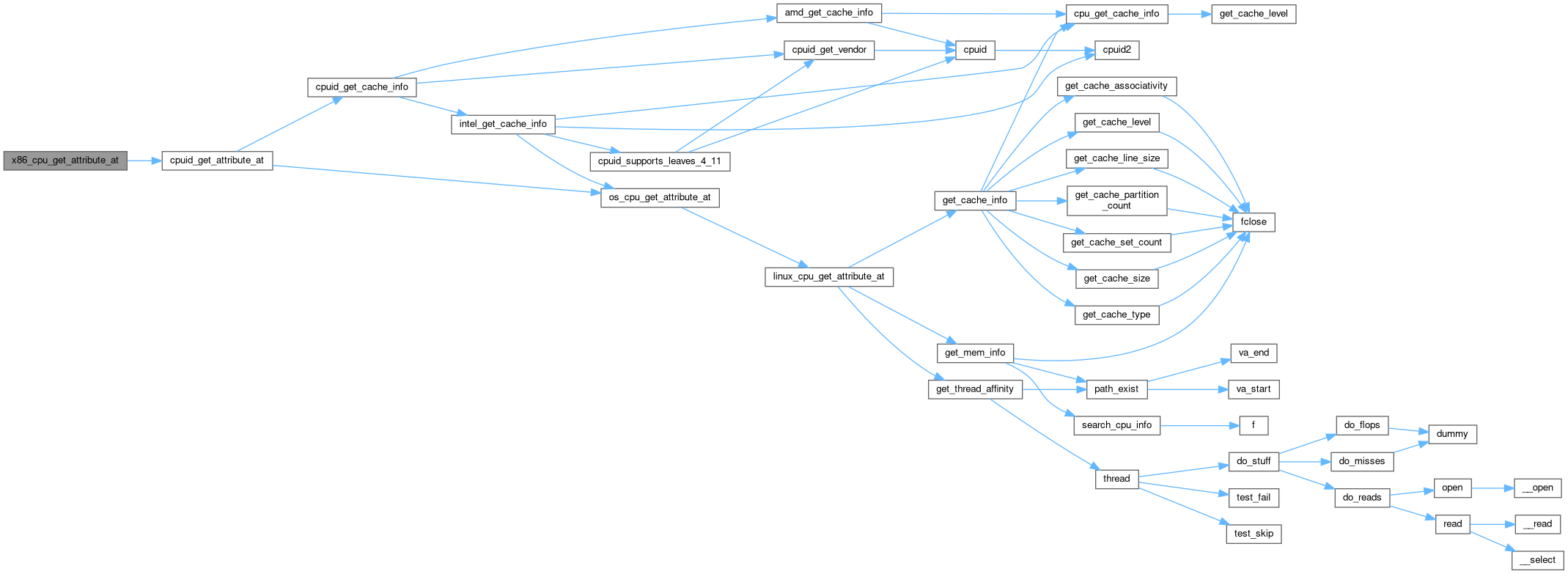

◆ cpuid_get_attribute_at()

Storage class: static

Definition at line 178 of file x86_cpu_utils.c.

◆ cpuid_get_cache_info()

Storage class: static

Definition at line 295 of file x86_cpu_utils.c.

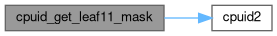

◆ cpuid_get_leaf11_mask()

Storage class: static

Definition at line 627 of file x86_cpu_utils.c.

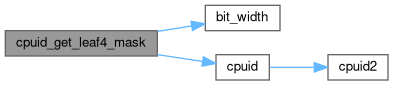

◆ cpuid_get_leaf4_mask()

Storage class: static

Definition at line 693 of file x86_cpu_utils.c.

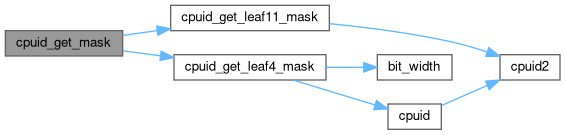

◆ cpuid_get_mask()

Storage class: static

Definition at line 617 of file x86_cpu_utils.c.

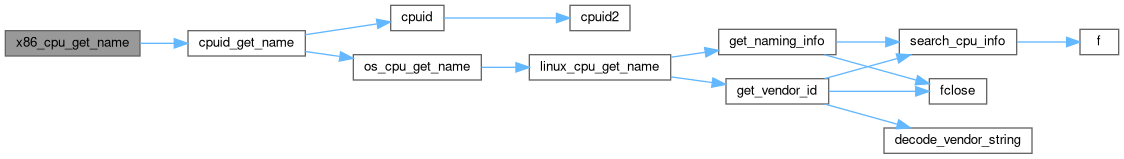

◆ cpuid_get_name()

Storage class: static

Definition at line 118 of file x86_cpu_utils.c.

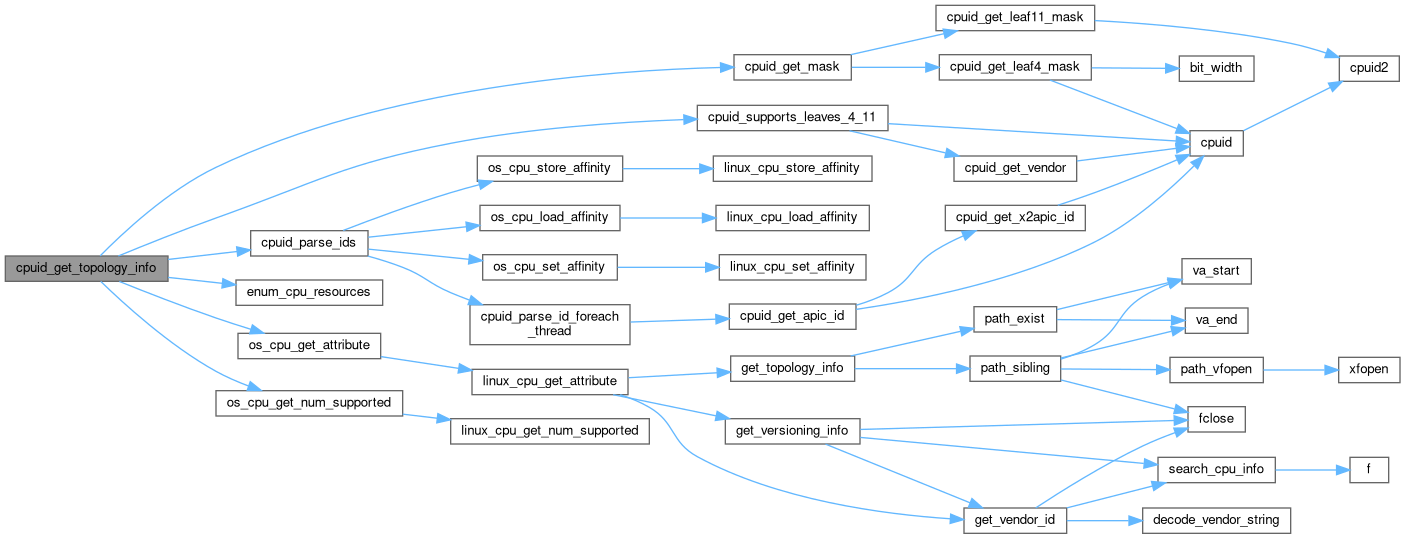

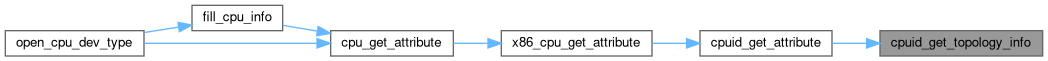

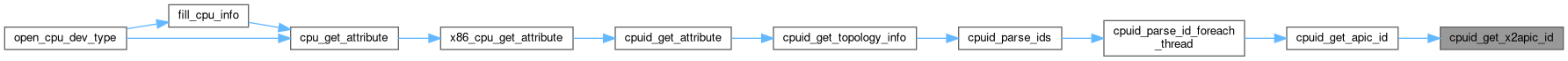

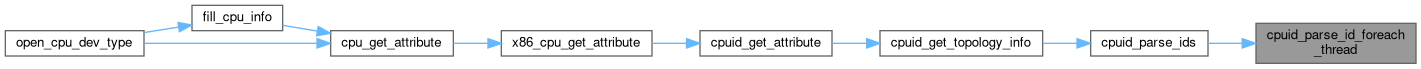

◆ cpuid_get_topology_info()

Storage class: static

Definition at line 212 of file x86_cpu_utils.c.

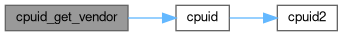

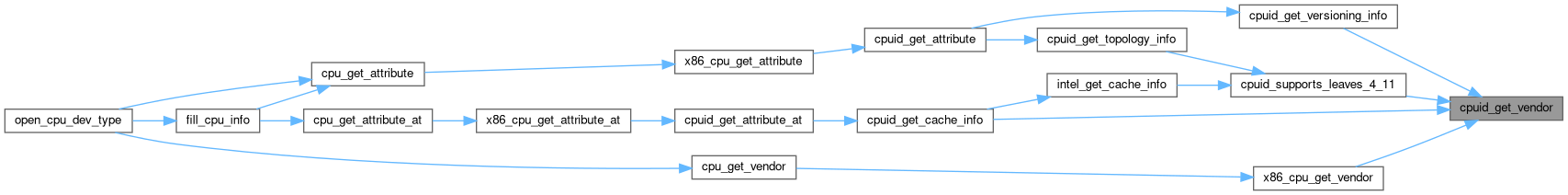

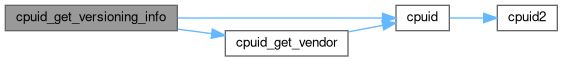

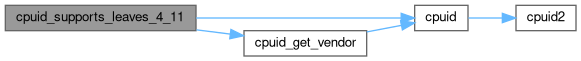

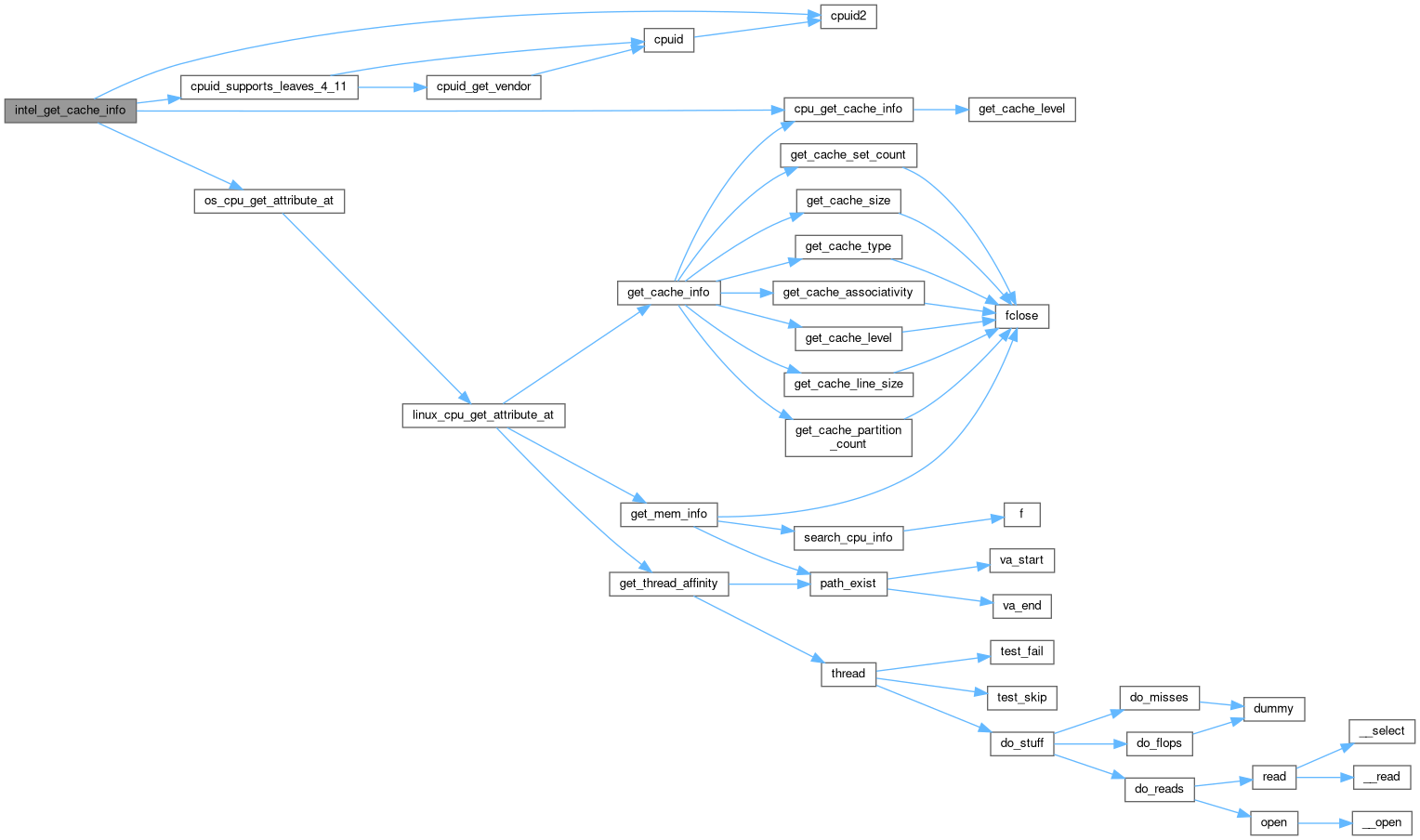

◆ cpuid_get_vendor()

Storage class: static

Definition at line 106 of file x86_cpu_utils.c.
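
This page does not show the body of cpuid_get_vendor(). As general background, CPUID leaf 0 returns a 12-byte vendor string packed into EBX, EDX and ECX, in that order, with the least significant byte of each register first. The sketch below only decodes register values that are passed in; the helper names unpack_reg and vendor_from_regs are hypothetical and are not part of x86_cpu_utils.c, and nothing here should be read as the file's actual implementation.

```c
#include <stdint.h>

/* Hypothetical helper (not from x86_cpu_utils.c): copy the four bytes of
 * one CPUID register into dst, least significant byte first. */
static void unpack_reg(uint32_t reg, char *dst)
{
    for (int i = 0; i < 4; ++i)
        dst[i] = (char)((reg >> (8 * i)) & 0xff);
}

/* Hypothetical helper: assemble the vendor string from the leaf-0
 * register values. The order is EBX, EDX, ECX; out must hold 13 bytes. */
static void vendor_from_regs(uint32_t ebx, uint32_t edx, uint32_t ecx,
                             char out[13])
{
    unpack_reg(ebx, out);
    unpack_reg(edx, out + 4);
    unpack_reg(ecx, out + 8);
    out[12] = '\0';
}
```

Extracting bytes with shifts rather than memcpy keeps the decoding independent of the byte order of the machine that runs the sketch.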

◆ cpuid_get_versioning_info()

Storage class: static

Definition at line 532 of file x86_cpu_utils.c.

◆ cpuid_get_x2apic_id()

Storage class: static

Definition at line 716 of file x86_cpu_utils.c.

◆ cpuid_parse_id_foreach_thread()

Storage class: static

Definition at line 576 of file x86_cpu_utils.c.

◆ cpuid_parse_ids()

Storage class: static

Definition at line 588 of file x86_cpu_utils.c.

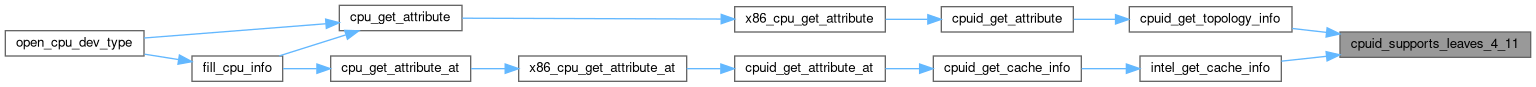

◆ cpuid_supports_leaves_4_11()

Storage class: static

Definition at line 449 of file x86_cpu_utils.c.

◆ enum_cpu_resources()

Storage class: static

Definition at line 470 of file x86_cpu_utils.c.

◆ intel_get_cache_info()

Storage class: static

Definition at line 314 of file x86_cpu_utils.c.
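
The body of intel_get_cache_info() is not reproduced on this page, so how it fills clevel is not shown. As background only, on Intel processors CPUID leaf 4 (the leaf that cpuid_has_leaf4 presumably tracks) encodes each cache's geometry as "value minus one" bit fields in EBX and ECX. The sketch below decodes those fields from register values passed in; the type leaf4_cache_t and the function decode_leaf4 are hypothetical names, and whether the PAPI code follows the same steps is an assumption.

```c
#include <stdint.h>

/* Hypothetical result type (not from x86_cpu_utils.c). */
typedef struct {
    uint32_t ways;        /* associativity */
    uint32_t partitions;  /* physical line partitions */
    uint32_t line_size;   /* bytes per cache line */
    uint32_t sets;        /* number of sets */
    uint64_t size_bytes;  /* total cache size */
} leaf4_cache_t;

/* Hypothetical helper: decode the CPUID leaf 4 geometry fields.
 *   EBX[31:22] = ways - 1
 *   EBX[21:12] = partitions - 1
 *   EBX[11:0]  = line size - 1
 *   ECX        = sets - 1 */
static leaf4_cache_t decode_leaf4(uint32_t ebx, uint32_t ecx)
{
    leaf4_cache_t c;
    c.ways       = ((ebx >> 22) & 0x3ffu) + 1;
    c.partitions = ((ebx >> 12) & 0x3ffu) + 1;
    c.line_size  = (ebx & 0xfffu) + 1;
    c.sets       = ecx + 1;
    /* Total size is the product of the four decoded quantities. */
    c.size_bytes = (uint64_t)c.ways * c.partitions * c.line_size * c.sets;
    return c;
}
```

For example, an 8-way cache with 64-byte lines and 64 sets corresponds to EBX = 0x01C0003F and ECX = 63, which decodes to 32768 bytes.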

◆ x86_cpu_finalize()

int x86_cpu_finalize(void)

Definition at line 76 of file x86_cpu_utils.c.

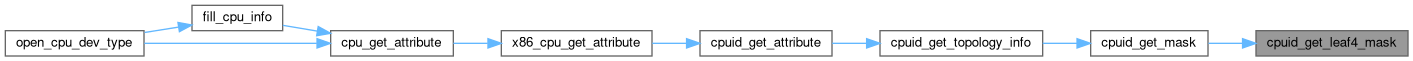

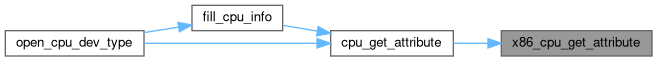

◆ x86_cpu_get_attribute()

int x86_cpu_get_attribute(CPU_attr_e attr, int *value)

Definition at line 94 of file x86_cpu_utils.c.

◆ x86_cpu_get_attribute_at()

int x86_cpu_get_attribute_at(CPU_attr_e attr, int loc, int *value)

Definition at line 100 of file x86_cpu_utils.c.

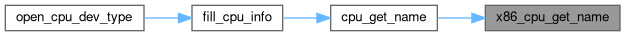

◆ x86_cpu_get_name()

int x86_cpu_get_name(char *name)

Definition at line 88 of file x86_cpu_utils.c.

◆ x86_cpu_get_vendor()

int x86_cpu_get_vendor(char *vendor)

Definition at line 82 of file x86_cpu_utils.c.

◆ x86_cpu_init()

int x86_cpu_init(void)

Definition at line 65 of file x86_cpu_utils.c.

Variable Documentation

◆ clevel

static _sysdetect_cache_level_info_t clevel[PAPI_MAX_MEM_HIERARCHY_LEVELS]

Definition at line 31 of file x86_cpu_utils.c.

◆ cpuid_has_leaf11

static int cpuid_has_leaf11

Definition at line 62 of file x86_cpu_utils.c.

◆ cpuid_has_leaf4

static int cpuid_has_leaf4

Definition at line 61 of file x86_cpu_utils.c.