News and Announcements

Top500 – U.S. Reclaims the Top Spot

In the 39th edition of the Top500, IBM’s Sequoia has beaten Fujitsu’s K Computer to become the fastest supercomputer on the planet. Sequoia, a BlueGene/Q-powered behemoth, is housed at the Lawrence Livermore National Laboratory in Livermore, California, and achieved 16.32 petaflop/s on the LINPACK benchmark using 1,572,864 cores. Sequoia’s raw performance is staggering, yes, but the machine is also one of the most energy-efficient systems on the Top500.

The new #1 was not the only activity at the top of the list: several machines were bumped in position, and two new systems made the top 10. Argonne National Laboratory’s Mira, another IBM BlueGene/Q machine in the U.S., debuted in 3rd place with 8.15 petaflop/s on the LINPACK benchmark using 786,432 cores. Italy has an IBM BlueGene/Q of its own, Fermi, which marks the country’s first entry into the top 10 with 1.72 petaflop/s using 163,840 cores. In all, 4 of the top 10 supercomputers are IBM BlueGene/Q systems.
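As a quick check on the figures quoted above, the following sketch divides each system's LINPACK result by its core count. The dictionary and the helper name `gflops_per_core` are illustrative only; the numbers are taken directly from the text.

```python
# LINPACK result (petaflop/s) and core count for each system, as quoted above.
systems = {
    "Sequoia": (16.32, 1_572_864),
    "Mira": (8.15, 786_432),
    "Fermi": (1.72, 163_840),
}

def gflops_per_core(pflops, cores):
    """Convert petaflop/s to gigaflop/s, then divide by the core count."""
    return pflops * 1e6 / cores

per_core = {name: gflops_per_core(*figures) for name, figures in systems.items()}
for name, value in per_core.items():
    print(f"{name}: {value:.2f} Gflop/s per core")
```

The three BlueGene/Q systems come out within a few percent of one another per core, which is what one would expect from machines built on the same processor.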

| Rank | Site | Computer |
|---|---|---|
| 1 | DOE/NNSA/LLNL, United States | Sequoia – BlueGene/Q, Power BQC 16C 1.60 GHz, Custom; IBM |
| 2 | RIKEN Advanced Institute for Computational Science (AICS), Japan | K computer, SPARC64 VIIIfx 2.0 GHz, Tofu interconnect; Fujitsu |
| 3 | DOE/SC/Argonne National Laboratory, United States | Mira – BlueGene/Q, Power BQC 16C 1.60 GHz, Custom; IBM |
| 4 | Leibniz Rechenzentrum, Germany | SuperMUC – iDataPlex DX360M4, Xeon E5-2680 8C 2.70 GHz, Infiniband FDR; IBM |
| 5 | National Supercomputing Center in Tianjin, China | Tianhe-1A – NUDT YH MPP, Xeon X5670 6C 2.93 GHz, NVIDIA 2050; NUDT |

See the full list at Top500.org.

Hear Rich Brueckner and Jack Dongarra discuss the new TOP500 list by listening to the following podcast from InsideHPC:

Audio: http://icl.cs.utk.edu/newsletter/files/2012-07/audio/DongarraPodcast.mp3

In this podcast, Jack Dongarra from the University of Tennessee discusses the June 2012 TOP500 list of the world’s most powerful supercomputers. The TOP500 is celebrating its 20th anniversary this year, and Jack tells us more about how it got started and how supercomputer performance measurement continues to evolve.

ICL Retreat 2012

As a reminder, mark your calendars for August 16-17 for the 2012 ICL Retreat! This year, we return to the Buckberry Lodge in Gatlinburg.

Shirley Moore

Shirley Moore, who has worked for ICL since January 1993, accepted a faculty position at the University of Texas at El Paso (UTEP) for the fall of 2012. She will be an Associate Professor of Computational and Computer Science (split position between the graduate Computational Science Program and the Computer Science Department). Her research at UTEP will focus on using code restructuring and performance tools to enhance the performance, maintainability, and evolvability of parallel scientific applications.

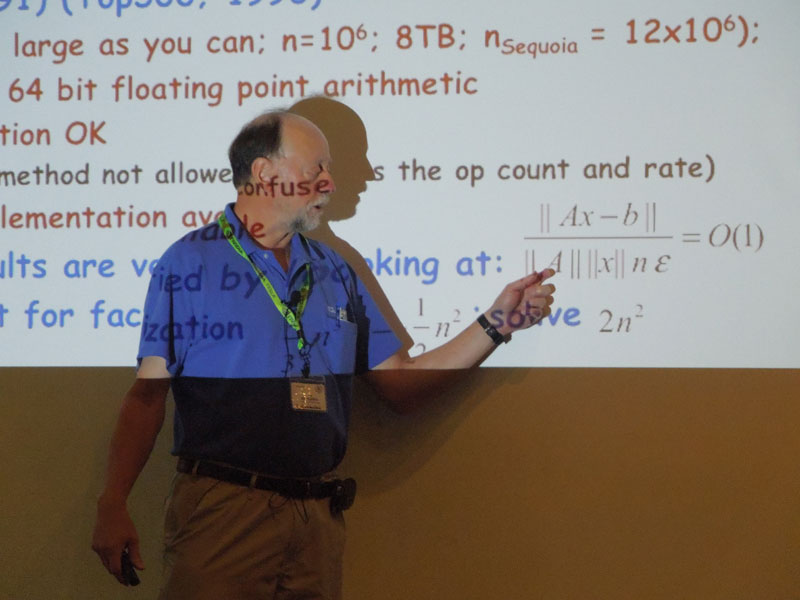

LINPACK is Still Going Strong

In a recent HPCwire interview, Tom Tabor had an in-depth discussion with Hans Meuer about the impact of the Top500 list over the span of its nearly 20-year existence. Hans explained how the list is compiled based on the best LINPACK performance, and how Jack Dongarra, dubbed the “father of LINPACK” by Meuer, joined the effort in 1993.

Using LINPACK to rank every machine on the Top500 is significant: the list is approaching its 20th year and has relied on essentially the same benchmark even as the machines have grown significantly larger and more powerful. Here is part of what Meuer had to say about LINPACK in the Top500:

We have been criticized for choosing LINPACK from the very beginning, but now in the 20th year, I believe that it was this particular choice that has contributed to the success of TOP500. Back then and also now, there simply isn’t an appropriate alternative to LINPACK. Any other benchmark would appear similarly specific, but would not be so readily available for all systems in question. One of LINPACK’s advantages is its scalability, in the sense that it has allowed us for the past 19 years to benchmark systems that cover a performance range of more than 11 orders of magnitude.

To read more about the LINPACK benchmark and the role of the Top500 in HPC, check out the full HPCwire interview.
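For readers who have not seen what a LINPACK-style measurement involves, here is a minimal sketch: solve a dense linear system by Gaussian elimination with partial pivoting, time the solve, and divide a nominal operation count by the elapsed time. It assumes the commonly quoted count of 2/3 n^3 + 2 n^2 floating-point operations. The function names `lu_solve` and `linpack_like` are made up for this illustration, and the pure-Python code is nothing like the tuned implementations actually used for Top500 submissions.

```python
import random
import time

def lu_solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    a = [row[:] for row in a]  # work on copies so the inputs are untouched
    b = b[:]
    for k in range(n):
        # Partial pivoting: move the largest remaining entry of column k up.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        # Eliminate column k from the rows below the pivot.
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k + 1, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x

def linpack_like(n, seed=0):
    """Time one solve; return (flop/s, max error) for a random n x n system."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [sum(row) for row in a]  # chosen so the exact solution is all ones
    start = time.perf_counter()
    x = lu_solve(a, b)
    elapsed = time.perf_counter() - start
    flops = (2.0 / 3.0) * n**3 + 2.0 * n**2  # nominal count, not measured
    error = max(abs(xi - 1.0) for xi in x)
    return flops / elapsed, error

rate, error = linpack_like(60)
print(f"{rate / 1e6:.2f} Mflop/s, max error {error:.2e}")
```

Because the same problem can be posed at any size n, the same recipe applies to a laptop and to a machine like Sequoia, which is the scalability Meuer refers to.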

Conference Reports

International Conference on Computational Science

This year’s International Conference on Computational Science (ICCS) was held in Omaha, Nebraska on June 4-6, and was the twelfth meeting of the ICCS. The theme for this year’s meeting was “Empowering Science through Computing,” in order to emphasize the growing importance of computational science theory and practice.

Several ICLers attended the meeting, including Jack Dongarra, Peng Du, Ichitaro Yamazaki, and Mark Gates. Jack moderated a session of GPU-related papers in which Mark presented work on block-asynchronous multigrid smoothers, a team effort with Hartwig and Stan. Ichi also presented Yulu’s multi-core, multi-GPU paper and some work on multi-core LU and Cholesky factorizations. In a separate session, Peng presented a paper on fault-tolerant LU. ICL alumnus Marc Baboulin also presented a paper on the random butterfly applied to LU, a paper that was co-authored by Jack, Simplice, and Stan.

International Supercomputing Conference

The 2012 International Supercomputing Conference (ISC’12), held on June 17-21 at the Congress Center in Hamburg, Germany, drew a record 2,403 attendees and 175 of the world’s leading high performance computing vendors and research organizations.

During the proceedings, the University of Tennessee’s Jack Dongarra was named an ISC Fellow along with Thomas Ludwig of the German Climate Computing Center. Jack also gave a talk highlighting the latest accomplishments of the MAGMA project during the Application Performance session, and presented Top500 awards during the conference’s opening session.

Jakub Kurzak, Hatem Ltaief, and Jack Dongarra also gave a tutorial on Dense Linear Algebra Libraries for High Performance Computing, including a retrospective of LINPACK, LAPACK, and ScaLAPACK, a look at developments in PLASMA and MAGMA, and the ongoing efforts in linear algebra software for distributed memory machines (DAGuE/DPLASMA).

Recent Releases

MAGMA 1.2.1 Released

MAGMA 1.2.1 is now available for download. This release includes the following updates:

- Removed dependency on CUDA device driver.

- Added magma_malloc_cpu to allocate CPU memory aligned to a 32-byte boundary for performance and reproducibility.

- Added QR with pivoting in CPU interface (functions [zcsd]geqp3).

- Added hegst/sygst Fortran interface.

- Improved performance of gesv CPU interface by 30%.

- Improved performance of ungqr/orgqr CPU and GPU interfaces by 30%; more for small matrices.

- Numerous bug fixes.

Visit the MAGMA website to download the tarball.

clMAGMA 0.3 Released

clMAGMA 0.3 is now available. This release provides OpenCL support for the main two-sided matrix factorizations, the basis for eigenproblem and SVD solvers. clMAGMA 0.3 includes the following new functionalities:

- Reduction to upper Hessenberg form by orthogonal similarity transformations (routines magma_{z|c|d|s}gehrd).

- Reduction to upper/lower bidiagonal form by orthogonal transformations (routines magma_{z|c|d|s}gebrd).

- Reduction to tridiagonal form by orthogonal similarity transformations (routines magma_{zhe|che|dsy|ssy}trd).

Visit the MAGMA website to download the tarball.
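To illustrate what a two-sided reduction does, the sketch below reduces a small real matrix to upper Hessenberg form with Householder reflections applied from both the left and the right. It is an unblocked, CPU-only, pure-Python illustration and makes no claim about how clMAGMA implements these routines; the function name `hessenberg` is hypothetical. When the input is symmetric, the same procedure yields a tridiagonal matrix.

```python
import math

def hessenberg(a):
    """Return an upper Hessenberg matrix orthogonally similar to the real matrix a."""
    n = len(a)
    h = [row[:] for row in a]  # work on a copy
    for k in range(n - 2):
        # Column k below the diagonal: everything except its first entry is zeroed.
        x = [h[i][k] for i in range(k + 1, n)]
        norm_x = math.sqrt(sum(t * t for t in x))
        if norm_x == 0.0:
            continue  # column is already in the desired form
        # Householder vector v = x + sign(x[0]) * ||x|| * e1, normalized.
        v = x[:]
        v[0] += math.copysign(norm_x, x[0])
        norm_v = math.sqrt(sum(t * t for t in v))
        v = [t / norm_v for t in v]
        m = len(v)
        # Apply P = I - 2 v v^T from the left to rows k+1 .. n-1.
        for j in range(n):
            dot = sum(v[i] * h[k + 1 + i][j] for i in range(m))
            for i in range(m):
                h[k + 1 + i][j] -= 2.0 * v[i] * dot
        # Apply the same P from the right to columns k+1 .. n-1.
        for i in range(n):
            dot = sum(h[i][k + 1 + j] * v[j] for j in range(m))
            for j in range(m):
                h[i][k + 1 + j] -= 2.0 * dot * v[j]
    return h

# A symmetric example, so the result should be tridiagonal.
a = [
    [4.0, 1.0, 2.0, 3.0],
    [1.0, 3.0, 0.0, 1.0],
    [2.0, 0.0, 2.0, 1.0],
    [3.0, 1.0, 1.0, 1.0],
]
h = hessenberg(a)
for row in h:
    print(" ".join(f"{value:9.4f}" for value in row))
```

Applying the reflection on both sides is what makes this a similarity transformation, so the eigenvalues (and therefore the trace) of the matrix are preserved. The bidiagonal reduction differs in that it uses different orthogonal matrices on the left and on the right.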

Interview

Wesley Bland

Where are you from, originally?

I was born in Knoxville and have spent most of my life here.

Can you summarize your educational background?

I did my undergrad at Tennessee Tech in Cookeville, TN. While I was there, I spent my summers at ORNL working internships with two different groups. I came to UTK in 2007 and received my M.S. in 2009. I’m still here and still working on my Ph.D., which I’m targeting for next year.

Tell us how you first learned about ICL.

I learned about the ICL when I came to grad school at UTK. I spent my first year here as a graduate teaching assistant for CS 100. Wes Alvaro was in one of my classes and talked about the ICL, so I came to one of the recruiting lunches. That summer I talked to George and his team, and joined the ICL in the fall.

What made you want to work for ICL?

The ICL seemed like the group at UTK that was doing work most closely related to my previous research. I was interested in joining a group where I would be able to keep working on HPC problems while pursuing my Ph.D. I ended up doing something completely different than my undergraduate work, but it’s been great to learn new areas of computer science.

What are you working on while at ICL?

I work in the distributed computing group on MPI-level fault tolerance. Most recently, I’ve been working with George, Thomas, and Aurelien on an effort to standardize and implement fault tolerance in the MPI forum. I’m also working with teams from the University of Georgia and Vanderbilt University to bring FT techniques to their applications.

What do you plan on doing after graduation?

My goal is to eventually get back into a university as a professor. I’ve always enjoyed teaching and want to continue doing that. In the meantime, I’d like to try something a little different than academic research. I’d like to try out a job in industry for a while.

What are your interests/hobbies outside of work?

I enjoy all the usual nerd things: books, video games, side programming projects, science fiction. I also coach a basketball team at my church and participate in various musical groups. I follow Manchester United whenever possible, and my wife Julie and I play with my dog Ada (named after who you think she’s named after).

Tell us something about yourself that might surprise people.

I started programming when I was 8. The library at school had a book called “Learn to make your own computer games” which had a bunch of simple BASIC programs like Guess a Number and Tic-Tac-Toe. I checked it out and compiled them all on our old computer at home. Between that and the fact that both of my parents were computer scientists, I guess there wasn’t much choice about what I was going to end up doing.