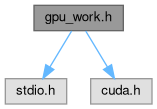

Include dependency graph for gpu_work.h:

| Macros | |
|---|---|
| `#define` | `_GW_CALL(call)` |

| Functions | |
|---|---|
| `__global__ void` | `VecAdd(const int *A, const int *B, int *C, int N)` |
| `__global__ void` | `VecSub(const int *A, const int *B, int *C, int N)` |
| `static void` | `initVec(int *vec, int n)` |
| `static void` | `cleanUp(int *h_A, int *h_B, int *h_C, int *h_D, int *d_A, int *d_B, int *d_C, int *d_D)` |
| `static void` | `VectorAddSubtract(int N, int quiet)` |

Macro Definition Documentation

◆ _GW_CALL

`#define _GW_CALL(call)`

Value:

```cuda
do { \
    cudaError_t _status = (call); \
    if (_status != cudaSuccess) { \
        /* (line not captured in this listing; it refers to stderr) */ \
    } \
} while (0);
```

Definition at line 4 of file gpu_work.h.
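The captured value of `_GW_CALL` omits the statement inside the `if`, so what the macro does on failure is not visible here. As a minimal sketch, assuming the intent is to print the failing call and the `cudaGetErrorString` message to stderr and then exit, a complete macro in the same `do { ... } while (0)` form could look like this; the name `CHECK_CUDA` is hypothetical.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// CHECK_CUDA is a hypothetical stand-in for _GW_CALL. It evaluates `call`
// once; on failure it prints file, line, the call text and the CUDA error
// string to stderr, then terminates the process.
#define CHECK_CUDA(call)                                              \
    do {                                                              \
        cudaError_t check_status_ = (call);                           \
        if (check_status_ != cudaSuccess) {                           \
            std::fprintf(stderr, "%s:%d: %s failed: %s\n",            \
                         __FILE__, __LINE__, #call,                   \
                         cudaGetErrorString(check_status_));          \
            std::exit(EXIT_FAILURE);                                  \
        }                                                             \
    } while (0) /* no trailing ';': the caller's own ';' ends the statement */
```

Leaving the semicolon off `while (0)` is the point of the idiom. The value documented above ends in `while (0);`, so `if (p) _GW_CALL(cudaFree(p)); else ...` would expand to two statements and the `else` would no longer compile. With the sketch, `CHECK_CUDA(cudaMalloc(&d_A, size));` behaves as a single statement anywhere one is allowed.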

Function Documentation

◆ cleanUp()

`static void cleanUp(int *h_A, int *h_B, int *h_C, int *h_D, int *d_A, int *d_B, int *d_C, int *d_D)`

Definition at line 34 of file gpu_work.h.

```cuda
35 {
36     if (d_A)
37         _GW_CALL(cudaFree(d_A));
38     if (d_B)
39         _GW_CALL(cudaFree(d_B));
40     if (d_C)
41         _GW_CALL(cudaFree(d_C));
42     if (d_D)
43         _GW_CALL(cudaFree(d_D));
44
45     // Free host memory
46     if (h_A)
47         free(h_A);
48     if (h_B)
49         free(h_B);
50     if (h_C)
51         free(h_C);
52     if (h_D)
53         free(h_D);
54 }
```
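The `NULL` checks in `cleanUp` are harmless but not required: the C standard makes `free(NULL)` a no-op, and the CUDA Runtime documents that `cudaFree` performs no operation on a null pointer. A hedged sketch of an equivalent helper, under the hypothetical name `releaseAll`, drops the checks, remembers the first `cudaFree` failure instead of stopping at it, and resets every pointer to `nullptr` so that a repeated call cannot double-free.

```cuda
#include <cstdlib>
#include <cuda_runtime.h>

// releaseAll is a hypothetical alternative to cleanUp. free(NULL) and
// cudaFree(NULL) are documented no-ops, so no null checks are needed.
// Every pointer is reset to nullptr, so calling releaseAll twice is safe.
static cudaError_t releaseAll(int **host, int nHost, int **dev, int nDev) {
    cudaError_t first = cudaSuccess;
    for (int k = 0; k < nDev; ++k) {
        cudaError_t status = cudaFree(dev[k]);
        if (first == cudaSuccess)
            first = status;          // keep the first failure, but free the rest
        dev[k] = nullptr;
    }
    for (int k = 0; k < nHost; ++k) {
        std::free(host[k]);
        host[k] = nullptr;
    }
    return first;
}
```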

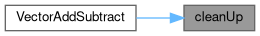

Here is the caller graph for this function:

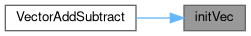

◆ initVec()
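Only the declaration `static void initVec(int *vec, int n)` is listed for this function, so its fill pattern is unknown. A hypothetical stand-in, `initVecSketch`, is shown below. It assumes pseudo-random input is acceptable and bounds each value to `[0, 1000)`, so the element-wise sums and differences computed later cannot overflow an `int` (signed overflow would make a host-side check undefined).

```cuda
#include <cstdlib>

// initVecSketch is hypothetical; the real initVec body is not shown.
// std::rand() returns a value in [0, RAND_MAX], so the remainder lies in
// [0, 999] and A[i] + B[i] or A[i] - B[i] stays far from INT_MAX.
static void initVecSketch(int *vec, int n) {
    for (int i = 0; i < n; ++i)
        vec[i] = std::rand() % 1000;
}
```

Seeding with a fixed value through `std::srand` before filling makes runs reproducible, which helps when a verification failure has to be replayed.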

◆ VecAdd()

Definition at line 13 of file gpu_work.h.

◆ VecSub()
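The page lists the signatures of `VecAdd` and `VecSub` but not their bodies. A common one-thread-per-element shape for such kernels is sketched below under hypothetical names. It assumes each thread handles the element at its global index and that threads past `N` do nothing; that guard is needed because `VectorAddSubtract` rounds the grid up to whole blocks of 256 threads.

```cuda
#include <cuda_runtime.h>

// Hypothetical kernels with the same parameter lists as VecAdd and VecSub.
// Each thread computes one element: C[i] = A[i] + B[i] or C[i] = A[i] - B[i].
__global__ void VecAddSketch(const int *A, const int *B, int *C, int N) {
    int i = blockDim.x * blockIdx.x + threadIdx.x;  // global element index
    if (i < N)                                       // the last block may extend past N
        C[i] = A[i] + B[i];
}

__global__ void VecSubSketch(const int *A, const int *B, int *C, int N) {
    int i = blockDim.x * blockIdx.x + threadIdx.x;  // global element index
    if (i < N)
        C[i] = A[i] - B[i];
}
```

Launched with `(N + 255) / 256` blocks of 256 threads, exactly `N` threads pass the `i < N` guard and the rest exit without touching memory.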

◆ VectorAddSubtract()

Definition at line 56 of file gpu_work.h.

```cuda
 57 {
 60     int threadsPerBlock = 0;
 61     int blocksPerGrid = 0;
 62     int *h_A, *h_B, *h_C, *h_D;
 63     int *d_A, *d_B, *d_C, *d_D;
 65     int device;
 66     cudaGetDevice(&device);
 67     // Allocate input vectors h_A and h_B in host memory
 68     h_A = (int*)malloc(size);
 69     h_B = (int*)malloc(size);
 70     h_C = (int*)malloc(size);
 71     h_D = (int*)malloc(size);
 72     if (h_A == NULL || h_B == NULL || h_C == NULL || h_D == NULL) {
 74     }
 75
 76     // Initialize input vectors
 79     memset(h_C, 0, size);
 80     memset(h_D, 0, size);
 81
 82     // Allocate vectors in device memory
 87
 88     // Copy vectors from host memory to device memory
 89     _GW_CALL(cudaMemcpy(d_A, h_A, size, cudaMemcpyHostToDevice));
 90     _GW_CALL(cudaMemcpy(d_B, h_B, size, cudaMemcpyHostToDevice));
 91
 92     // Invoke kernel
 93     threadsPerBlock = 256;
 94     blocksPerGrid = (N + threadsPerBlock - 1) / threadsPerBlock;
 96             device, blocksPerGrid, threadsPerBlock);
```
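The grid size on line 94 is a ceiling division: the smallest number of 256-thread blocks whose threads cover all `N` elements. For example, `N = 1000` gives `(1000 + 255) / 256 = 1255 / 256 = 4` blocks, or 1024 threads, of which the last 24 must be masked off inside the kernel. A small host-side helper, with the hypothetical name `blocksFor`, computes the same value in a form that cannot overflow when `N` is close to `INT_MAX`.

```cuda
// blocksFor is a hypothetical helper. For N >= 0 and threadsPerBlock > 0 it
// returns ceil(N / threadsPerBlock), the same value as
// (N + threadsPerBlock - 1) / threadsPerBlock, without forming that sum,
// which can overflow int for very large N.
static int blocksFor(int N, int threadsPerBlock) {
    return N / threadsPerBlock + (N % threadsPerBlock != 0);
}
```

Note that `N == 0` yields a zero-block grid, which CUDA rejects as an invalid launch configuration, so a caller may prefer to return early in that case.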

```cuda
 97
 98     VecAdd<<<blocksPerGrid, threadsPerBlock>>>(d_A, d_B, d_C, N);
 99
100     VecSub<<<blocksPerGrid, threadsPerBlock>>>(d_A, d_B, d_D, N);
```
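The launches on lines 98 and 100 return no status. A launch-configuration error is therefore not reported by the launch itself, and an error raised while a kernel runs surfaces only at a later synchronizing call such as the `cudaMemcpy` on line 104. A minimal sketch of checking a launch explicitly follows; it uses a hypothetical kernel `fillKernel` so the example is self-contained. `cudaGetLastError()` reports launch-time errors such as an oversized block, and `cudaDeviceSynchronize()` reports errors raised during execution.

```cuda
#include <cuda_runtime.h>

// Hypothetical kernel used only to make this example self-contained.
__global__ void fillKernel(int *out, int n, int value) {
    int i = blockDim.x * blockIdx.x + threadIdx.x;
    if (i < n)
        out[i] = value;
}

// Launches fillKernel and returns the first error observed either at launch
// time (cudaGetLastError) or during execution (cudaDeviceSynchronize).
static cudaError_t launchAndCheck(int *d_out, int n, int blocks, int threads) {
    fillKernel<<<blocks, threads>>>(d_out, n, 7);
    cudaError_t status = cudaGetLastError();  // e.g. more threads per block than allowed
    if (status != cudaSuccess)
        return status;
    return cudaDeviceSynchronize();           // waits for the kernel, reports its faults
}
```

Synchronizing after every launch serializes host and device, so it is usually reserved for debug builds; in `VectorAddSubtract` the blocking device-to-host `cudaMemcpy` calls already act as a synchronization point.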

```cuda
101
102     // Copy result from device memory to host memory
103     // h_C contains the result in host memory
104     _GW_CALL(cudaMemcpy(h_C, d_C, size, cudaMemcpyDeviceToHost));
105     _GW_CALL(cudaMemcpy(h_D, d_D, size, cudaMemcpyDeviceToHost));
106     if (!quiet) fprintf(stderr, "Kernel launch complete and mem copied back from device %d\n", device);
107     // Verify result
113             exit(-1);
114         }
115     }
116
117     cleanUp(h_A, h_B, h_C, h_D, d_A, d_B, d_C, d_D);
118 }
```
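Lines 108-112 of the verification step are not captured; the visible tail (`exit(-1)` inside two closing braces) suggests a loop that exits on the first wrong element. A hedged sketch of such a check for both outputs is shown as a hypothetical `verifyResults`. It returns the first mismatching index, or -1, instead of exiting, so the caller can still release memory through `cleanUp`.

```cuda
#include <cstdio>

// verifyResults is hypothetical; the original loop is not shown.
// It checks C[i] == A[i] + B[i] and D[i] == A[i] - B[i] for every i,
// reports the first mismatch on stderr, and returns its index (-1 if none).
static int verifyResults(const int *h_A, const int *h_B,
                         const int *h_C, const int *h_D, int N) {
    for (int i = 0; i < N; ++i) {
        if (h_C[i] != h_A[i] + h_B[i] || h_D[i] != h_A[i] - h_B[i]) {
            std::fprintf(stderr, "Mismatch at %d: C=%d D=%d (A=%d, B=%d)\n",
                         i, h_C[i], h_D[i], h_A[i], h_B[i]);
            return i;
        }
    }
    return -1;
}
```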


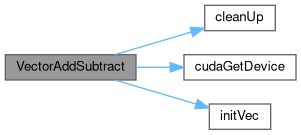

Here is the call graph for this function: