
libpfms.c File Reference

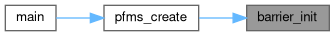

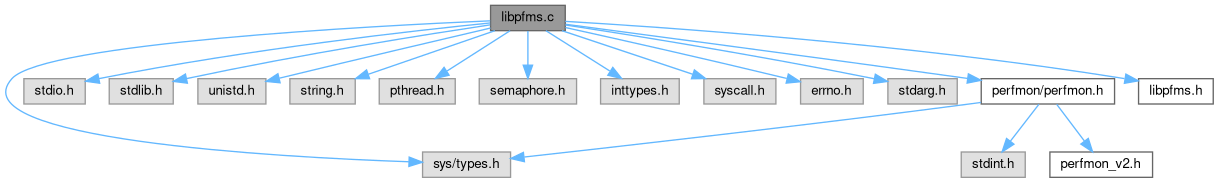

Include dependency graph for libpfms.c:


Data Structures

| Type | Name |
|---|---|
| struct | barrier_t |
| struct | pfms_cpu_t |
| struct | pfms_thread_t |
| struct | pfms_session_t |

Macros

| Directive | Macro |
|---|---|
| #define | `dprint(format, arg...)` |

Enumerations

| Type | Definition |
|---|---|
| enum | `pfms_cmd_t { CMD_NONE, CMD_CTX, CMD_LOAD, CMD_UNLOAD, CMD_WPMCS, CMD_WPMDS, CMD_RPMDS, CMD_STOP, CMD_START, CMD_CLOSE }` |

Functions

| Return type | Function |
|---|---|
| static int | `barrier_init(barrier_t *b, uint32_t count)` |
| static void | `cleanup_barrier(void *arg)` |
| static int | `barrier_wait(barrier_t *b)` |
| static int | `pin_cpu(uint32_t cpu)` |
| static void | `pfms_thread_mainloop(void *arg)` |
| static int | `create_one_wthread(int cpu)` |
| static int | `create_wthreads(uint64_t *cpu_list, uint32_t n)` |
| int | `pfms_initialize(void)` |
| int | `pfms_create(uint64_t *cpu_list, size_t n, pfarg_ctx_t *ctx, pfms_ovfl_t *ovfl, void **desc)` |
| int | `pfms_load(void *desc)` |
| static int | `__pfms_do_simple_cmd(pfms_cmd_t cmd, void *desc, void *data, uint32_t n)` |
| int | `pfms_unload(void *desc)` |
| int | `pfms_start(void *desc)` |
| int | `pfms_stop(void *desc)` |
| int | `pfms_write_pmcs(void *desc, pfarg_pmc_t *pmcs, uint32_t n)` |
| int | `pfms_write_pmds(void *desc, pfarg_pmd_t *pmds, uint32_t n)` |
| int | `pfms_close(void *desc)` |
| int | `pfms_read_pmds(void *desc, pfarg_pmd_t *pmds, uint32_t n)` |

Variables

| Type | Name |
|---|---|
| static uint32_t | `ncpus` |
| static `pfms_thread_t *` | `tds` |
| static pthread_mutex_t | `tds_lock = PTHREAD_MUTEX_INITIALIZER` |

Macro Definition Documentation

◆ dprint

Enumeration Type Documentation

◆ pfms_cmd_t

enum pfms_cmd_t

Function Documentation

◆ __pfms_do_simple_cmd()

static int __pfms_do_simple_cmd(pfms_cmd_t cmd, void *desc, void *data, uint32_t n)

Definition at line 528 of file libpfms.c.

```
529{
530 size_t k;
532 int ret;
533
534 if (desc == NULL) {
536 return -1;
537 }
539
542 return -1;
543 }
544 /*
545 * send create context order
546 */
552 sem_post(&tds[k].cmd_sem);
553 }
554 }
556
557 ret = 0;
558
559 /*
560 * check for errors
561 */
565 if (ret) {
567 break;
568 }
569 }
570 }
571 /*
572 * simple commands cannot be undone
573 */
574 return ret ? -1 : 0;
575}
```

Definition: libpfms.c:58

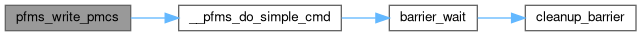

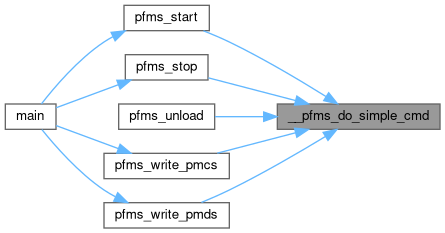

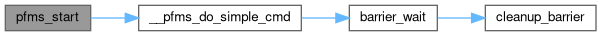

Here is the call graph for this function:

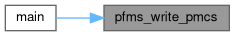

Here is the caller graph for this function:
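`__pfms_do_simple_cmd()` hands the same command to every worker thread by posting each thread's `cmd_sem`. It then checks each thread's result and stops at the first error. The listing's loop bodies are partly elided, so the following is not a reconstruction of them. It is a minimal sketch of the broadcast-and-check pattern under stated assumptions:

- it uses POSIX `pthread_barrier_t` in place of the file's own `barrier_t`;
- the names `worker`, `broadcast`, and `run_round` are hypothetical;
- the `fail` field is a test hook, not part of libpfms.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <stdlib.h>

/* Hypothetical command set; not the libpfms pfms_cmd_t values. */
enum cmd { CMD_WORK, CMD_QUIT };

struct worker {
	pthread_t tid;
	sem_t cmd_sem;              /* posted by the controller to hand over a command */
	enum cmd cmd;               /* command to execute next */
	int fail;                   /* test hook: make CMD_WORK fail on this worker */
	int ret;                    /* per-worker result, read by the controller */
	pthread_barrier_t *barrier; /* shared rendezvous point */
};

static void *worker_loop(void *arg)
{
	struct worker *w = arg;

	for (;;) {
		sem_wait(&w->cmd_sem);
		enum cmd c = w->cmd;
		w->ret = (c == CMD_WORK && w->fail) ? -1 : 0;
		pthread_barrier_wait(w->barrier); /* tell the controller we are done */
		if (c == CMD_QUIT)
			return NULL;
	}
}

/* Post cmd to every worker, wait for all of them, then scan the results. */
static int broadcast(struct worker *ws, size_t n, pthread_barrier_t *b, enum cmd cmd)
{
	size_t k;
	int ret = 0;

	for (k = 0; k < n; k++) {
		ws[k].cmd = cmd;
		sem_post(&ws[k].cmd_sem);
	}
	pthread_barrier_wait(b); /* count is n + 1: the workers plus this thread */
	for (k = 0; k < n; k++) {
		if (ws[k].ret) {
			ret = -1;
			break;
		}
	}
	return ret;
}

/* Start n workers, run one CMD_WORK round, shut them down, return the round status. */
static int run_round(size_t n, long failing_worker)
{
	pthread_barrier_t b;
	struct worker *ws;
	size_t k;
	int ret;

	assert(n > 0);
	ws = calloc(n, sizeof(*ws));
	if (ws == NULL)
		return -1;
	pthread_barrier_init(&b, NULL, (unsigned)(n + 1));
	for (k = 0; k < n; k++) {
		sem_init(&ws[k].cmd_sem, 0, 0);
		ws[k].barrier = &b;
		ws[k].fail = ((long)k == failing_worker);
		pthread_create(&ws[k].tid, NULL, worker_loop, &ws[k]);
	}
	ret = broadcast(ws, n, &b, CMD_WORK);
	(void)broadcast(ws, n, &b, CMD_QUIT);
	for (k = 0; k < n; k++) {
		pthread_join(ws[k].tid, NULL);
		sem_destroy(&ws[k].cmd_sem);
	}
	pthread_barrier_destroy(&b);
	free(ws);
	return ret;
}
```

As in the listing ("simple commands cannot be undone"), the controller makes no attempt to roll back a failed command. It only reports -1.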

◆ barrier_init()

◆ barrier_wait()

Definition at line 95 of file libpfms.c.

```
96{
97 uint64_t generation;
98 int oldstate;
99
101
102 pthread_mutex_lock(&b->mutex);
103
104 pthread_testcancel();
105
107
108 /* reset barrier */
110 /*
111 * bump generation number, this avoids thread getting stuck in the
112 * wake up loop below in case a thread just out of the barrier goes
113 * back in right away before all the thread from the previous "round"
114 * have "escaped".
115 */
116 b->generation++;
117
118 pthread_cond_broadcast(&b->cond);
119 } else {
120
121 generation = b->generation;
122
123 pthread_setcancelstate(PTHREAD_CANCEL_ENABLE, &oldstate);
124
127 }
128
129 pthread_setcancelstate(oldstate, NULL);
130 }
131 pthread_mutex_unlock(&b->mutex);
132
133 pthread_cleanup_pop(0);
134
135 return 0;
136}
```

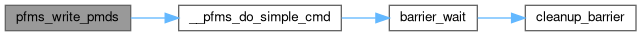

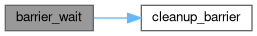

Here is the call graph for this function:

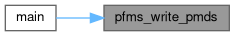

Here is the caller graph for this function:
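The comment in `barrier_wait()` describes a generation-counting barrier. The last thread to arrive resets the barrier and bumps `generation`. The other threads wait until the generation they saw on entry changes.

The listing omits the lines that decrement the counter and wait on the condition variable. The following is therefore a sketch of the technique, not a copy of the file. `gen_barrier_t`, `gen_barrier_wait()`, and the demo harness are hypothetical names. The cancellation handling that the original performs with `pthread_cleanup_pop()` and `pthread_setcancelstate()` is left out.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdint.h>

typedef struct {
	pthread_mutex_t mutex;
	pthread_cond_t cond;
	uint32_t counter;    /* arrivals still missing in the current round */
	uint32_t max;        /* participants per round */
	uint64_t generation; /* bumped every time the barrier opens */
} gen_barrier_t;

static int gen_barrier_init(gen_barrier_t *b, uint32_t count)
{
	if (count == 0 || pthread_mutex_init(&b->mutex, NULL))
		return -1;
	if (pthread_cond_init(&b->cond, NULL)) {
		pthread_mutex_destroy(&b->mutex);
		return -1;
	}
	b->counter = b->max = count;
	b->generation = 0;
	return 0;
}

/* Returns 1 in the thread that opened the barrier, 0 in the others. */
static int gen_barrier_wait(gen_barrier_t *b)
{
	int opener = 0;

	pthread_mutex_lock(&b->mutex);
	if (--b->counter == 0) {
		b->counter = b->max; /* reset for the next round */
		b->generation++;     /* releases only the waiters of this round */
		pthread_cond_broadcast(&b->cond);
		opener = 1;
	} else {
		uint64_t gen = b->generation;
		/* A fast thread may re-enter and decrement counter again before we
		 * wake up, so wait on the generation, not on the counter. The loop
		 * also absorbs spurious wake-ups. */
		while (gen == b->generation)
			pthread_cond_wait(&b->cond, &b->mutex);
	}
	pthread_mutex_unlock(&b->mutex);
	return opener;
}

/* Demo harness: NTHREADS threads cross the barrier ROUNDS times. */
#define NTHREADS 4
#define ROUNDS 1000

static gen_barrier_t bar;
static pthread_mutex_t stats_lock = PTHREAD_MUTEX_INITIALIZER;
static unsigned arrivals, openers, early_exits;

static void *participant(void *arg)
{
	(void)arg;
	for (unsigned r = 1; r <= ROUNDS; r++) {
		pthread_mutex_lock(&stats_lock);
		arrivals++;
		pthread_mutex_unlock(&stats_lock);

		int opened = gen_barrier_wait(&bar);

		pthread_mutex_lock(&stats_lock);
		if (arrivals < r * NTHREADS)
			early_exits++; /* left round r before everyone arrived */
		if (opened)
			openers++;
		pthread_mutex_unlock(&stats_lock);
	}
	return NULL;
}

static int run_barrier_demo(void)
{
	pthread_t t[NTHREADS];

	if (gen_barrier_init(&bar, NTHREADS))
		return -1;
	for (int i = 0; i < NTHREADS; i++)
		pthread_create(&t[i], NULL, participant, NULL);
	for (int i = 0; i < NTHREADS; i++)
		pthread_join(t[i], NULL);
	return 0;
}
```

Exactly one thread per round sees the counter reach zero. After the demo, `openers` therefore equals `ROUNDS`.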

◆ cleanup_barrier()

static void cleanup_barrier(void *arg)

◆ create_one_wthread()

◆ create_wthreads()

static int create_wthreads(uint64_t *cpu_list, uint32_t n)

Definition at line 277 of file libpfms.c.

```
278{
279 uint64_t v;
280 uint32_t i,k, cpu;
281 int ret = 0;
282
283 for(k=0, cpu = 0; k < n; k++, cpu+= 64) {
284 v = cpu_list[k];
287 ret = create_one_wthread(cpu);
288 if (ret) break;
289 }
290 }
291 }
292
293 if (ret)
295
296 return ret;
297}
```

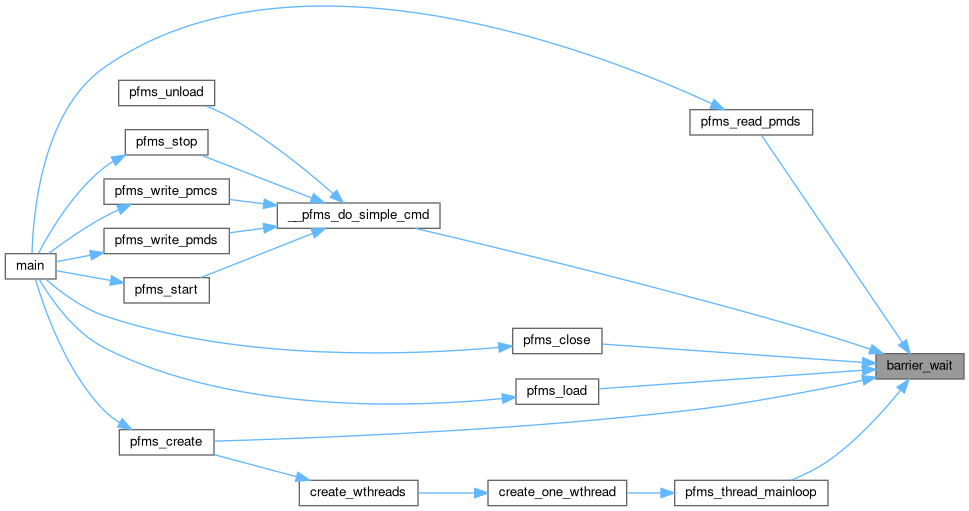

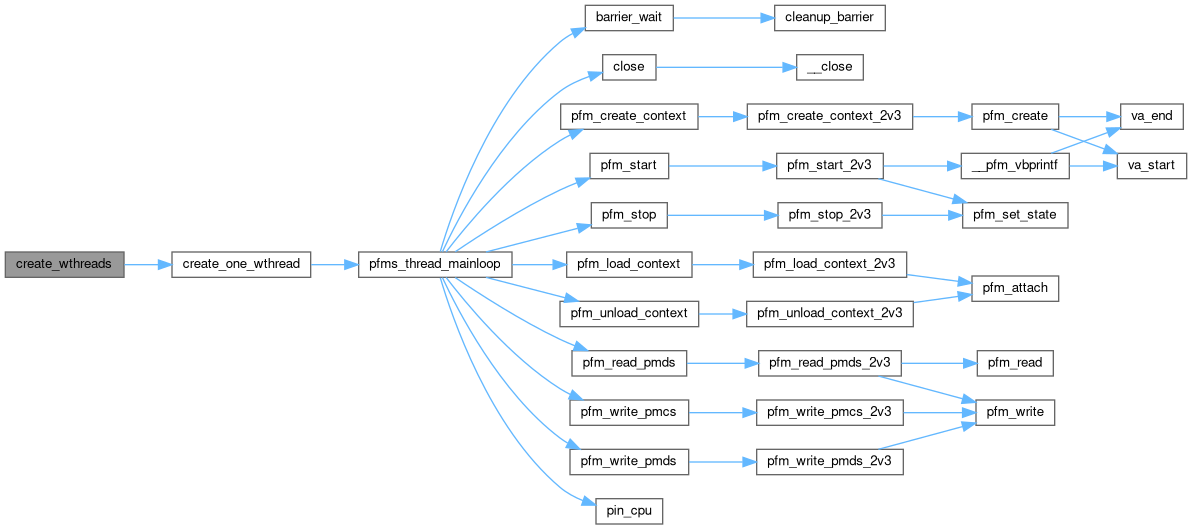

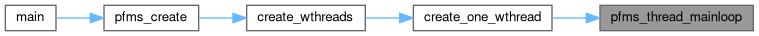

Here is the call graph for this function:

Here is the caller graph for this function:
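Both `create_wthreads()` and `pfms_create()` walk `cpu_list` as an array of 64-bit words, advancing `cpu` by 64 per word. Bit i of word k therefore names CPU `64 * k + i`.

The bit-scanning inner loop is missing from the listing. The following is a minimal sketch of that decoding, not the original loop. The hypothetical helper `cpus_from_mask()` computes each CPU number directly rather than incrementing a shared counter.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/*
 * Decode a multi-word CPU mask: word k covers CPUs 64*k .. 64*k+63 and
 * bit i of word k stands for CPU 64*k + i. Writes up to max_out CPU
 * numbers to out[] in ascending order and returns the total number of
 * set bits (which may exceed max_out).
 */
static size_t cpus_from_mask(const uint64_t *cpu_list, size_t n,
                             uint32_t *out, size_t max_out)
{
	size_t count = 0;

	for (size_t k = 0; k < n; k++) {
		uint64_t v = cpu_list[k];

		/* stop as soon as no set bits remain in this word */
		for (uint32_t i = 0; v != 0; i++, v >>= 1) {
			if (v & 0x1) {
				if (count < max_out)
					out[count] = (uint32_t)(k * 64 + i);
				count++;
			}
		}
	}
	return count;
}
```

Passing `max_out` as 0 (and `out` as NULL) only counts the set bits. That count is the number of CPUs `pfms_create()` accumulates in `num`.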

◆ pfms_close()

int pfms_close(void *desc)

Definition at line 608 of file libpfms.c.

```
609{
610 size_t k;
612 int ret;
613
614 if (desc == NULL) {
616 return -1;
617 }
619
622 return -1;
623 }
624
628 sem_post(&tds[k].cmd_sem);
629 }
630 }
632
633 ret = 0;
634
635 pthread_mutex_lock(&tds_lock);
636 /*
637 * check for errors
638 */
643 }
646 }
647 }
648
649 pthread_mutex_unlock(&tds_lock);
650
651 free(s);
652
653 /*
654 * XXX: we cannot undo close
655 */
656 return ret ? -1 : 0;
657}
```

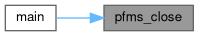

Here is the call graph for this function:

Here is the caller graph for this function:

◆ pfms_create()

int pfms_create(uint64_t *cpu_list, size_t n, pfarg_ctx_t *ctx, pfms_ovfl_t *ovfl, void **desc)

Definition at line 327 of file libpfms.c.

```
328{
329 uint64_t v;
331 uint32_t num, cpu;
333 int ret;
334
335 if (cpu_list == NULL || n == 0 || ctx == NULL || desc == NULL) {
337 return -1;
338 }
339
342 return -1;
343 }
344
345 *desc = NULL;
346
347 /*
348 * XXX: assuming CPU are contiguously indexed
349 */
350 num = 0;
351 for(k=0, cpu = 0; k < n; k++, cpu+=64) {
352 v = cpu_list[k];
354 if (v & 0x1) {
357 return -1;
358 }
359 num++;
360 }
361 }
362 }
363
364 if (num == 0)
365 return 0;
366
370 return -1;
371 }
372 s->ncpus = num;
373
374 printf("%u-way session\n", num);
375
376 /*
377 * +1 to account for main thread waiting
378 */
380 if (ret) {
382 goto error_free;
383 }
384
385 /*
386 * lock thread descriptor table, no other create_session, close_session
387 * can occur
388 */
389 pthread_mutex_lock(&tds_lock);
390
392 goto error_free_unlock;
393
394 /*
395 * check all needed threads are available
396 */
397 for(k=0, cpu = 0; k < n; k++, cpu += 64) {
398 v = cpu_list[k];
400 if (v & 0x1) {
403 goto error_free_unlock;
404 }
405
406 }
407 }
408 }
409
410 /*
411 * send create context order
412 */
413 for(k=0, cpu = 0; k < n; k++, cpu += 64) {
414 v = cpu_list[k];
416 if (v & 0x1) {
420 sem_post(&tds[cpu].cmd_sem);
421 }
422 }
423 }
425
426 ret = 0;
427
428 /*
429 * check for errors
430 */
434 if (ret)
435 break;
436 }
437 }
438 /*
439 * undo if error found
440 */
446 sem_post(&tds[k].cmd_sem);
447 }
448 /* mark as free */
450 }
451 }
452 }
453 pthread_mutex_unlock(&tds_lock);
454
456
457 return ret ? -1 : 0;
458
459error_free_unlock:
460 pthread_mutex_unlock(&tds_lock);
461
462error_free:
463 free(s);
464 return -1;
465}
```

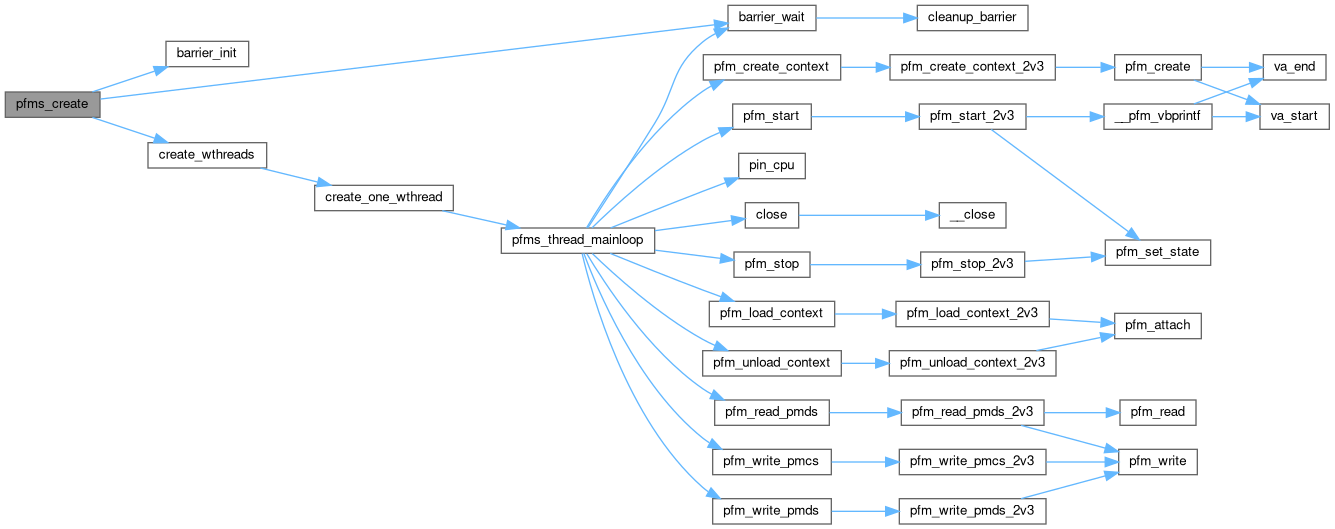

Here is the call graph for this function:

Here is the caller graph for this function:
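`pfms_create()` holds `tds_lock` while it checks that every requested CPU's worker thread is available. On error it marks threads as free again, so that two sessions cannot claim the same CPU. The lines that actually test and set thread ownership are not shown in the listing.

The following sketches the same all-or-nothing reservation under a mutex. `slot_owner[]`, `MAX_CPUS`, `reserve_cpus()`, and `release_cpus()` are all hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_CPUS 8 /* hypothetical table size */

static void *slot_owner[MAX_CPUS]; /* NULL means the CPU's worker is free */
static pthread_mutex_t slot_lock = PTHREAD_MUTEX_INITIALIZER;

/* Reserve every CPU in cpus[] for session s, or none of them. */
static int reserve_cpus(void *s, const uint32_t *cpus, size_t n)
{
	size_t k;
	int ret = 0;

	pthread_mutex_lock(&slot_lock);
	/* pass 1: check availability without modifying anything */
	for (k = 0; k < n; k++) {
		if (cpus[k] >= MAX_CPUS || slot_owner[cpus[k]] != NULL) {
			ret = -1;
			break;
		}
	}
	/* pass 2: claim, only if every CPU was free */
	if (ret == 0)
		for (k = 0; k < n; k++)
			slot_owner[cpus[k]] = s;
	pthread_mutex_unlock(&slot_lock);
	return ret;
}

/* Mark every CPU owned by session s as free again. */
static void release_cpus(void *s)
{
	pthread_mutex_lock(&slot_lock);
	for (size_t k = 0; k < MAX_CPUS; k++)
		if (slot_owner[k] == s)
			slot_owner[k] = NULL;
	pthread_mutex_unlock(&slot_lock);
}
```

The check and the claim run in separate passes under one lock. This makes the reservation atomic with respect to any other session calling `reserve_cpus()` concurrently.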

◆ pfms_initialize()

int pfms_initialize(void)

Definition at line 300 of file libpfms.c.

```
301{
302 printf("cpu_t=%zu thread=%zu session_t=%zu\n",
306
307 ncpus = (uint32_t)sysconf(_SC_NPROCESSORS_ONLN);
310 return -1;
311 }
312
314
315 /*
316 * XXX: assuming CPU are contiguously indexed
317 */
321 return -1;
322 }
323 return 0;
324}
```

Definition: libpfms.c:40

Definition: libpfms.c:47

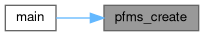

Here is the caller graph for this function:
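`pfms_initialize()` sizes its per-CPU state from `sysconf(_SC_NPROCESSORS_ONLN)`. The `XXX` comment warns that it assumes online CPUs are numbered contiguously from 0.

The following is a minimal sketch of that initialization. The hypothetical `struct per_cpu` stands in for `pfms_thread_t`, and `init_per_cpu_table()` is likewise an illustrative name.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <unistd.h>

struct per_cpu {          /* hypothetical stand-in for pfms_thread_t */
	uint32_t cpu;
	int ret;
};

static uint32_t n_online;
static struct per_cpu *table;

static int init_per_cpu_table(void)
{
	long n = sysconf(_SC_NPROCESSORS_ONLN);

	if (n < 1)            /* sysconf() returns -1 on failure */
		return -1;
	n_online = (uint32_t)n;

	/* Indexed by CPU number: only correct if the online CPUs are 0..n-1.
	 * With CPU 1 offline on a 4-CPU machine, n is 3 but CPU 3 is online. */
	table = calloc(n_online, sizeof(*table));
	if (table == NULL)
		return -1;
	for (uint32_t i = 0; i < n_online; i++)
		table[i].cpu = i;
	return 0;
}
```

Detecting a sparse numbering would need extra information, such as `sysconf(_SC_NPROCESSORS_CONF)` or the mask from `sched_getaffinity()`. The sketch, like the original, does not attempt it.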

◆ pfms_load()

int pfms_load(void *desc)

Definition at line 468 of file libpfms.c.

```
469{
470 uint32_t k;
472 int ret;
473
474 if (desc == NULL) {
476 return -1;
477 }
479
482 return -1;
483 }
484 /*
485 * send create context order
486 */
490 sem_post(&tds[k].cmd_sem);
491 }
492 }
493
495
496 ret = 0;
497
498 /*
499 * check for errors
500 */
504 if (ret) {
506 break;
507 }
508 }
509 }
510
511 /*
512 * if error, unload all others
513 */
519 sem_post(&tds[k].cmd_sem);
520 }
521 }
522 }
523 }
524 return ret ? -1 : 0;
525}
```

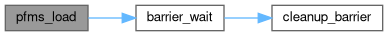

Here is the call graph for this function:

Here is the caller graph for this function:
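`pfms_load()` first lets every CPU attempt the load, then checks the results. If any attempt failed, it sends an unload to the other CPUs ("if error, unload all others").

The following sketches the same two-phase rollback. It runs sequentially, whereas in libpfms each attempt runs in its own worker thread. `apply_all_or_undo()` and the `demo_*` callbacks are hypothetical, and this version undoes only the CPUs whose attempt succeeded.

```c
#include <assert.h>
#include <stddef.h>

/* Per-CPU operation; returns 0 on success (hypothetical callback type). */
typedef int (*cpu_op)(size_t cpu, void *ctx);

/*
 * Phase 1: try do_op on every CPU and record each result in rets[].
 * Phase 2: if any attempt failed, run undo_op on the CPUs that succeeded.
 */
static int apply_all_or_undo(size_t n, cpu_op do_op, cpu_op undo_op,
                             void *ctx, int *rets)
{
	size_t k;
	int failed = 0;

	for (k = 0; k < n; k++) {
		rets[k] = do_op(k, ctx);
		if (rets[k])
			failed = 1;
	}
	if (!failed)
		return 0;
	for (k = 0; k < n; k++)
		if (rets[k] == 0)
			(void)undo_op(k, ctx); /* best effort: result ignored */
	return -1;
}

/* Demo state for exercising the helper. */
struct demo {
	int loaded[8];
	int fail_at; /* CPU whose load fails, or -1 */
	int undos;
};

static int demo_load(size_t cpu, void *p)
{
	struct demo *d = p;

	if ((int)cpu == d->fail_at)
		return -1;
	d->loaded[cpu] = 1;
	return 0;
}

static int demo_unload(size_t cpu, void *p)
{
	struct demo *d = p;

	d->loaded[cpu] = 0;
	d->undos++;
	return 0;
}
```

With four CPUs and the load failing on CPU 2, the helper unloads CPUs 0, 1 and 3, then returns -1.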

◆ pfms_read_pmds()

int pfms_read_pmds(void *desc, pfarg_pmd_t *pmds, uint32_t n)

Definition at line 660 of file libpfms.c.

```
661{
663 uint32_t k, pmds_per_cpu;
664 int ret;
665
666 if (desc == NULL) {
668 return -1;
669 }
671
674 return -1;
675 }
678 return -1;
679 }
680 pmds_per_cpu = n / s->ncpus;
681
683
689 sem_post(&tds[k].cmd_sem);
690 pmds += pmds_per_cpu;
691 }
692 }
694
695 ret = 0;
696
697 /*
698 * check for errors
699 */
703 if (ret) {
705 break;
706 }
707 }
708 }
709 /*
710 * cannot undo pfm_read_pmds
711 */
712 return ret ? -1 : 0;
713}
```

Here is the call graph for this function:

Here is the caller graph for this function:
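`pfms_read_pmds()` treats the caller's `pmds` array as one equal slice per CPU. It computes `pmds_per_cpu = n / s->ncpus` and advances `pmds` by that amount after posting each CPU's command.

The checks before the division are not fully shown. The divisibility test below is an assumption about what a caller must guarantee, not a copy of them. `pmd_t` is a hypothetical stand-in for `pfarg_pmd_t`, and `slice_pmds()` is likewise illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {          /* hypothetical stand-in for pfarg_pmd_t */
	uint16_t reg_num;
	uint64_t reg_value;
} pmd_t;

/*
 * Split pmds[0..n) into ncpus consecutive slices of equal length and store
 * the start of slice k in slices[k]. Returns the slice length, or -1 if n
 * is not a multiple of ncpus (the remainder would otherwise be ignored).
 */
static int slice_pmds(pmd_t *pmds, uint32_t n, uint32_t ncpus, pmd_t **slices)
{
	uint32_t per_cpu;

	if (ncpus == 0 || n % ncpus != 0)
		return -1;
	per_cpu = n / ncpus;
	for (uint32_t k = 0; k < ncpus; k++) {
		slices[k] = pmds;   /* CPU k reads its registers into this slice */
		pmds += per_cpu;
	}
	return (int)per_cpu;
}
```

Consider a 4-way session where the caller wants 2 PMDs per CPU. The caller passes n = 8. The k-th CPU visited by the loop then fills entries 2k and 2k+1.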

◆ pfms_start()

int pfms_start(void *desc)

Definition at line 584 of file libpfms.c.

```
585{
587}
```

static int __pfms_do_simple_cmd(pfms_cmd_t cmd, void *desc, void *data, uint32_t n)

Definition: libpfms.c:528

Here is the call graph for this function:

Here is the caller graph for this function:

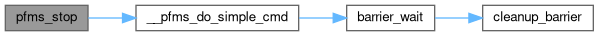

◆ pfms_stop()

int pfms_stop(void *desc)

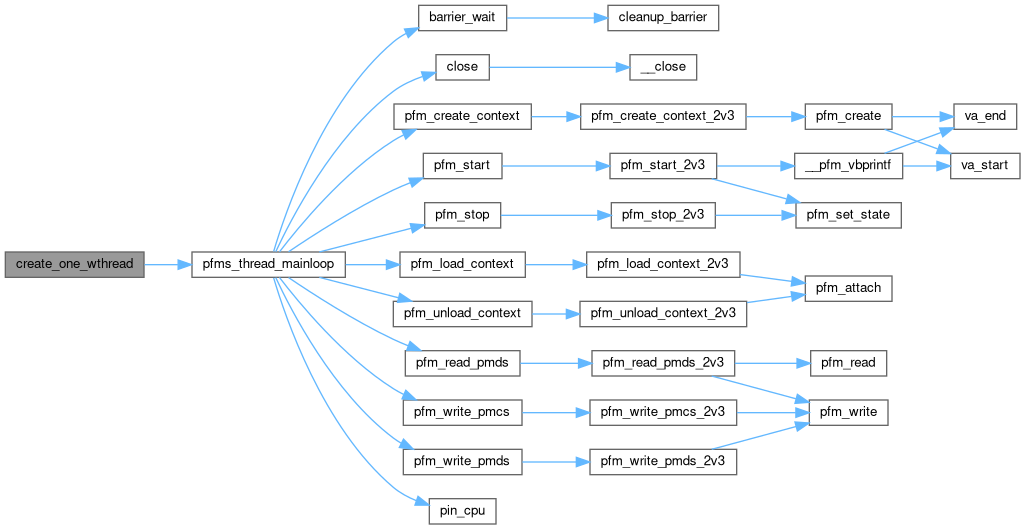

◆ pfms_thread_mainloop()

static void pfms_thread_mainloop(void *arg)

Definition at line 172 of file libpfms.c.

```
173{
175 uint32_t mycpu = (uint32_t)k;
176 pfarg_ctx_t myctx, *ctx;
177 pfarg_load_t load_args;
178 int fd = -1;
179 pfms_thread_t *td;
180 sem_t *cmd_sem;
181 int ret = 0;
182
183 memset(&load_args, 0, sizeof(load_args));
184 load_args.load_pid = mycpu;
185 td = tds+mycpu;
186
187 ret = pin_cpu(mycpu);
189
191
192 for(;;) {
194
195 sem_wait(cmd_sem);
196
199 ret = 0;
200 break;
201
203
204 /*
205 * copy context to get private fd
206 */
207 ctx = td->data;
208 myctx = *ctx;
209
210 fd = pfm_create_context(&myctx, NULL, NULL, 0);
211 ret = fd < 0 ? -1 : 0;
213 break;
214
216 ret = pfm_load_context(fd, &load_args);
218 break;
220 ret = pfm_unload_context(fd);
222 break;
224 ret = pfm_start(fd, NULL);
226 break;
228 ret = pfm_stop(fd);
230 break;
234 break;
238 break;
242 break;
245 ret = close(fd);
246 fd = -1;
247 break;
248 default:
249 break;
250 }
251 td->ret = ret;
252
254
256 }
257}
```

int errno

os_err_t pfm_write_pmds(int fd, pfarg_pmd_t *pmds, int count)

Definition: pfmlib_os_linux_v2.c:528

os_err_t pfm_write_pmcs(int fd, pfarg_pmc_t *pmcs, int count)

Definition: pfmlib_os_linux_v2.c:520

os_err_t pfm_create_context(pfarg_ctx_t *ctx, char *smpl_name, void *smpl_arg, size_t smpl_size)

Definition: pfmlib_os_linux_v2.c:483

os_err_t pfm_load_context(int fd, pfarg_load_t *load)

Definition: pfmlib_os_linux_v2.c:417

os_err_t pfm_read_pmds(int fd, pfarg_pmd_t *pmds, int count)

Definition: pfmlib_os_linux_v2.c:536

Definition: perfmon_v2.h:18

Definition: perfmon_v2.h:68

Definition: perfmon_v2.h:27

Definition: perfmon_v2.h:38

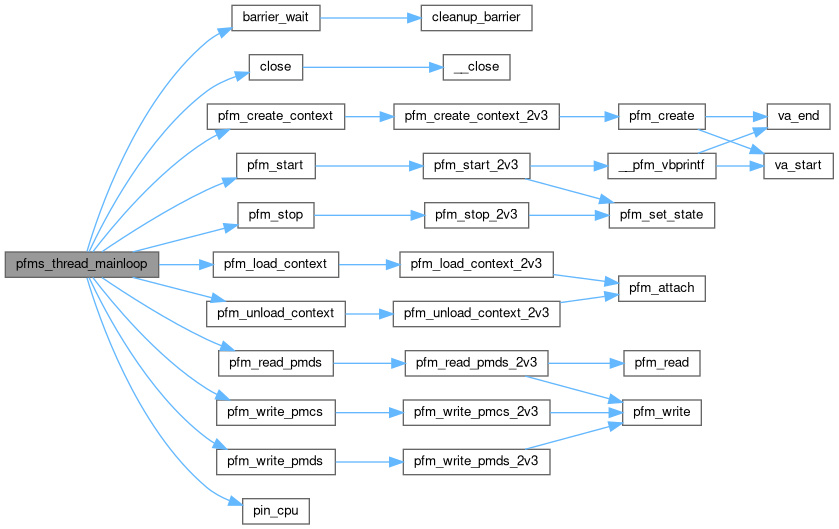

Here is the call graph for this function:

Here is the caller graph for this function:

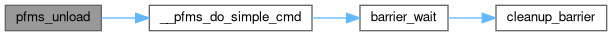
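Each worker in `pfms_thread_mainloop()` keeps its own `fd` across commands:

- a private copy of the context is passed to `pfm_create_context()` to obtain `fd`;
- the load, unload, start and stop calls operate on that `fd`;
- `close(fd)` releases it, and `fd` is then reset to -1;
- the result of each command is stored in `td->ret`.

The following sketch reproduces only that per-thread descriptor handling. The perfmon calls are replaced by hypothetical stand-ins:

- opening `/dev/null` plays the role of `pfm_create_context()`;
- the other commands only check that a descriptor exists, much as a system call on -1 would fail with `EBADF`.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <unistd.h>

/* Same enumerators as pfms_cmd_t in the Enumerations table. */
typedef enum { CMD_NONE, CMD_CTX, CMD_LOAD, CMD_UNLOAD, CMD_WPMCS, CMD_WPMDS,
               CMD_RPMDS, CMD_STOP, CMD_START, CMD_CLOSE } pfms_cmd_t;

/* Execute one command against the per-thread descriptor *fd. */
static int dispatch(int *fd, pfms_cmd_t cmd)
{
	int ret;

	switch (cmd) {
	case CMD_CTX:
		/* stand-in for pfm_create_context(): yields a private descriptor */
		*fd = open("/dev/null", O_RDONLY);
		ret = *fd < 0 ? -1 : 0;
		break;
	case CMD_LOAD:
	case CMD_UNLOAD:
	case CMD_START:
	case CMD_STOP:
	case CMD_WPMCS:
	case CMD_WPMDS:
	case CMD_RPMDS:
		/* stand-in for the pfm_* call on fd: fails without a descriptor */
		ret = *fd < 0 ? -1 : 0;
		break;
	case CMD_CLOSE:
		ret = close(*fd);
		*fd = -1; /* never reuse a closed descriptor */
		break;
	default:
		ret = 0;
		break;
	}
	return ret;
}
```

In the real loop, the return value would be stored in `td->ret` for the controlling thread to inspect.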

◆ pfms_unload()

int pfms_unload(void *desc)

◆ pfms_write_pmcs()

int pfms_write_pmcs(void *desc, pfarg_pmc_t *pmcs, uint32_t n)

◆ pfms_write_pmds()

int pfms_write_pmds(void *desc, pfarg_pmd_t *pmds, uint32_t n)

◆ pin_cpu()

static int pin_cpu(uint32_t cpu)

Definition at line 146 of file libpfms.c.

```
147{
148 uint64_t *mask;
149 size_t size;
150 pid_t pid;
151 int ret;
152
153 pid = syscall(__NR_gettid);
154
156
157 mask = calloc(1, size);
158 if (mask == NULL) {
160 return -1;
161 }
162 mask[cpu>>6] = 1ULL << (cpu & 63);
163
164 ret = syscall(__NR_sched_setaffinity, pid, size, mask);
165
166 free(mask);
167
168 return ret;
169}
```

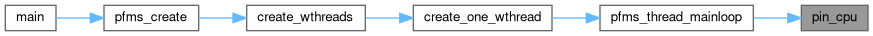

Here is the caller graph for this function:
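`pin_cpu()` builds a zeroed mask of 64-bit words and sets bit `cpu & 63` of word `cpu >> 6`. It then passes the mask to the raw `sched_setaffinity` system call for the calling thread's TID. The line computing `size` is not shown.

The following sketches the same idea using glibc's `cpu_set_t` macros instead of a hand-built mask. It also keeps the word/bit arithmetic for comparison, using `|=` so that several CPUs can be set. `mask_set_cpu()`, `mask_test_cpu()`, `pin_self()`, `first_allowed_cpu()`, and `allowed_cpu_count()` are hypothetical helpers.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sched.h>
#include <stdint.h>

/* Same word/bit arithmetic as mask[cpu>>6] = 1ULL << (cpu & 63). */
static void mask_set_cpu(uint64_t *mask, uint32_t cpu)
{
	mask[cpu >> 6] |= 1ULL << (cpu & 63);
}

static int mask_test_cpu(const uint64_t *mask, uint32_t cpu)
{
	return (int)((mask[cpu >> 6] >> (cpu & 63)) & 1);
}

/* Pin the calling thread to one CPU; pid 0 means the calling thread. */
static int pin_self(int cpu)
{
	cpu_set_t set;

	CPU_ZERO(&set);
	CPU_SET(cpu, &set);
	return sched_setaffinity(0, sizeof(set), &set);
}

/* Lowest CPU in the caller's current affinity mask, or -1. */
static int first_allowed_cpu(void)
{
	cpu_set_t set;

	if (sched_getaffinity(0, sizeof(set), &set) != 0)
		return -1;
	for (int c = 0; c < CPU_SETSIZE; c++)
		if (CPU_ISSET(c, &set))
			return c;
	return -1;
}

/* Number of CPUs in the caller's current affinity mask, or -1. */
static int allowed_cpu_count(void)
{
	cpu_set_t set;

	if (sched_getaffinity(0, sizeof(set), &set) != 0)
		return -1;
	return CPU_COUNT(&set);
}
```

A fixed `cpu_set_t` holds `CPU_SETSIZE` CPUs (1024 in glibc). For larger machines, glibc provides `CPU_ALLOC()` and `CPU_ALLOC_SIZE()` to build a dynamically sized mask.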

Variable Documentation

◆ ncpus

◆ tds

static pfms_thread_t *tds

◆ tds_lock

static pthread_mutex_t tds_lock = PTHREAD_MUTEX_INITIALIZER