News and Announcements

China’s New Homegrown Supercomputer

A Chinese supercomputer from the Sunway company, called the Sunway BlueLight MPP, was unveiled on October 30th. This system uses 8,700 Chinese ShenWei SW1600 processors, or 139,364 cores. The SW1600 is the third-generation CPU from Jiāngnán Computing Research Lab. Operating at 0.975 GHz, the processor delivers a peak floating-point performance of 125 GFLOPS from its 16-core RISC architecture. The CPU is a national key collaborative laboratory project by Jiāngnán Computing Research Lab and High Performance Services & Storage Technologies. The system was assembled at the National Supercomputer System in Jinan, China.

The New York Times caught up with Jack Dongarra for his take on China’s latest effort in HPC. Dongarra noted that the Sunway system achieves 74% of its theoretical peak performance, which is the same percentage of peak achieved by the ORNL Jaguar system, the fastest supercomputer in the United States, but Sunway consumes only a fraction of the energy. This is intriguing, says Dongarra, since one of the principal challenges in exascale computing will be power consumption. Click here to read the entire NYT article.
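For readers who want to see how the quoted figures fit together, here is a minimal back-of-the-envelope sketch in Python. It uses only the numbers given above (8,700 processors, 16 cores per processor, 0.975 GHz, 125 GFLOPS peak per processor, 74% of peak). The function names are ours and purely illustrative, and because the article's figures are rounded, the results are approximations rather than official benchmark values.

```python
# Back-of-the-envelope arithmetic using only the figures quoted in the article.
# Function and constant names are illustrative, not taken from any official source.

PROCESSORS = 8_700                 # ShenWei SW1600 processors in the system
CORES_PER_PROCESSOR = 16           # cores per SW1600
CLOCK_GHZ = 0.975                  # clock rate in GHz
PEAK_GFLOPS_PER_PROCESSOR = 125.0  # quoted peak per processor
FRACTION_OF_PEAK = 0.74            # fraction of theoretical peak cited by Dongarra


def flops_per_cycle_per_core(peak_gflops, cores, clock_ghz):
    """Floating-point operations per core per cycle implied by the peak rating."""
    return peak_gflops / (cores * clock_ghz)


def system_peak_tflops(processors, peak_gflops_per_processor):
    """Theoretical system peak in TFLOPS (1 TFLOPS = 1000 GFLOPS)."""
    return processors * peak_gflops_per_processor / 1000.0


def sustained_tflops(peak_tflops, fraction_of_peak):
    """Sustained rate implied by a given fraction of theoretical peak."""
    return peak_tflops * fraction_of_peak


if __name__ == "__main__":
    per_core = flops_per_cycle_per_core(
        PEAK_GFLOPS_PER_PROCESSOR, CORES_PER_PROCESSOR, CLOCK_GHZ
    )
    peak = system_peak_tflops(PROCESSORS, PEAK_GFLOPS_PER_PROCESSOR)
    sustained = sustained_tflops(peak, FRACTION_OF_PEAK)
    print(f"Implied flops per core per cycle: {per_core:.2f}")
    print(f"Theoretical system peak: {peak:.1f} TFLOPS")
    print(f"Sustained at 74% of peak: {sustained:.1f} TFLOPS")
```

With the quoted figures, this works out to roughly 8 floating-point operations per core per cycle, a theoretical system peak near 1.09 petaflops, and a sustained rate of about 0.80 petaflops.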

Titan: Jaguar Gets the GPU Treatment

Starting this fall, the folks at ORNL will begin upgrading Jaguar’s current Cray XT5 hardware with Cray XK6 blades, which are built on the AMD 16-core processor and NVIDIA Fermi GPUs. Once the process is complete, sometime in late 2012, Jaguar will become Titan, a true giant capable of 20 petaflops or greater and one of the fastest supercomputers on the planet.

The new Cray XK6 blades will replace the existing XT5 hardware in most of Jaguar’s 18,688 nodes, removing the current dual-socket 6-core AMD Opteron CPU configuration and replacing it with a single-socket 16-core “Interlagos” CPU setup. Jaguar’s interconnect will also be upgraded from SeaStar 2 to Gemini. Initial GPU implementations will include 960 NVIDIA “Fermi” GPUs mated to the new XK6 nodes. However, in 2012, ORNL plans to deploy up to 18,000 GPUs based on NVIDIA’s new “Kepler” architecture, which NVIDIA claims will have double the performance per watt of “Fermi,” while staying in the same power envelope.

According to NVIDIA, if the Titan system hits its performance goal, it would be more than two times faster and three times more energy efficient than today’s fastest supercomputer, the K computer, which is housed at the RIKEN Advanced Institute for Computational Science (AICS) in Japan.

SC ’11

This year’s annual Supercomputing conference (SC) will be held November 12–18 at the Washington State Convention Center in Seattle, WA. As usual, we expect to have a considerable presence at the conference with BoFs, papers, posters, etc. Below is a schedule of ICL-related activities. For a complete list of activities, visit the SC ’11 schedule page.

| Day | Activity |
| --- | --- |
| Sunday, 13th | Tutorial – Linear Algebra Libraries for High-Performance Computing: Scientific Computing with Multicore and Accelerators, Room TCC LL5, 8:30am – 5:00pm |
| Monday, 14th | Workshop – Scalable Algorithms for Large-Scale Systems, Grand Hyatt Princessa II, 9:00am – 5:30pm |
| Tuesday, 15th | Paper – Optimizing Symmetric Dense Matrix-Vector Multiplication on GPUs, Room TCC 305, 10:30am – 11:00am |
| | Paper – Parallel Reduction to Condensed Forms for Symmetric Eigenvalue Problems using Aggregated Fine-Grained and Memory-Aware Kernels, Room TCC 305, 11:30am – 12:00pm |
| | BoF – The 2011 HPC Challenge Awards, TCC LL2, 12:15pm – 1:15pm |
| | Poster – New Features of the PAPI Hardware Counter Library, WSCC North Galleria 2nd/3rd Floors, 5:15pm – 7:00pm |
| | BoF – Top500 Supercomputers, TCC 301/302, 5:30pm – 7:00pm |
| Wednesday, 16th | BoF – Open MPI State of the Union, Room TCC 303, 12:15pm – 1:15pm |
| | BoF – The IESP and EESI Efforts, Room TCC 304, 5:30pm – 7:00pm |
| | ICL SC ’11 Alumni Dinner, Steelhead Diner, 95 Pine Street, Seattle, WA, 7:00pm |

The ICL SC ’11 Alumni Dinner will be on Wednesday, November 16th at 7:00pm at Steelhead Diner, 95 Pine Street, Seattle, WA. Please RSVP to Tracy Rafferty by Monday, November 14th. See the restaurant’s website for menus and contact information.

JICS/IGMCS Seminar Series

This fall, staff from many parts of the University will participate in a series co-hosted by the Joint Institute for Computational Science (JICS) and the Interdisciplinary Graduate Minor in Computational Sciences program (IGMCS). These organizations will offer a series of tutorials and seminars designed to provide UTK students, faculty, and staff with practical information about using UTK’s computational science resources, as well as other associated opportunities for participation and collaboration.

Seminars and tutorials in the series will be given by personnel from the National Institute for Computational Sciences (NICS), the Remote Data Analysis and Visualization Center (RDAV), UTK’s Innovative Computing Laboratory (ICL), and OIT’s Research Computing Support team. The series will be held on Thursdays starting at 2pm, in Claxton 233. All interested students, faculty, and staff are welcome and encouraged to attend. For more information, including a detailed list of speakers, please visit the Seminar Series website.

Conference Reports

IESP Workshop – October 2011

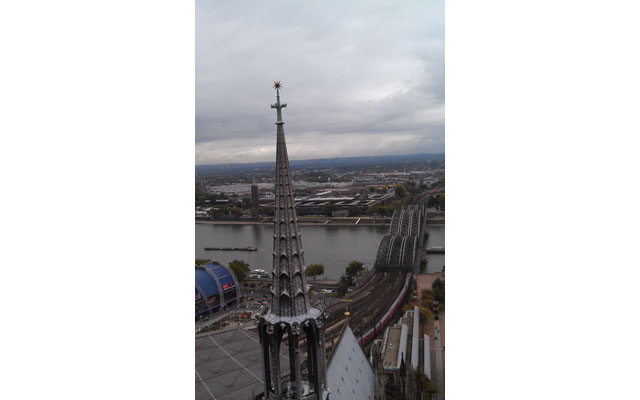

During the first week of October, the International Exascale Software Project (IESP) held its seventh meeting, this time in Cologne, Germany. Once again, ICL (i.e., Jack, Tracy R., Teresa, and Terry) helped to organize and run the meeting, but the agenda was largely the responsibility of the IESP’s EU contingent. Although there were, as usual, reports in the plenary sessions on developments in leading-edge HPC projects from all the participating countries and continents (viz., the US, China, Japan, and the EU), the focus of this meeting was definitely on progress in Europe’s main effort, the European Exascale Software Initiative (EESI).

Presentations on different aspects of EESI revealed the ways in which its forthcoming plan for exascale is both distinctive and aggressive. It is distinctive because it involves cooperation and collaboration with private industry (e.g., aerospace, energy, and biotech) of a kind and to a degree unheard of in US HPC software R&D. It is aggressive because, even under the current difficult economic conditions, the EESI leadership shows every confidence of having mobilized the resources necessary to actually start a comprehensive exascale software project well ahead of anything similar in the United States.

The organizers tasked two of the breakout sessions with extending the work that had come to the fore at the San Francisco meeting in April, with one focused on co-design, and the other focused on the software sustainability lifecycle. But a third breakout session explored the question of whether, in terms of plausible execution models for exascale computing, there were “revolutionary” alternatives to the “evolutionary” approaches that are currently under consideration, and if so, whether or not such a radical paradigm shift might turn out to be indispensable to success. The leaders of all the breakout groups plan to contribute to documents that summarize the thinking of their groups and the conclusions they reached.

IEEE Cluster ’11

ICL’s Peng Du, Teng Ma, and Thomas Herault all attended this year’s IEEE Cluster ’11 conference on September 26-30th in Austin, TX. According to Peng, there were about 200 people in attendance, including some folks from AMD who were kind enough to give Peng et al. a tour of AMD’s nearby campus, where the three observed AMD’s OpenCL test lab and several cluster systems.

Peng presented a paper in the fault tolerance session, “High Performance Dense Linear System Solver with Soft Error Resilience.” Teng Ma presented a paper, “Process Distance-aware Adaptive MPI Collective Communications,” and Thomas presented two papers: “Performance Portability of a GPU Enabled Factorization with the DAGuE Framework” and “Scalability for MPI Runtime Systems.”

Recent Releases

PAPI 4.2.0 Released

The PAPI 4.2.0 release is now available for download.

This release includes:

- The release now builds on Linux platforms using the libpfm4 interface by default.

- New platform support is provided for AMD Bobcat, Intel Sandy Bridge, and ARM Cortex A8 and A9.

- Preliminary support is also provided for MIPS 74K.

- We have reviewed and updated all Doxygen-generated man pages. Doxygen documentation can be found here.

- Several components, particularly the CUDA component, have been updated, and a test environment for component tests has been implemented.

- Two new utilities have been added: papi_error_codes and papi_component_avail.

- A host of bug fixes and code clean-ups have been implemented.

For a summary of changes, read the PAPI 4.2.0 Release Notes. For installation instructions, read the Installation Notes.

Visit the Software Page to download the tarball.

Interview

Hartwig Anzt

Where are you from, originally?

Born in Karlsruhe, in southwest Germany, I loved this place from the very beginning. So I decided to stay there, not only for school, but also for university. But as people told me, there are other beautiful cities in the world, so I spent one year in Ottawa, Canada, studying at the University of Ottawa. But after a very cold and snowy winter, I preferred to return to Karlsruhe for my PhD.

Can you summarize your educational background?

Having finished school in 2004, I started studying Technomathematics at the University of Karlsruhe. During my studies in Canada, my home University was restructured and renamed as the Karlsruhe Institute of Technology. After graduating in 2009, I started working as a research assistant and PhD student at the Institute for Applied and Numerical Mathematics.

Tell us how you first learned about ICL.

In my Diploma thesis, I analyzed the performance of mixed precision iterative refinement solvers on graphics processing units. During my research I found different papers covering this topic that were published by members of the ICL.

What made you want to visit ICL?

While I had already taken a close look at the papers published by the ICL during my Diploma research, I finally met Jack Dongarra at a conference in Iceland. I found a strong match in the topics addressed at the ICL and my personal research interests.

What are your research interests?

I am especially interested in the topic of power-aware high performance computing, and how we can adapt numerics and hardware to optimize the energy consumption of scientific applications. Therefore, my focus is on how to efficiently use hardware platforms. This includes the topic of CUDA programming on the implementation side as well as numerical methods like mixed precision iterative refinement and Krylov subspace solvers for the solution of sparse or dense linear systems. But I am also interested in fault-tolerant computing and multigrid methods.

What are you working on during your visit with ICL?

Currently I am working on the efficient implementation of iterative methods on GPUs. This will eventually also include the utilization of power-saving techniques provided by the hardware devices.

What are your interests/hobbies outside work?

Going into the wilderness feels like coming home! This is why I would consider myself an outdoor freak. No matter whether going by bike, canoe, kayak, rope, or just hiking, I use every opportunity to spend as much time as possible in nature.

I am also very much into sports: soccer, rowing, boxing, muay thai, marathon, triathlon…

Tell us something about yourself that might surprise people.

I know how to save a life.