May 2007

Tele-Immersive Environments for Geographically Distributed Interaction and Communication

During the last 10 years, several disparate technologies and sciences, notably computer vision, computer graphics, distributed computing, and broadband networking, have come together to enable geographically distributed tele-immersive environments in which people can interact and communicate.

We have built such 3D tele-immersive (TI) environments at the University of Illinois at Urbana-Champaign (UIUC) and the University of California, Berkeley (UCB).1 The TI environments deploy large-scale 3D camera networks that capture, digitize, and reconstruct three-dimensional images and sounds of moving people, and they integrate and render the multimedia data from geographically distributed sites into a joint virtual space at each TI site. We deploy 3D TI environments, rather than readily available video conferencing technology, for three reasons: (1) video conferencing displays only individual 2D video streams/pictures on the screen (albeit next to each other), so no joint virtual space can be created and no real interaction is feasible; (2) the 3D environment lets the viewer observe the interaction from any desired viewpoint and with different digital options, such as different scales of participants and different people/scene orientations, which create physically impossible views; and (3) with the 3D TI environment, one can easily integrate synthetic objects and other environments into the current immersive environment.

While there have been other demonstration projects of this nature (e.g., 2, 3, 4, 5, 6), they were restricted to video sequences and special dedicated network connectivity. We aim to create 3D TI environments from commercial off-the-shelf (COTS) components for a common user who does not have the luxury of expensive supercomputing facilities, special-purpose camera hardware, and dedicated networks.

We see an opportunity to use this kind of technology to explore geographically distributed interaction and communication in which physical/body interaction is important. We have explored this interaction in the domain of dance, since dance is a form of physical communication. However, we also see many other applications, such as remote physiotherapy, collaboration between distributed scientists working on common multidimensional data sets, design of artifacts (architectural, mechanical, chemical, and electrical designs), planning for coordinated activities, and the like.

In this article, we describe the individual components of the UIUC and UCB TI environments, as well as the challenges and a few of the solutions that allowed us to stage one of the very first public performances of geographically distributed collaborative dancing, which took place in December 2006. We briefly describe the experiment as well as the lessons learned from this exciting and very successful performance.

Tele-immersion aims to enable users at geographically distributed sites to collaborate in real time in a shared simulated environment as if they were in the same physical room. It combines computer vision, graphics, and network communications to create joint virtual spaces for each participant at each TI site. As Figure 1 shows, each 3D TI site (UIUC and UCB) uses Gigabit Ethernet networking to connect an array of 3D cameras, an infrastructure of service computing PCs, and displays/projectors into a coherent 3D capture and display environment. The TI sites are then connected via Internet2 for bilateral data exchange.

More precisely, the UCB TI site deploys 12 stereo clusters, each consisting of four 2D cameras (48 2D cameras in total) and producing a 3D video stream; eight IR pattern projectors to improve the 3D vision reconstruction; three microphones and four speakers; 13 PCs with two or four CPUs, running Windows XP; and two projectors for passive stereo projection using circular polarization. This hardware provides 360-degree stereo video capture, full-body 3D reconstruction, real-time data recording, real-time rendering, and networking connectivity via Gigabit Ethernet to Internet2.

At UIUC, the TI site deploys eight stereo clusters, one microphone and one speaker, 11 PCs with two or four CPUs running Windows XP, two plasma displays, and two 3D displays. This hardware provides 100-degree stereo video capture, full-body 3D reconstruction, real-time data recording, real-time rendering on multiple displays, and networking connectivity via Gigabit Ethernet to Internet2. Figure 2 shows the experimental laboratories (UIUC laboratory on the left, UCB laboratory on the right), which serve as dance studios for the collaborative dance experiments described below.

To enable real-time interactive dance choreography in tele-immersive environments, multiple challenges need to be overcome. Some of them have been solved; work on others is still in progress.

One of the major challenges is real-time 3D video reconstruction. 3D reconstruction algorithms still lag behind 2D video capabilities in real-time performance because of their algorithmic complexity and inefficient implementations. A second major challenge is robust calibration of the 3D cameras under different light conditions, mobility, and other environmental changes that occur at TI sites.

The current solution for real-time 3D reconstruction relies on an image-based trinocular stereo algorithm7 to calculate the depth information for each pixel. The output of one 3D camera cluster is one stream of 3D frames containing both color and depth information. All cameras are synchronized via hotwires to take shots simultaneously. They are calibrated with a self-calibration algorithm by Svoboda et al.8 Figure 3 shows some of the challenges and solutions/results in the 3D vision space.
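
To make "depth information for each pixel" concrete, the following minimal sketch converts a disparity map into depth using the standard rectified-stereo relation Z = f * B / d and pairs it with color to form one 3D frame. It is a two-camera simplification for illustration only, not the trinocular algorithm cited above; the function names, focal length, baseline, and pixel values are all hypothetical.

```python
def depth_from_disparity(disparity, focal_px, baseline_m):
    """Depth in metres per pixel for a rectified stereo pair.

    Uses Z = f * B / d. Pixels without a valid stereo match (d <= 0)
    map to None, meaning no depth is available there.
    """
    return [
        [focal_px * baseline_m / d if d > 0 else None for d in row]
        for row in disparity
    ]


def make_3d_frame(color, disparity, focal_px, baseline_m):
    """Pair each pixel's color with its depth: one frame of a 3D stream."""
    depth = depth_from_disparity(disparity, focal_px, baseline_m)
    return [list(zip(c_row, z_row)) for c_row, z_row in zip(color, depth)]


# Hypothetical 1x3 image: focal length 400 px, baseline 0.25 m.
color = [[(200, 180, 170), (90, 90, 90), (10, 10, 10)]]
disparity = [[20.0, 50.0, 0.0]]  # the last pixel has no stereo match
frame = make_3d_frame(color, disparity, focal_px=400.0, baseline_m=0.25)
```

Each element of `frame` is a (color, depth) pair, which mirrors the description of a 3D frame as carrying both color and depth information.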

One of the major challenges in TI networking is bandwidth management, together with appropriate semantic networking protocols, to provide Quality of Service (QoS) on top of best-effort, heterogeneous Gigabit Ethernet/Internet2 networks.
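
A back-of-the-envelope calculation illustrates why bandwidth management matters. The sketch below estimates the uncompressed data rate of the 3D streams from the cluster counts given above and the rate of about 10 3D frames per second reported later in this article. The frame resolution and the bytes per pixel are assumptions chosen only for illustration, since the article does not state them.

```python
def raw_stream_mbps(width, height, bytes_per_pixel, fps):
    """Uncompressed data rate of one 3D stream in megabits per second."""
    bits_per_frame = width * height * bytes_per_pixel * 8
    return bits_per_frame * fps / 1e6


# Assumed values (not from the article): 320x240 frames, 3 bytes of color
# plus 2 bytes of depth per pixel. The 10 frames/s figure is the article's.
per_stream = raw_stream_mbps(width=320, height=240, bytes_per_pixel=5, fps=10)

# One 3D stream per stereo cluster: 12 clusters at UCB, 8 at UIUC.
site_rates = {"UCB": 12 * per_stream, "UIUC": 8 * per_stream}

for site, mbps in site_rates.items():
    print(f"{site}: about {mbps:.0f} Mbps uncompressed")
```

Under these assumptions, a single site already produces hundreds of megabits per second before compression, a large share of a Gigabit Ethernet link.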

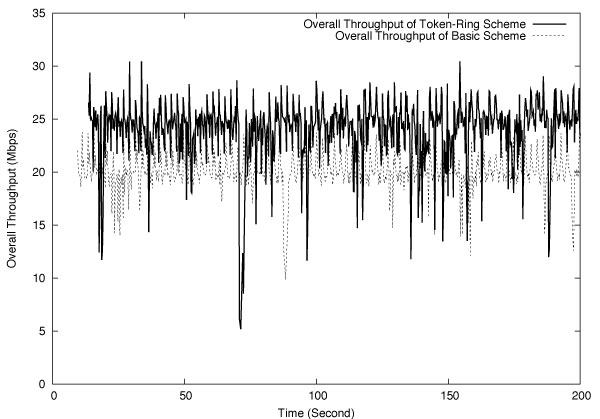

The current networking solution relies on our semantic-aware session data protocol within the service middleware layer, which runs on top of the TCP/IP protocol stack. It performs 3D video stream compression as well as end-to-end multi-tier streaming using bandwidth estimation, context-aware bandwidth allocation, and rate adaptation. The service middleware layer provides the control-plane functions for bandwidth management, such as multi-stream coordination and adaptation algorithms, which depend on the end-to-end Internet2 bandwidth availability and user view selections. The control functions also include display setup, with selection of scene views and screen layouts at the viewer site. Figure 4 shows some of the networking challenges and solutions/results in the semantic networking space.
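
One way to picture bandwidth allocation that depends on both available bandwidth and the user's view selection is a weighted split with per-stream caps. The sketch below is a generic weighted water-filling scheme written for illustration; it is not the authors' protocol. The stream names, the view-derived weights, and the rate caps are hypothetical.

```python
def allocate_bandwidth(streams, available_mbps):
    """Split available bandwidth across streams in proportion to weight.

    streams maps a stream name to (weight, max_rate_mbps). A higher weight
    stands for a camera that matters more for the currently selected view.
    No stream receives more than its own maximum rate; bandwidth freed by
    a capped stream is redistributed among the remaining streams.
    """
    alloc = {name: 0.0 for name in streams}
    active = {n for n, (w, cap) in streams.items() if w > 0 and cap > 0}
    remaining = float(available_mbps)

    while active and remaining > 1e-9:
        total_weight = sum(streams[n][0] for n in active)
        capped = set()
        spent = 0.0
        # First, saturate every stream whose proportional share exceeds its cap.
        for n in active:
            weight, cap = streams[n]
            if remaining * weight / total_weight >= cap:
                alloc[n] = cap
                spent += cap
                capped.add(n)
        if capped:
            remaining -= spent
            active -= capped
        else:
            # Nobody hits a cap: hand out the rest proportionally and stop.
            for n in active:
                alloc[n] = remaining * streams[n][0] / total_weight
            remaining = 0.0
    return alloc


# Hypothetical example: the viewer looks at the dancer from the front.
streams = {"front": (3, 40.0), "side": (2, 100.0), "back": (1, 100.0)}
rates = allocate_bandwidth(streams, available_mbps=120.0)
```

In this example the front stream is capped at its full rate and the leftover bandwidth is shared between the side and back streams in a 2:1 ratio. When the estimated bandwidth changes, calling the function again with the new estimate gives a simple form of rate adaptation.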

In December 2006, we conducted a public dance performance9 in which dancers at the UIUC and UCB laboratories danced jointly in cyberspace, and the jointly rendered visual performance was transmitted to the lobby of the Hearst Mining Building at UCB for an audience to watch. We began planning the collaborative dance experiment with the hypothesis that dance is a form of communication, even when it is conducted in cyberspace. Hence, we posed many questions prior to the performance, and we elaborate on some of them here: (1) How do we create meaning with movement in cyberspace? (2) Is the meaning of dance the same for the audience when seeing physical dancers and virtual dancers? (3) Is the choreography the same when dancing in physical space, in virtual space, and in hybrid spaces? Before we answer these questions, we describe the dance experimental setup and the additional TI technological setup for the performance.

Dancing Experimental Setup: During the experiment, the overall dance choreography consisted of four components:

Figure 5 shows an image of two dancers rendered and shown together during the performance. Both dancers are first in the UCB TI Lab before being joined by a third dancer from UIUC in cyberspace. The performance started with dance segments in cyberspace where the geographically distributed dancers presented, in a synchronized fashion, different types of movements, including meeting, chatting, and hugging in cyberspace; collaborative rowing of a boat; use of standard improvisation scores such as mirroring; following a leader; creating foreground and background visual information; use of contact or virtual contact; and many other movements natural in physical spaces but very different in cyberspace. Once the dance in cyberspace finished, the live dancers continued with their dance choreography in the physical space.

TI Technological Experimental Setup: Before the public performance, we had to perform geometric and photometric calibration at both sites to ensure that the colors, the size of the performers, and the space were coordinated and looked realistic. Once the performance started, our TI protocols transmitted 3D streams between UCB and UIUC to provide a synchronous virtual space at both sites. In addition to the common virtual space, we had voice communication (Voice over IP) at both sites, which was necessary for dance coordination and synchronization. The music to which the performers danced was shared: following an initial synchronization, the start of the music playback was synchronized across the sites, and the playback itself was executed locally in order to avoid any delays. At the UIUC site, we also enabled 2D web cameras to show the live studio scenes in the UCB and UIUC TI studios.
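
The idea of synchronizing only the start of playback and then playing the music locally can be sketched as follows. The article does not describe the actual mechanism, so this is purely an assumed scheme: both sites agree on a start time on a shared reference clock, and each site converts it to its own clock using a clock offset estimated beforehand. All names and numbers are hypothetical.

```python
def local_start_time(agreed_start_ref, clock_offset):
    """Translate a start time on the shared reference clock to the local clock.

    clock_offset is (local clock - reference clock) in seconds, assumed to
    have been estimated during an initial synchronization step.
    """
    return agreed_start_ref + clock_offset


def seconds_until_start(agreed_start_ref, clock_offset, local_now):
    """How long this site should wait before starting local playback."""
    return max(0.0, local_start_time(agreed_start_ref, clock_offset) - local_now)


# Hypothetical numbers: both sites agree to start at t = 1000.0 s on the
# reference clock. Site A's clock runs 0.5 s ahead, site B's 0.25 s behind.
wait_a = seconds_until_start(1000.0, clock_offset=0.5, local_now=998.5)
wait_b = seconds_until_start(1000.0, clock_offset=-0.25, local_now=997.75)
```

Because each site plays its own local copy of the music, no audio samples cross the network during the performance; only the agreed start time has to be exchanged.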

Audience Feedback: The audience received questionnaires on which to share their overall perception. The overall feedback was positive, since the networking performance of the dance in cyberspace was very stable and robust for the 40-minute duration of the performance. The dancers danced in a synchronized fashion, which validated our 3D real-time vision and networking approaches. The strong time-synchronized performance of our system was especially visible when contrasted with the web cameras, which showed a delay of several seconds when delivering the real TI studio scenes. The audience understood that without our TI solutions, i.e., if we had used only web cameras, (a) the dancers would not have been able to dance in a collaborative fashion, (b) the dancers could not have been rendered into a common, joint virtual space, and (c) the dancers would not have been able to experiment with movements as they are used to doing on a real stage. In the TI virtual space, the dancers were able to create a very pleasing set of movements that were truly enjoyable to watch.

The December 2006 performance definitely gave new meaning to collaborative dancing and creative choreography. Through this performance we have learned new lessons that lead us to new symbiotic relationships between creative dance design and new TI technology elements.

TI Technology Lessons Learned: The 3D real-time vision algorithms need further improvement, as we discussed in Section 3 and showed in Figure 3. The dancers had considerable visual “holes,” and their movements appeared slower than real time because the TI system currently cannot reconstruct more than 10 3D frames per second. The network protocols need further improvement in bandwidth and content adaptation. They also need stronger support for extracting and understanding semantic information that comes from the 3D vision algorithms, the dancers, and the audience, such as dancer location and the importance of the presented views to the audience.

Collaborative Dance Lessons Learned: Through this experiment, we have answered the first set of questions discussed above. Movement in virtual spaces makes sense for artists. Thanks to their improvisation skills, they cope very well with the various imperfections of the TI technology, such as delays, bandwidth constraints, and visual holes. In fact, they use these imperfections to their artistic advantage and create very visually pleasing dance segments. However, the experiments also show that dancing in cyberspace is very different from dancing in physical space. Consider virtual touch, for example: when a dancer raises a hand in the cyber environment to touch a virtual object or person and there is no physical object to touch, dancers react in different ways. It has become very clear that a new type of choreography must be created for dancing in TI environments. But it is precisely this new type of creative interaction with the technology that makes it so exciting for dancers and choreographers. The art form of dance choreography, which inherently values the different ways in which individuals move and relate to one another, is a good fit with TI technology, which inherently values the entire body of an individual streaming into cyberspace.

The possibility of arranging bodies/individuals in infinite new ways in relation to each other and to virtual objects, as well as the ability to create entirely new meanings and contexts through this creative coordination, could have a huge impact on the field of choreography and on many other fields that prioritize the study of the body.

3D TI environments are becoming more viable and more affordable. As we have shown, it is possible to build TI environments out of COTS components and use them for geographically distributed interaction and communication. Furthermore, as TI technology matures and advances, we are experiencing a very natural symbiosis between collaborative dancing and TI technology, as shown in our experiment between UCB and UIUC in December 2006. This symbiosis creates feedback in both directions. The TI technology becomes richer in its digital options, allowing dancers to explore more and more physically impossible situations in the virtual spaces and hence to create entirely new choreography designs.