ICL 35th Anniversary

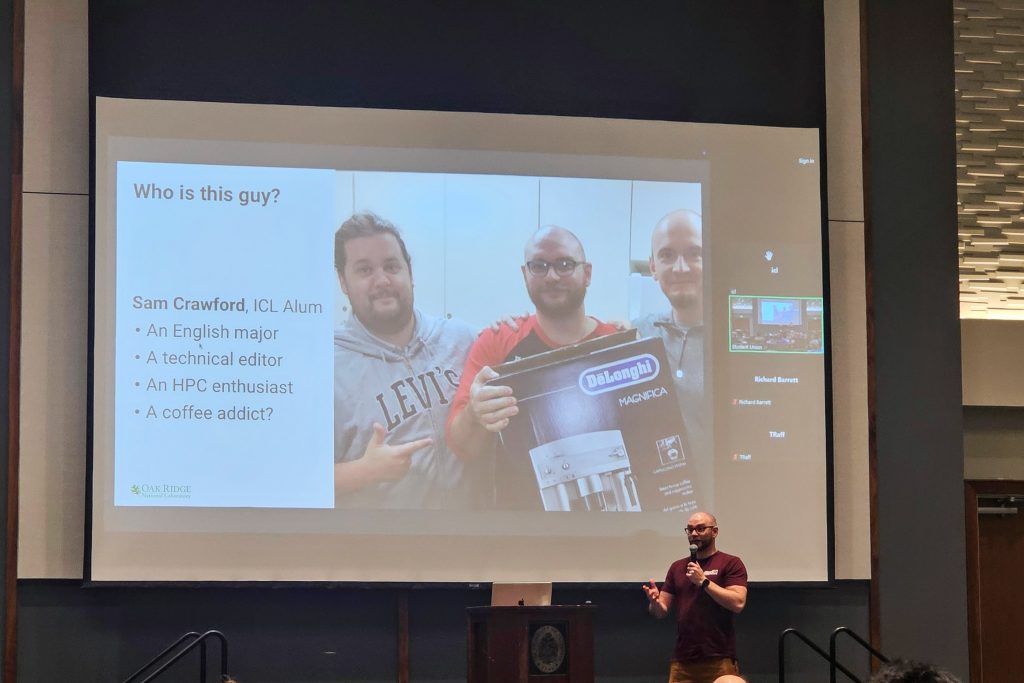

In May 2025, ICL celebrated its 35th year of Innovative Computing with a special anniversary workshop. The event, held on May 29-30, 2025, brought together ICL alumni, current members, and friends for two days of presentations, discussions, and networking opportunities. The agenda featured 38 speakers along with 2 panel sessions and a breakout discussion over the course of the 2-day event. Thank you to everyone who contributed to making this event a memorable success.

Event Photos

Commemorative Slideshow

ICL Students Gain Industry Experience Through Summer Internships

Tatiana Melnichenko is spending the summer at Oak Ridge National Laboratory as a participant in the Science Undergraduate Laboratory Internship (SULI) program. She is working with Dr. William Godoy on advancing performance portability in the Julia programming language.

Tatiana Melnichenko is spending the summer at Oak Ridge National Laboratory as a participant in the Science Undergraduate Laboratory Internship (SULI) program. She is working with Dr. William Godoy on advancing performance portability in the Julia programming language.

Dong Jun Wuon is interning at Amazon as part of the Kuiper Project, which seeks to expand global internet access through low-Earth orbit satellites. As a member of the security team, he is developing a more robust testing framework to evaluate the system’s security, reliability, and performance.

Dong Jun Wuon is interning at Amazon as part of the Kuiper Project, which seeks to expand global internet access through low-Earth orbit satellites. As a member of the security team, he is developing a more robust testing framework to evaluate the system’s security, reliability, and performance.

Jack Dongarra Warns of an HPC Turning Point

ICL founder and Turing Award recipient Jack Dongarra recently penned a compelling opinion piece in The Conversation titled “Challenges to high-performance computing threaten US innovation”, highlighting critical challenges facing U.S. high-performance computing.

In the article, Dongarra emphasizes that:

- HPC is now at a “turning point” as HPC systems face more pressure than ever, with higher demands on the systems for speed, data, and energy, amidst a surge in the importance of artificial intelligence that is having dramatic effects on the landscape.

- The memory-processor performance gap remains a core bottleneck—like “a superfast car stuck in traffic.”

- Energy consumption is growing unsustainable, as Dennard scaling gains have stalled.

- The shift in chip design toward lower-precision AI workloads risks leaving science-driven applications that require 64-bit precision behind.

- Unlike past singular federal HPC funding moments, the U.S. lacks a clear, long-term national strategy—spanning hardware, software, workforce training, and public–private partnerships.

- But there are hopeful signs: investment through the CHIPS and Science Act, momentum behind quantum technologies, and the recent launch of the Vision for American Science and Technology task force are encouraging.

Dongarra concludes that to remain competitive in science, innovation, and national security, the U.S. must commit to a cohesive, long-term HPC strategy.

Read the full article on The Conversation

HPCwire Podcast Discussion

Addison Snell and Doug Eadline discussed Jack’s article in a recent episode of This Week in HPC. The discussion of this topic begins shortly after the 8 minute mark of the episode:

Jack Dongarra Delivers Keynote at ORNL’s Inaugural AI4Science Workshop

Jack Dongarra gave the opening keynote at the first AI4Science Workshop hosted by Oak Ridge National Laboratory in April 2025. The workshop brought together researchers exploring the intersection of artificial intelligence and scientific computing. In his keynote, Dongarra reflected on the evolution of high-performance computing and offered insights into how AI-driven methods are shaping the future of scientific discovery.

Congratulations

Heike Jagode named Recipient of the 2025 TCE Research Award for Research Faculty

ICL is proud to report that Heike Jagode was selected by TCE as the inaugural recipient of the 2025 TCE Research Award for Research Faculty. Heike received the award at the TCE Awards Dinner on May 1 at Bridgewater Place.

At the same event, ICL alum Scott Wells was recognized with the staff Inspirational Leadership Award. Congratulations to Heike and Scott!

At the same event, ICL alum Scott Wells was recognized with the staff Inspirational Leadership Award. Congratulations to Heike and Scott!

Conference Reports

State-of-the-Art GPU Numerical Computing: Honoring Dr. Stanimire Tomov

The University of Tennessee’s Innovative Computing Laboratory (ICL) hosted a two-day workshop honoring the life, legacy, and scientific contributions of Dr. Stanimire Tomov. Stan was an extraordinary researcher, a respected leader, and a cherished member of ICL for over two decades. He passed away on October 11, 2024, leaving a profound and lasting impact on the fields of GPU computing and dense linear algebra.

The University of Tennessee’s Innovative Computing Laboratory (ICL) hosted a two-day workshop honoring the life, legacy, and scientific contributions of Dr. Stanimire Tomov. Stan was an extraordinary researcher, a respected leader, and a cherished member of ICL for over two decades. He passed away on October 11, 2024, leaving a profound and lasting impact on the fields of GPU computing and dense linear algebra.

In tribute to his contributions and spirit, State-of-the-Art GPU Numerical Computing brought together colleagues, collaborators, and former students for two days of technical talks and shared memories. The workshop featured presentations on cutting-edge advances in numerical computing, along with time to reflect on Stan’s influence, mentorship, and friendship.

Stan led the MAGMA project and served as a research director at ICL before joining NVIDIA in 2024. His technical brilliance, collaborative spirit, and deep kindness helped shape the culture and success of ICL. This workshop was an opportunity to celebrate his legacy and continue the conversations he inspired.

ISC 2025

Held June 10–13 in Hamburg, Germany, ISC High Performance 2025 drew 3,585 attendees and 195 exhibitors from 54 countries, marking the largest and most successful edition in its 40‑year history under the theme “Connecting the Dots”. The event featured keynotes, panels, workshops, an expansive exhibit floor, and networking sessions underscoring the rapidly evolving landscape of HPC, AI, and quantum computing.

TOP500 Results: El Capitan Holds the Crown

Photo: PHILIP LOEPER

The 65th edition of the TOP500 list was unveiled at ISC2025. Together with updated HPCG, HPL-MxP, and Green500 lists, these rankings provide a comprehensive view of the current supercomputing landscape:

TOP500 (HPL)

- El Capitan (LLNL) once again secured #1, achieving an impressive 1.742 exaFLOPS using its 11 million+ cores powered by AMD EPYC CPUs and MI300A GPUs connected via Slingshot‑11 interconnect

- Frontier (ORNL) maintained its position at #2 with 1.353 exaFLOPS, leveraging AMD 3rd‑gen EPYC CPUs and MI250X GPUs across nearly 8.7 million cores

- Aurora (Argonne) took #3, hitting 1.012 exaFLOPS with its Intel Xeon Max CPUs and Data Center GPU Max accelerators

HPCG

- El Capitan is the new leader on the HPCG benchmark with 17.1 HPCG-PFlop/s.

- Supercomputer Fugaku, the long-time leader, is now in second position with 16 HPCG-PFlop/s.

- The DOE system Frontier at ORNL remains in the third position with 14.05 HPCG-PFlop/s.

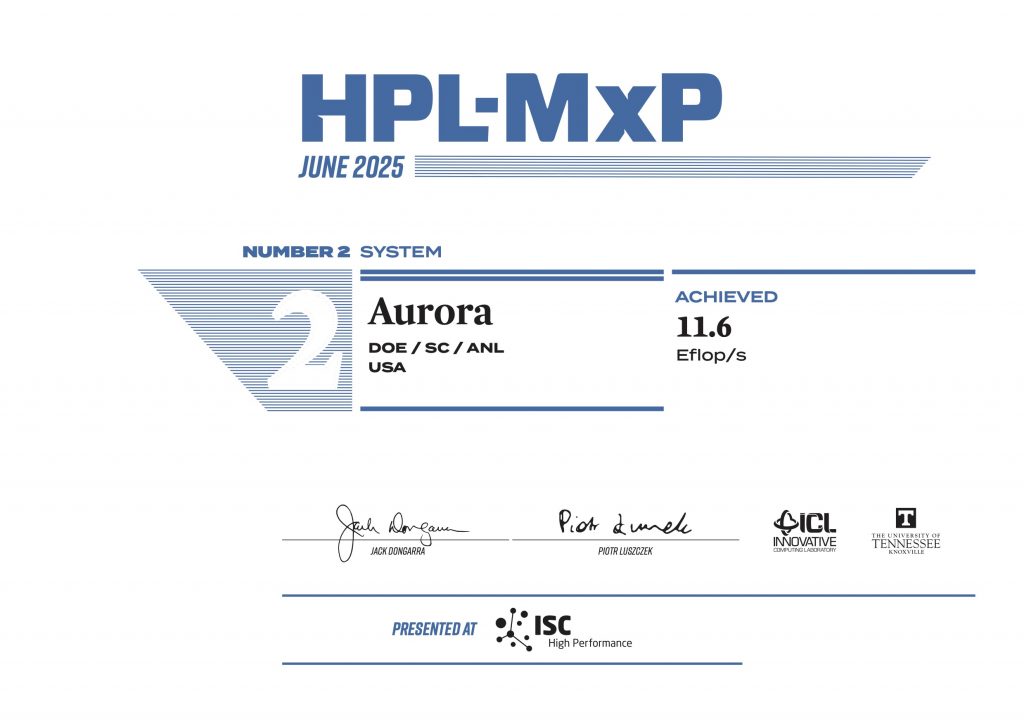

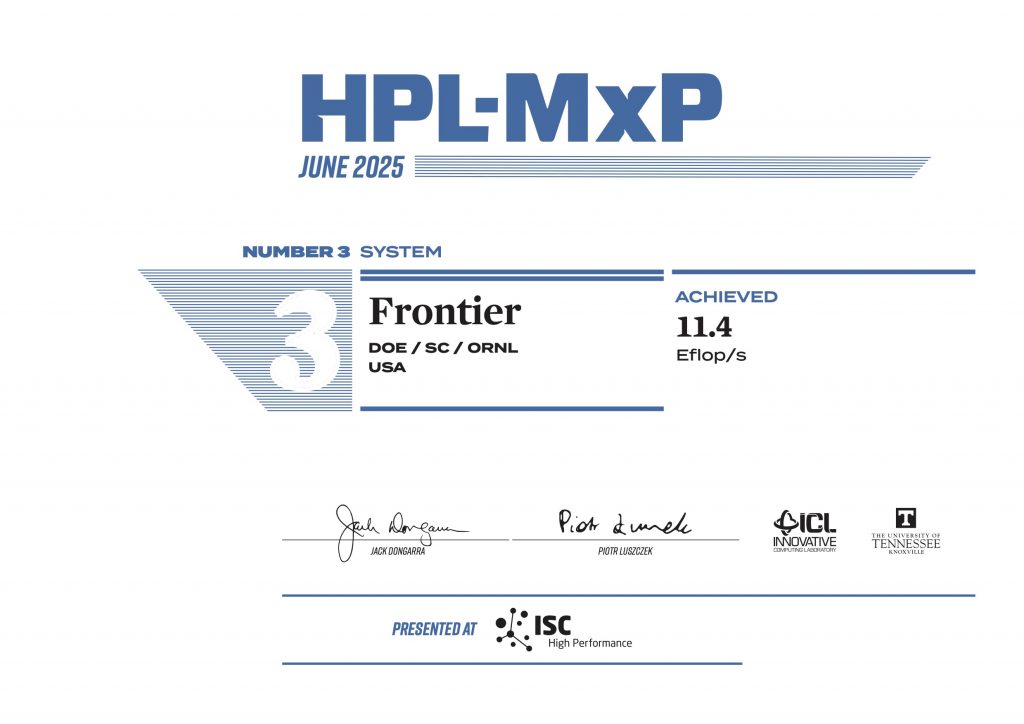

HPL-MxP

- El Capitan led again with 16.7 exaFLOPS, showcasing its aptitude for AI-style mixed‑precision workloads

- Aurora reached 11.6 exaFLOPS, securing the second spot

- Frontier followed closely with 11.4 exaFLOPS

El Capitan delivers across all key benchmarks—HPL, HPCG, and HPL‑MxP—underscoring its excellence in double-precision, memory-bound, and AI/mixed-precision workloads. Notably, El Capitan achieved the No. 26 spot on the GREEN500 with an energy efficiency score of 58.89 GFlops/Watt. Frontier, No. 2 on the TOP500, produced an energy efficiency score of 54.98 GFlops/Watt for this GREEN500 list. Both systems demonstrate that it is possible to achieve immense computational power while also prioritizing energy efficiency.

ICL at ISC: Advancing Mixed-Precision HPC

On June 13, ICL researchers Jack Dongarra, Piotr Luszczek, and Hartwig Anzt hosted a technical workshop titled “Modern Mixed‑Precision Methods: Hardware Perspectives, Algorithms, Kernels, and Solvers.” This half‑day session (9 AM–1 PM in Hall Y10) dove into the rapidly expanding realm of mixed- and multi-precision computing—a critical intersection between HPC and AI.

2025 Jack Dongarra Early Career Award

The 2025 Jack Dongarra Early Career Award, presented on June 10, was awarded to Dr. Lin Gan of Tsinghua University. The committee recognized his exceptional work in scalable algorithm development, performance optimization, and FPGA‑based acceleration frameworks. Dr. Gan delivered his award lecture titled “Exploring the Performance Potential: Lessons from Sunway and Reconfigurable Architectures,” sharing insights into high-performance reconfigurable computing systems.

Free Video Library and Open Access

The entire ISC Invited Program, which was recorded during ISC is now available as an on-demand library for the broader research community. To access them, you will need to register first.

All research papers accepted by the committee for presentation at ISC have now been published in IEEE Xplore as open-access proceedings. One can also find all research papers listed on the ISC event app (Swapcard), along with videos of each speaker presenting their paper, discussing the challenges they address, and outlining their proposed solutions. Finally, photos from the event are available on the ISC flickr account.

Recent Releases

PAPI 7.2.0

PAPI 7.2.0 is now available as the next major release. This release officially introduces two new components: (1) rocp_sdk: Supports AMD GPUs and APUs via the ROCprofiler-SDK interface; (2) topdown: Provides proper support for Intel topdown metrics. PAPI 7.2.0 also introduces preset events for non-CPU devices, starting with CUDA events. In addition, component code has been extended to include a statistics qualifier (e.g., for CUDA events), offering more concise and functional output in the papi_native_avail utility.

Additional Major Changes are:

Component Updates:

- RAPL: Support for Intel Emerald Rapids, and Intel Comet Lake S/H CPUs.

- ROCM/ROCP_SDK:

- Numerous improvements to error handling, shutdown behavior, and initialization in

rocmandrocp_sdkcomponents. - Added multiple libhsa search paths.

- Correct handling if all events are removed.

- Improved interoperability between

rocmandrocp_sdkcomponents.

- Numerous improvements to error handling, shutdown behavior, and initialization in

- CUDA:

- Added

statisticsqualifier to CUDA events, offering more concise and functional output for thepapi_native_availutility. - Added

partially enabledsupport for systems with multiple compute capabilities: <7.0, =7.0, >7.0. - Support for MetricsEvaluator API (CUDA ≥ 11.3).

- Fixed

cuptid_initreturn value and potential overflows.

- Added

- Sysdetect, Coretemp, Infiniband, NVML, Net, ROCM_SMI:

- Improved robustness and memory safety across components.

coretemp:Enabled support for event multiplexing.

- Topdown:

- New component to interface with Intel’s

PERF_METRICSMSR. - Converts raw metrics into user-friendly percentages.

- Provides access to topdown metrics on supported CPUs: heterogeneous Intel CPUs (e.g., Raptor Lake), Sapphire Rapids, Alder Lake, Granite Rapids.

- Integrated

librseqto protectrdpmcinstruction execution.

- New component to interface with Intel’s

Preset Events & CAT Updates:

- AMD Family 17h: Corrected presets for IC accesses/misses.

- ARM Cortex A57/A72/A76: Added/updated preset support.

- CAT: Added scalar operations to vector-FLOPs Benchmarks.

Acknowledgements

This release is the result of contributions from many people. The PAPI team would like to extend a special thank you to Vince Weaver, Willow Cunningham, Stephane Eranian (for libpfm4), William Cohen, Steve Kaufmann, Dandan Zhang, Yoshihiro Furudera, Akio Kakuno, Richard Evans, Humberto Gomes, and Phil Mucci.

For more information, see the PAPI Github page.

Ginkgo 1.10.0

The Ginkgo team is proud to announce the new Ginkgo minor release 1.10.0.

Ginkgo is a high-performance numerical linear algebra library for many-core systems, with a focus on solution of sparse linear systems. It is implemented using modern C++, with GPU kernels implemented for NVIDIA, AMD and Intel GPUs.

The highlights of the new release include:

- Support for bfloat16

- Mixed precision support for distributed matrices

- Pipelined CG solver, and Chebyshev iteration solver

- OpenMP implementation of merge-path based SpMV

The release contains many more additions, improvements, and bug fixes. A full list of the release changes can be found on the Ginkgo Github page.

Interview

Zhuowei Gu

Where are you from originally?

I am originally from Jiangsu, China.

Can you summarize your educational and professional background?

I completed my undergraduate studies at Miami University in Ohio, and earned my master’s degree at New York University. I also spent a year as a research assistant at Columbia University. Currently, I am pursuing a PhD at Saint Louis University, focusing on high-performance computing (HPC) and artificial intelligence (AI).

How did you first hear about ICL, and what made you want to work here?

I first heard about ICL from my advisor, Dr. Qinglei Cao. I’ve seen ICL’s impressive work featured at many top conferences, and I believe there is no better place to dive into HPC research. That’s why I was eager to join ICL.

What are your main research interests and what are you working on while at ICL?

My main research interests lie at the intersection of HPC and AI. I want to explore how HPC can better support large AI models. During my time at ICL, I am focusing on using PaRSEC to accelerate Transformer training, building libraries and AI tools based on task-based runtime systems.

What are your interests/hobbies outside of work?

Outside of work, I enjoy skiing, playing piano and violin, billiards, and I make sure to exercise daily. I’m also a big fan of outdoor activities like hiking.

Tell us something about yourself that might surprise people.

I co-own a small fried chicken food truck in New Jersey with my friends. The business isn’t exactly booming, but it’s a fun side project!