News and Announcements

SIAM/ACM Prize in Computational Science and Engineering

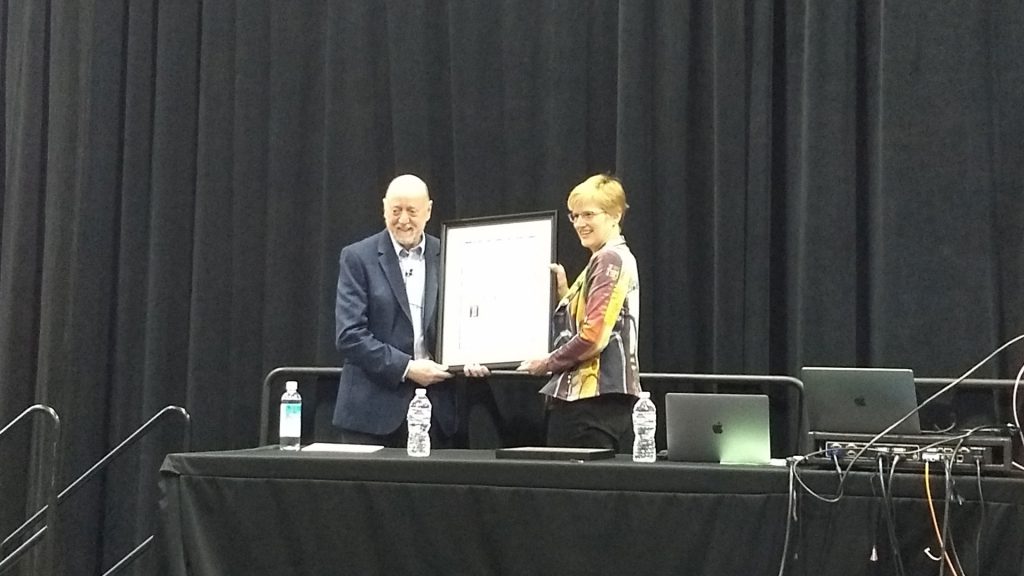

On February 25, 2019, ICL founder and director Jack Dongarra received the SIAM/ACM Prize in Computational Science and Engineering at SIAM CSE19 in Spokane, Washington. This prestigious accolade is awarded every two years by SIAM and ACM in the area of computational science in recognition of outstanding contributions to the development and use of mathematical and computational tools and methods for the solution of science and engineering problems.

Jack received this award for his key role in the development of software and software standards, software repositories, performance and benchmarking software, and in community efforts to prepare for the challenges of exascale computing—especially in adapting linear algebra infrastructure to emerging architectures.

To that end, Jack gave a talk that described the evolution of singular value decomposition (SVD) algorithms for dense matrices and demonstrated the effects of these changes over time by testing various historical and current implementations on a common, modern, multi-core machine and a distributed computing platform. The resulting algorithmic and implementation improvements have increased the speed of SVD by several orders of magnitude while using up to 40× less energy.

VI-HPS Workshop in Knoxville this April

Register by April 2, 2019 for the 31st VI-HPS Tuning Workshop, which will be held at ICL on April 9–12, 2019. This is the latest in a series of hands-on, practical workshops hosted by tool developers for parallel application developers. The Virtual Institute – High Productivity Supercomputing (VI-HPS) is an initiative promoting the development, integration, and use of HPC tools.

Participants are encouraged to bring their own parallel application codes to the workshop to analyze and tune their performance with the help of experts. The workshop will provide an overview of the VI-HPS parallel programming tools suite; explain the tools’ functionality and how to use them effectively; and offer hands-on experience and expert assistance for their use.

Presentations and hands-on sessions are planned for the following topics:

- TAU performance system;

- Score-P instrumentation and measurement;

- Scalasca automated trace analysis;

- Vampir interactive trace analysis;

- MAQAO performance analysis and optimization;

- FORGE/MAP+PR profiling and performance reports; and

- Paraver/Extrae/Dimemas trace analysis and performance prediction.

Click here for the full program or to register for the workshop. Remember, registrations are due on Tuesday, April 2, 2019!

Conference Reports

SIAM Conference on Computational Science and Engineering

On February 25th through March 1st, no less than a dozen members of ICL’s research team attended the SIAM Conference on Computational Science and Engineering (SIAM CSE) in Spokane, Washington, where the city was experiencing record snow and freezing temperatures. By the Editor’s count, this level of participation is second only to SC. Let’s get to it, then.

ICL’s Ahmad Abdelfattah hosted a two-part mini symposium on research activities with Batched BLAS. First, participants of the symposium talked about the standardization of the Batched BLAS API, as well as the next-generation BLAS to support reproducibility and extended precisions. Next, libraries from different vendors and research groups that provide optimized Batched BLAS routines on different hardware architectures were discussed. Finally, several scientific applications where optimized Batched BLAS have a significant impact as critical building blocks were described. In addition to hosting the symposium, Ahmad also participated by giving a talk about the developments of Batched BLAS within ICL’s MAGMA library, called “Batched BLAS Moving Forward in MAGMA.”

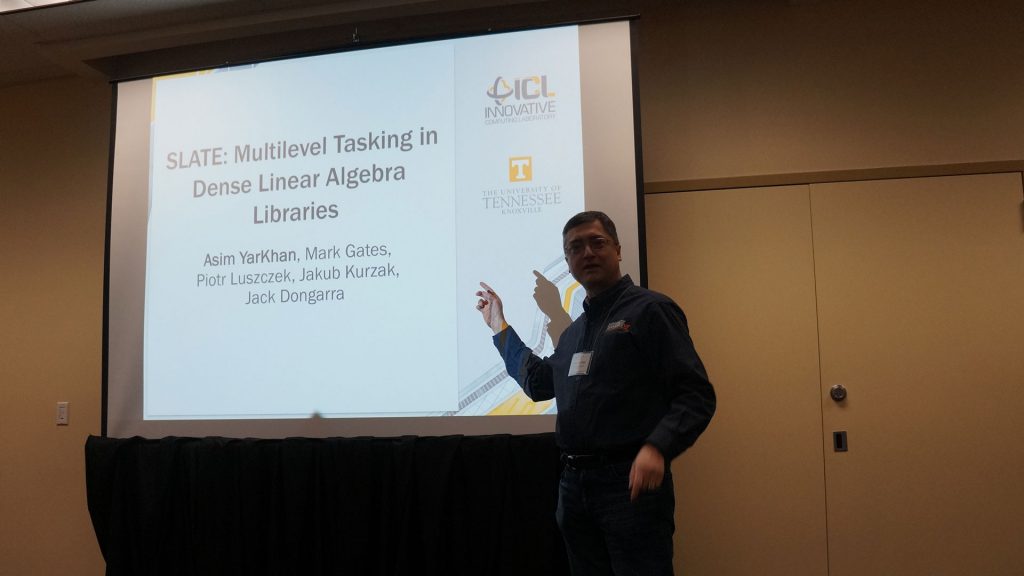

In the “Task-Based Programming for Scientific Computing: Linear Algebra Applications” mini symposium, Asim YarKhan discussed the SLATE project’s use of dynamic task scheduling using OpenMP in his presentation called, “SLATE: Multilevel Tasking in Dense Linear Algebra Libraries.”

Unsurprisingly, fault tolerance enthusiast Aurelien Bouteiller participated in the “Resilience for Large-Scale CSE Applications” mini symposium (for which George Bosilca served as co-organizer) and presented ICL’s work on extending the User-Level Failure Mitigation approach to modular application design.

George Bosilca presented in the “Task-Based Programming for Scientific Computing: Runtime Support” mini symposium. His talk, “Dynamic Algorithms with PaRSEC,” described an extension to the PaRSEC task-based runtime that can provide users with a domain-specific language to enable the dynamic description of dataflow—an approach to improve the performance portability and maintainability of code.

In ICL’s continued assault on SIAM CSE, Ichitaro Yamazaki presented collaborative work on “Distributed Memory Lattice H-Matrix Factorization,” where he described the lattice H-matrix format—a generalization of the block low–rank (BLR) format that stores each of the matrix blocks (lattices) in an H-matrix format. The aim is to obtain the parallel scalability of BLR along with the linear complexity of H-matrices—thereby reducing the cost of factorization and improving factorization performance.

Jack was also on hand to give a presentation in the “Mitigating Communication Costs using Variable Precision Computing Techniques” mini symposium. Jack’s talk, “Experiments with Mixed Precisions Algorithms in Linear Algebra,” describes the MAGMA team’s (and Azzam Haidar’s [NVIDIA]) work on using low-precision floating-point arithmetic (FP16) on NVIDIA GPU Tensor Cores to speed up linear solvers. Adding FP16 (half) to the more traditional FP32 (full) and FP64 (double) precision methods results in significant performance increases when offloading FP16 matrix operations to the Tensor Core. This mixed-precision approach can accelerate real-world applications by up to a factor of 4×, with an energy savings of about 5× over traditional FP64, all while achieving the same accuracy as the FP64 computation.

In the “State-of-the-Art Autotuning” mini symposium, Jakub Kurzak—ICL’s autotuner-in-chief—presented new developments in the BONSAI project in “The Big Data Approach to Autotuning of GPU Kernels using the BONSAI toolset.” GPU kernels are commonly produced through automated software tuning, and BONSAI aims to produce a software infrastructure for large performance tuning sweeps across many GPUs. Currently, BONSAI provides two components to facilitate this objective: (1) the LANguage for Autotuning Infrastructure (LANAI) to generate and prune a large search space and (2) the Distributed ENvironment for Autotuning using Large Infrastructure (DENALI) to launch large tuning sweeps on a massive number of GPUs. Jakub’s talk provided a brief introduction of LANAI, which is an older BONSAI component, and a more detailed discussion of DENALI, which was implemented more recently.

Jamie Finney made his conference debut in the “mini symposterium” by presenting “SLATE: Developing Sustainable Linear Algebra Software for Exascale.” SLATE aims to be a sustainable replacement for Sca/LAPACK at exascale by: (1) applying contemporary software engineering techniques in hosting, development, documentation, automated testing, and continuous integration, (2) creating communication channels with academic and commercial application teams to actively engage the larger HPC community, and (3) using modern tools that enable these practices and techniques.

Mark Gates also presented in the Batched BLAS mini symposium (hosted by Ahmad and Stan), where he, succinctly, made “A Proposal for Next-Generation BLAS.” Working with frequent ICL collaborator Jim Demmel and others, Mark laid out plans for a next-generation BLAS API, with an easy-to-use, high-level interface, for both C++ and Fortran, and a low-level naming scheme at the library level. This new API is extensible and supports a variety of data types, extended precision, mixed precision, and reproducible computations while also improving error handling and propagation of nan and inf values.

Piotr Luszczek served as co-organizer for the “C++ in Computational Science Libraries and Applications” mini symposium in which he also presented ICL’s work on “Interfacing Dense Linear Algebra Libraries in C++.” As the title indicates, Piotr’s presentation outlines recent advances of the ISO C++ standard that enable the development of modern interfaces and added features to BLAS and LAPACK and their customized implementations. This includes the use of templates, structured binding, and range-based for-loops—all of which enable succinct expression of common functionality and data access patterns to expand the applicability of legacy APIs while maintaining the portability and performance of the new interface. For example, the C++ BLAS and LAPACK interfaces are already available in SLATE.

With no bears to wrangle or Forbes articles to crash, Stan “the Man” Tomov flew to Spokane where he presented “A Novel Approach to using Fused Batched BLAS to Accelerate High-Order Discretizations” in the Batched BLAS mini symposium. Stan outlined efforts to develop device (GPU) interfaces for Batched BLAS that can be used to construct custom, application-specific, high-order operator assembly/evaluation routines. This improves code readability; enables low-level optimizations to be offloaded to libraries with consistent interfaces (e.g., Batched BLAS), thereby providing performance portability; and simplifies the custom generation of new batched routines that minimize data movement. This approach is featured in the MAGMA backend for the CEED API library.

Last, but in no way least, Yaohung “Mike” Tsai presented “From Matrix Multiplication to Deep Learning: Are We Tuning the Same Kernel?” in the “Black-Box Optimization for Autotuning” mini symposium. Mike’s presentation describes the commonalities between neural layers in deep neural networks for image recognition and between direct convolution and a matrix-multiplication (GEMM) kernel. These similarities mean that autotuning can be used for both, and Mike et al. can correlate them along multiple axes—with performance being the most prominent one.

In addition to the gaggle of ICLers mentioned above, there were many more familiar faces at SIAM CSE 19, including Hartwig Anzt, Mawussi Zounon, Emmanuel Agullo, Marc Baboulin, Stephen Wood, Hatem Ltaief (thanks for the dates!), Pierre Blanchard, and Mathieu Faverge—among many others.

The editor would like to thank Ahmad, Azzam, Hartwig, Jakub, Jamie, Piotr, and Stan for their contributions to this article.

BDEC2: Kobe

The most recent Big Data and Extreme-Scale Computing2 (BDEC2) workshop was held on February 19–21 in Kobe, Japan and focused on the need for a new cyberinfrastructure platform to connect the HPC resources required for intense data analysis, deep learning, and neural networks to the vast amount of data generators that are rarely co-located with facilities capable of that scale of computing.

Hosted by the RIKEN Center for Computational Science in Kobe, BDEC2’s 70+ participants included application leaders confronting diverse, big-data challenges, alongside members from industry, academia, and government, with expertise in algorithms, computer system architecture, operating systems, workflow middleware, compilers, libraries, languages, and applications.

This workshop was the latest installment in a series of meetings in which eminent representatives of the scientific computing community are endeavoring to map out the ways in which big-data challenges are changing the traditional cyberinfrastructure paradigm. The BDEC2 working groups were faced with seven questions, the possible answers to which will be outlined in a collaborative document that is underway:

- What innovation is required to address our new machine learning–everywhere world; how will model sharing and reuse change our platforms?

- How should commercial (cloud) platform providers be integrated into the vision/plan?

- How can we achieve an abstract programming model that can run across the digital continuum?

- What are the (minimal) services required—edge-to-cloud? Cloud-to-edge?

- What enhancements or changes are needed to make HPC systems fit as seamlessly as possible into to this picture?

- How will we compose (and share) resources across the continuum?

- What do we need to reinvent from scratch (and throw away the old way)?

The ICL team played a prominent role in this meeting, with Jack Dongarra serving as co-chair, Terry Moore acting as a key BDEC conductor, David Rogers designing the meeting’s website and logo, and Tracy Rafferty and Sam Crawford coordinating and providing additional support on site.

For more information on the BDEC effort, please check out the most recent BDEC report, “Pathways to Convergence: Towards a Shaping Strategy for a Future Software and Data Ecosystem for Scientific Inquiry.”

The next BDEC2 meeting is slated for May 15–16, 2019 in Poznań, Poland.

The editor would like to thank Tracy Rafferty, Terry Moore, and Jack Dongarra for their contributions to this article—and for bringing him along.

Recent Releases

PAPI 5.7 Released

PAPI 5.7 is now available. This release includes a new component, called “pcp,” which interfaces to the Performance Co-Pilot (PCP) and enables PAPI users to monitor IBM POWER9 hardware performance events, particularly shared “NEST” events, without root access.

This release also upgrades the (until now read-only) PAPI “nvml” component with write access to the information and controls exposed via the NVIDIA Management Library. The PAPI “nvml” component now supports measuring power usage and capping power usage on recent NVIDIA GPU architectures (e.g., V100).

The PAPI team has also added power monitoring as well as PMU support for recent Intel architectures, including Cascade Lake, Kaby Lake, Skylake, and Knights Mill (KNM). Furthermore, measuring power usage for AMD Fam17h chips is now available via the “rapl” component.

For specific and detailed information on changes made for this release, see ChangeLogP570.txt. For filenames, keywords of interest, and change summaries, go directly to the PAPI git repository.

Some major changes for PAPI 5.7 include:

- Added the component PCP (Performance Co-Pilot, IBM) which enables access to PCP events via the PAPI interface;

- Added support for IBM POWER9 processors;

- Added power monitoring support for AMD Fam17h architectures via RAPL;

- Added power capping support for NVIDIA GPUs;

- Added benchmarks and testing for the “nvml” component, which enables power-management (reporting and setting) for NVIDIA GPUs;

- Re-implemented the “cuda” component to better handle GPU events, metrics (values computed from multiple events), and NVLink events, each of which have different handling requirements and may require separate read groupings;

- Enhanced NVLink support and added additional tests and example code for NVLink (high-speed GPU interconnect); and

- Extended the test suite with more advanced testing:

attach_cpu_sys_validate,attach_cpu_validate,event_destroytest,openmp.Ftest, andattach_validatetest (rdpmc issue).

Other changes include:

- ARM64 configuration now works with newer Linux kernels (≥3.19);

- As part of the “cuda” component, expanded CUPTI-only tests to distinguish between PAPI or non-PAPI issues with NVIDIA events and metrics;

- Corrected many memory leaks, though some 3rd party library codes still exhibit memory leaks;

- Added better reporting and error handling for bugs and made changes to “infiniband_umad” name reporting to distinguish it from the “infiniband” component; and

- Cleaned up source code, added documentation and test/utility files.

Acknowledgments: This release was made possible by the efforts of many contributors. The PAPI team would like to express special thanks to Vince Weaver, Stephane Eranian (for libpfm4), William Cohen, Steve Kaufmann, Phil Mucci, and Konstantin Stefanov.

Click here to download the tarball.

Interview

Jiali Li

Where are you from, originally?

I am from Guiyang City, which is the capital of the Guizhou Province in China. Guiyang is in the southwest of China—on the eastern side of the Yungui Plateau.

Can you summarize your educational background?

I earned my bachelor’s degree in Electrical Engineering from Dalian Maritime University, China, in 2016. After that, I started my PhD studies in Computer Engineering at the University of Tennessee in 2017. I changed my major to Computer Science and joined ICL in June 2017.

How did you first hear about ICL, and what made you want to work here?

I had heard of Oak Ridge National Laboratory (ORNL) before I came to the United States. Upon learning more about what they do at ORNL, I learned about computational science and supercomputing, and—eventually—I learned about the TOP500 list, Prof. Dongarra, and ICL.

What is your focus here? What are you working on?

My research work focuses on developing a toolkit that can automatically categorize vast hardware counters based on their performance characteristics and create the corresponding mapping from actual characteristics to ambiguous hardware events.

What would you consider your most valuable “lesson” you have learned so far at ICL?

ICL provides plenty of great opportunities and benefits for students and research scientists who work here. Nearly every Friday, we have a lunch seminar, and ICL will invite a speaker to give a presentation. These presentations are a good opportunity to learn more about HPC from the perspectives of our different research groups/areas. The lunch gathering also provides an opportunity to communicate with the other researchers and possibly work together on something new.

What are your interests/hobbies outside of work?

I enjoy outdoor sports in my spare time. For most of the year, the weather in Knoxville is very mild and suitable for outdoor running and cycling, and the Smoky Mountains provide a good place for hiking.

Tell us something about yourself that might surprise people.

I have participated in a marathon, and I will participate in another marathon in March 2019.

If you weren’t working at ICL, where would you like to be working and why?

I love challenging problems and would like to devote myself to scientific research work. If I was not working at ICL, I may still work as a research assistant at a academic research group in either computer science or engineering.